Macaw Overview

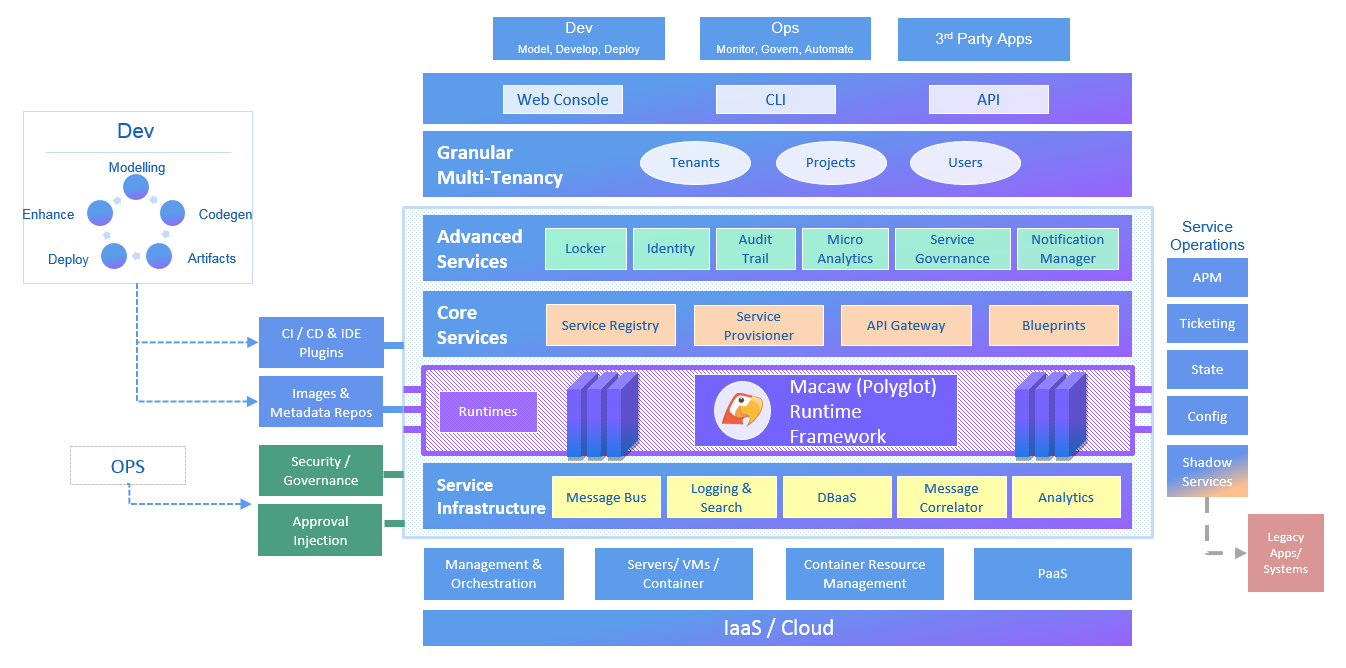

Macaw is an enterprise grade prescriptive Microservices platform. Macaw provides a comprehensive toolset, many built-in core services, CI/CD integrations, management & operational capabilities, and cloud agnostic deployment to accelerate the enterprise cloud native journey.

- API modeling in JSON or YANG and Swagger documentation for Microservices, or a collection of Microservices called blueprints.

- Codegen, build and publish toolset for Microservice development. Java, Javascript language for service implementation, with further language support coming in the future.

- Several built-in core, essential services like database (Cassandra, MySQL), logging (Elasticsearch), identity with multi-tenancy, etc. for rapid application development and a focus on business logic.

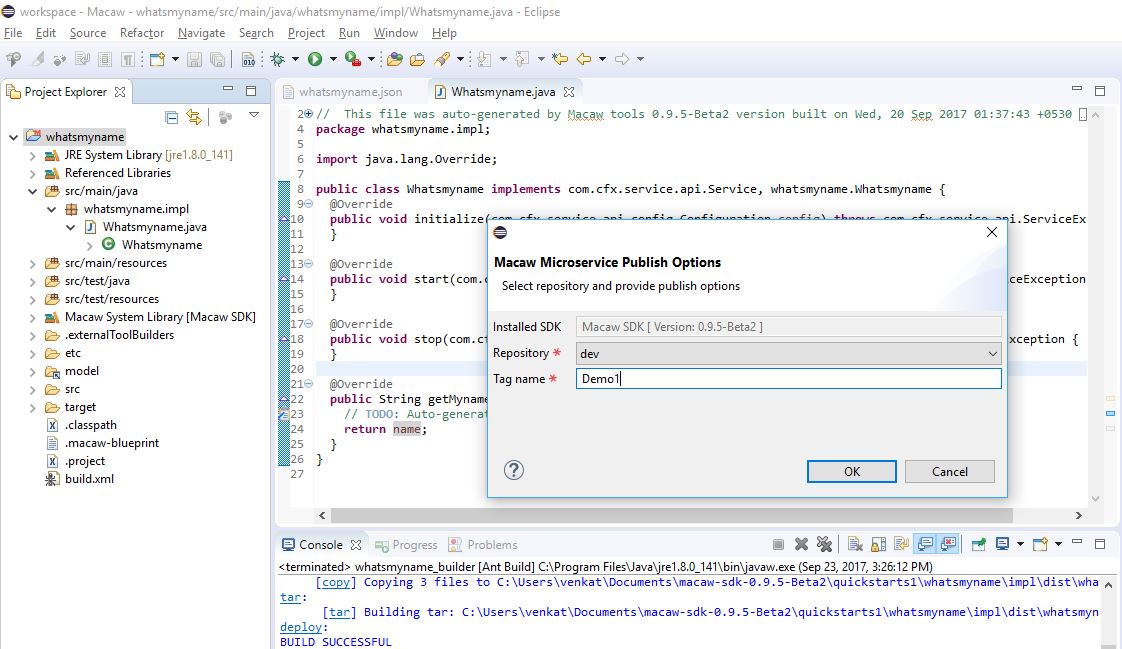

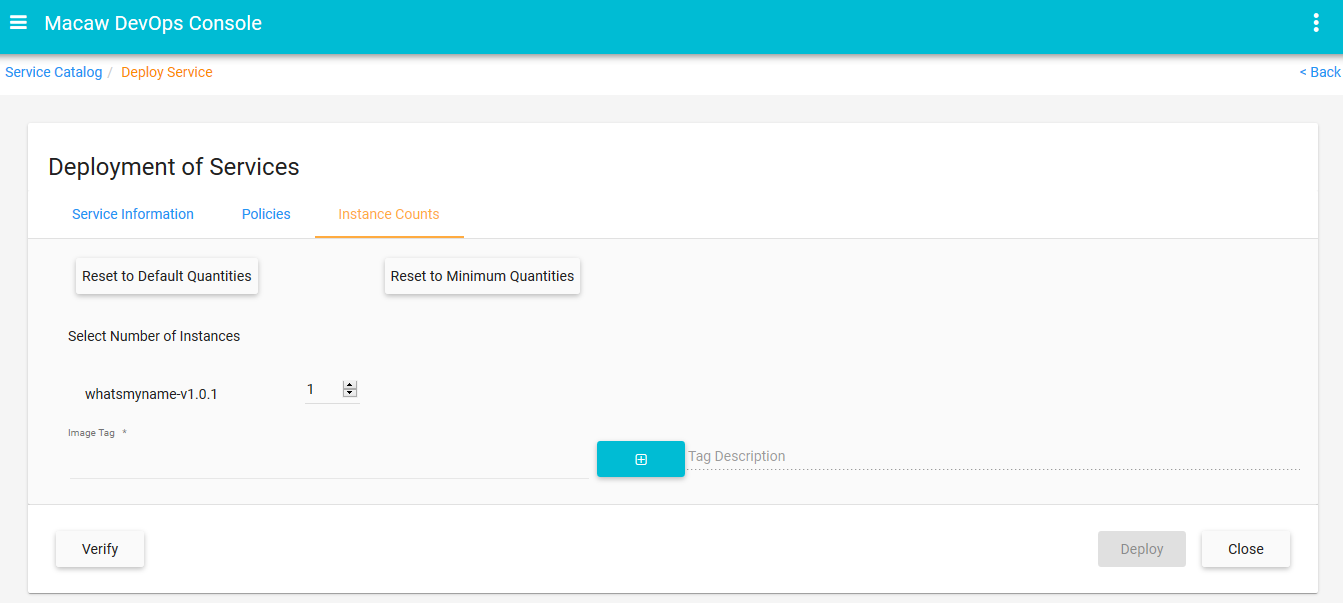

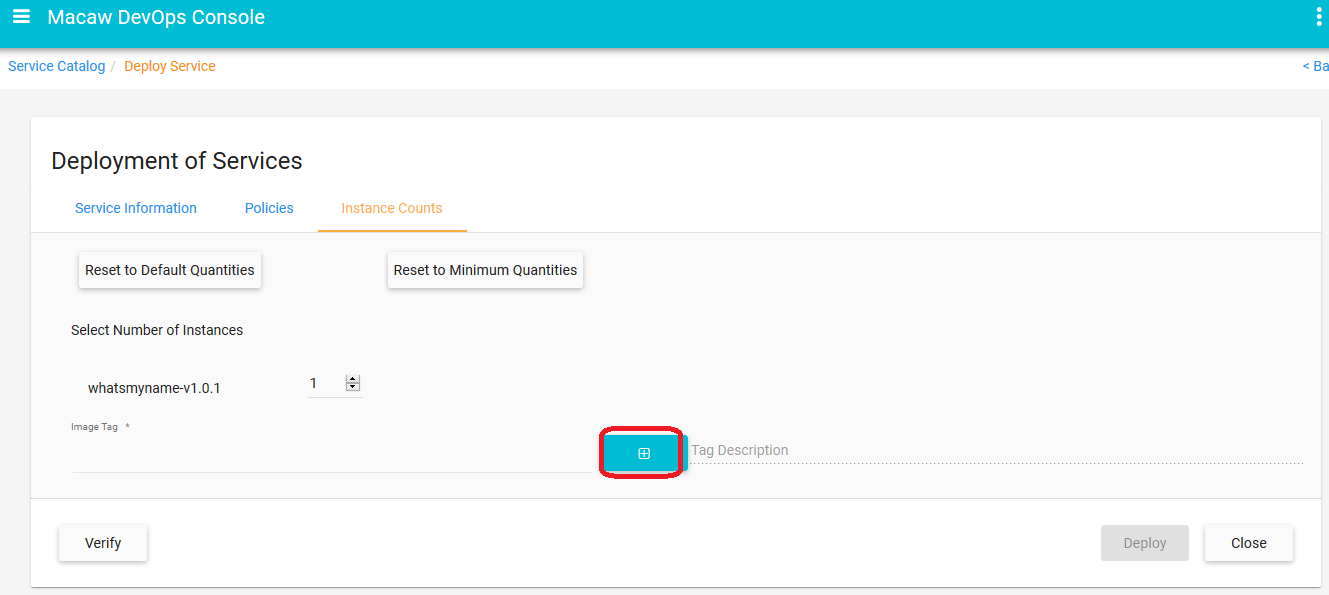

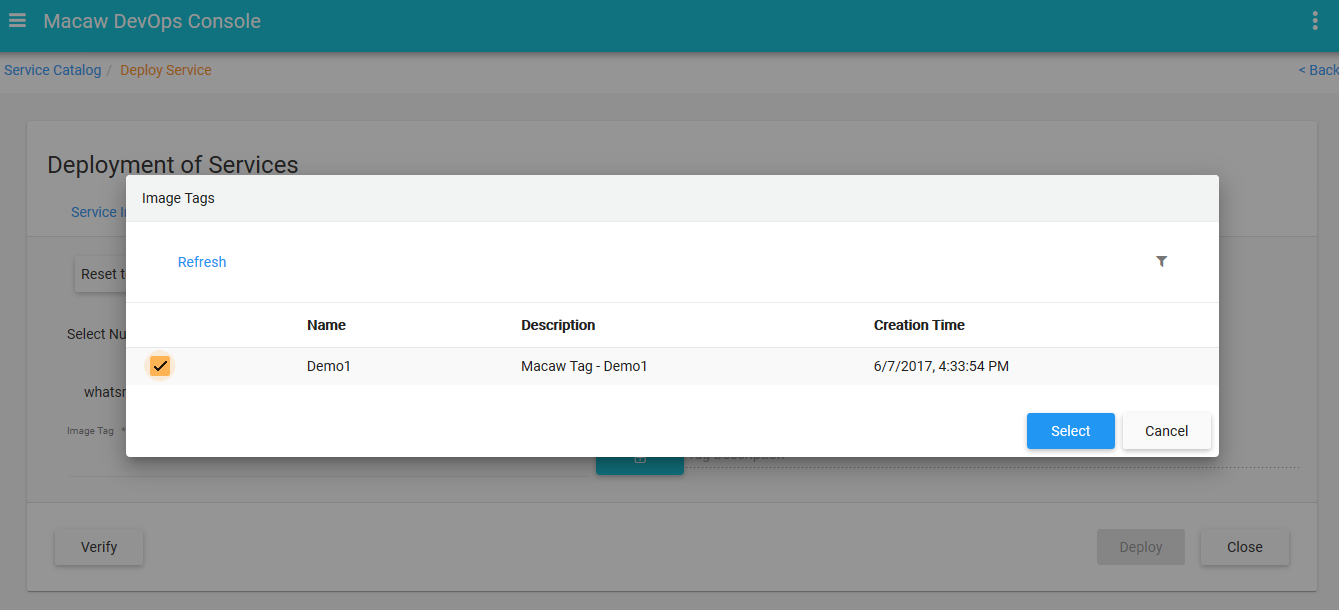

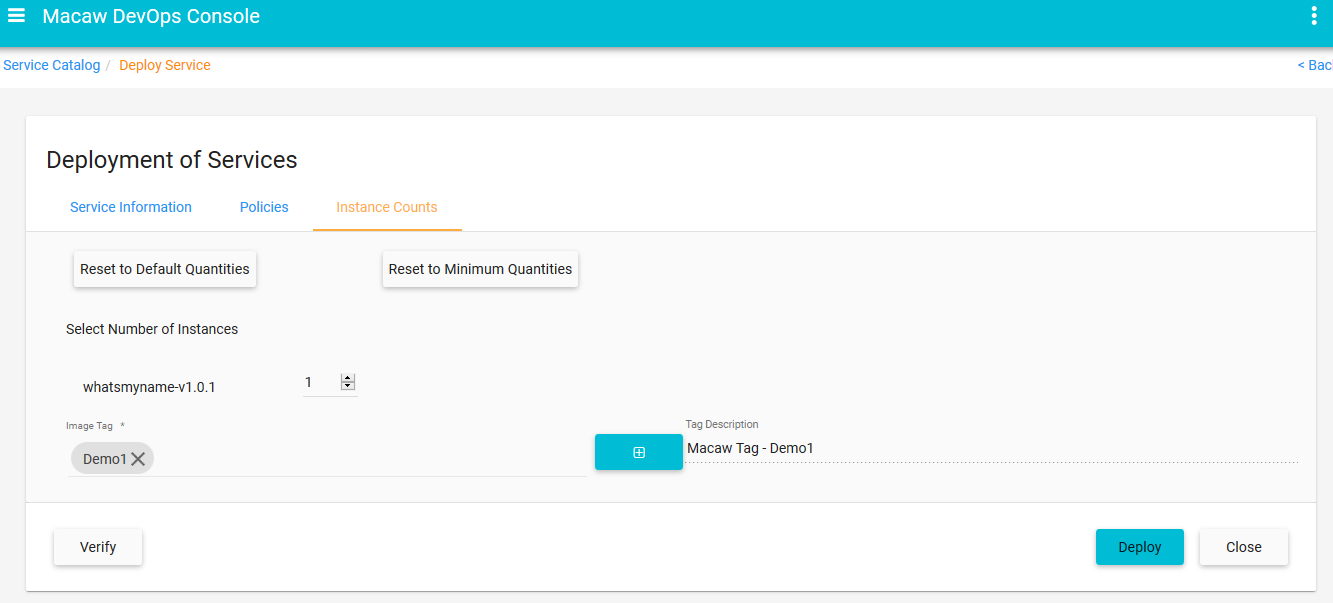

- Microservice metadata allows for standardization, auditing, policy enforcement, and service discovery & provisioning. Microservice tag identifies a specific code commit and can be used for release/sprint progression, staging or production usage.

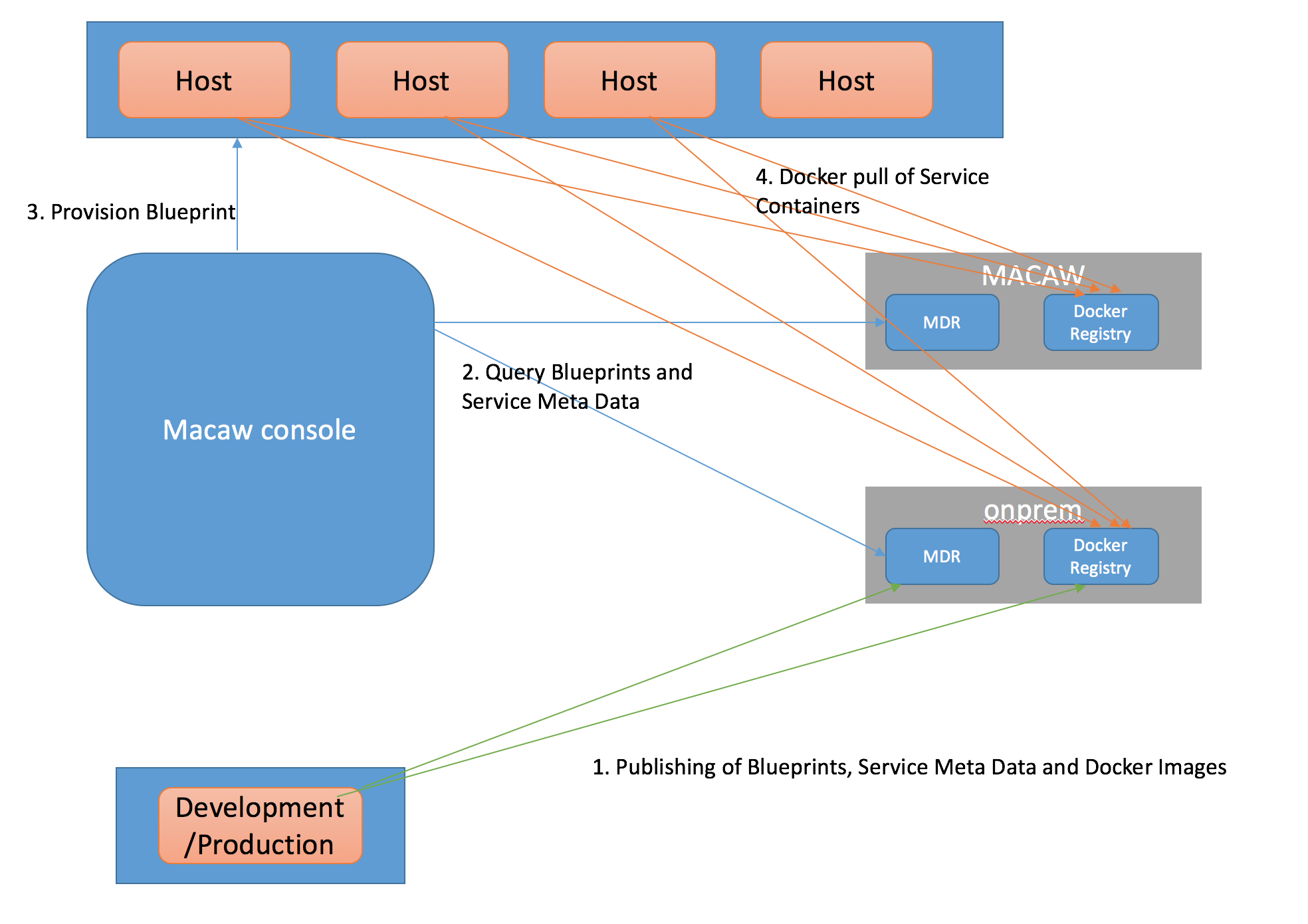

- Macaw microservices are packaged as Docker containers. MDR (Meta Data Repository) holds the service blueprints, Metadata information, available Docker tags for a specific service. Docker registry holds service container images.

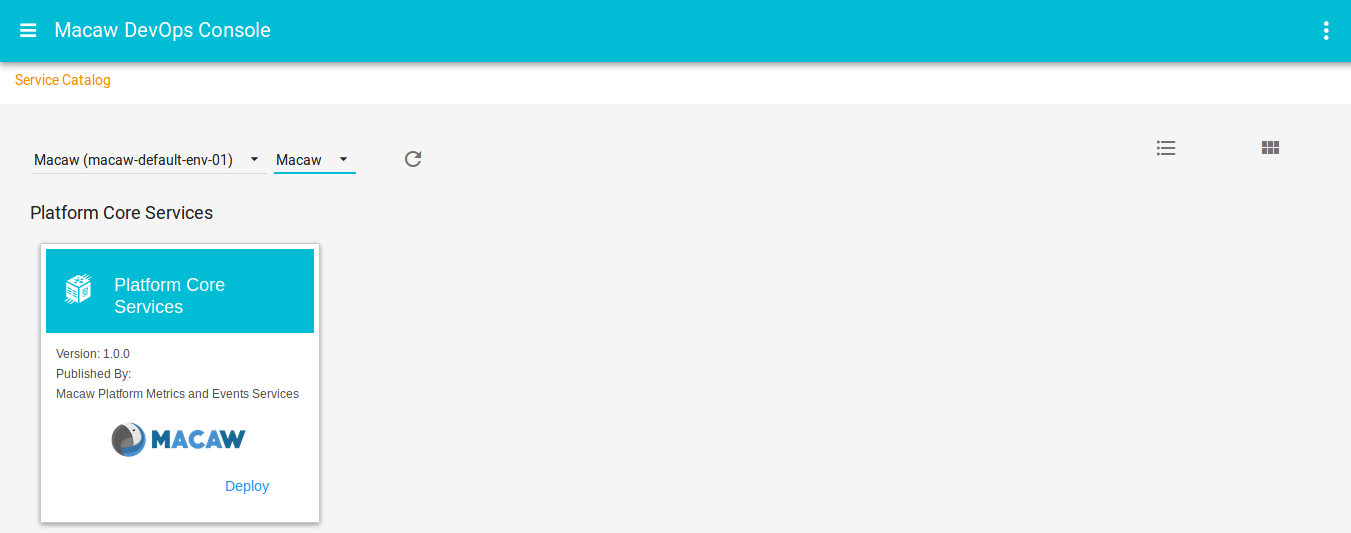

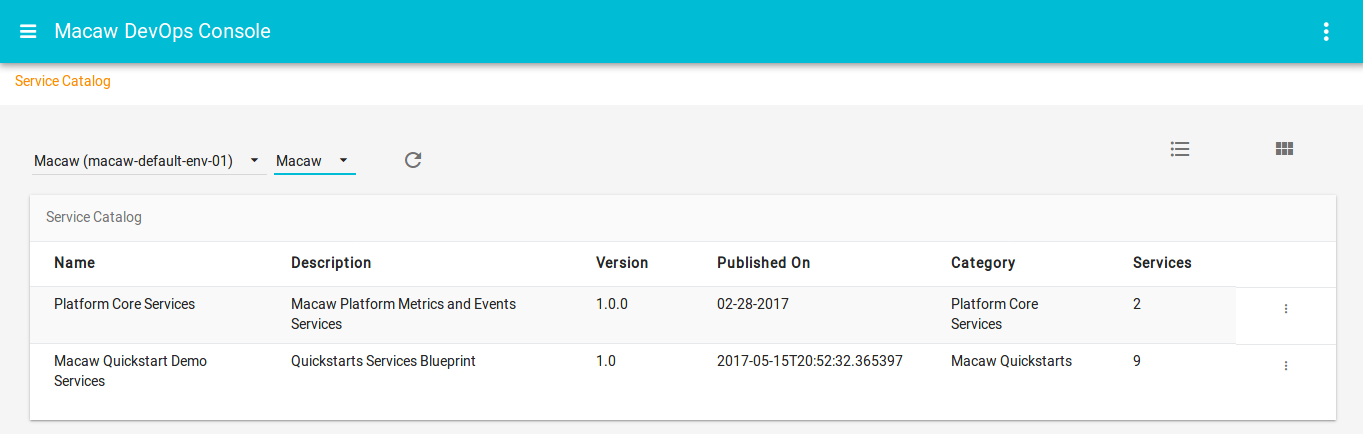

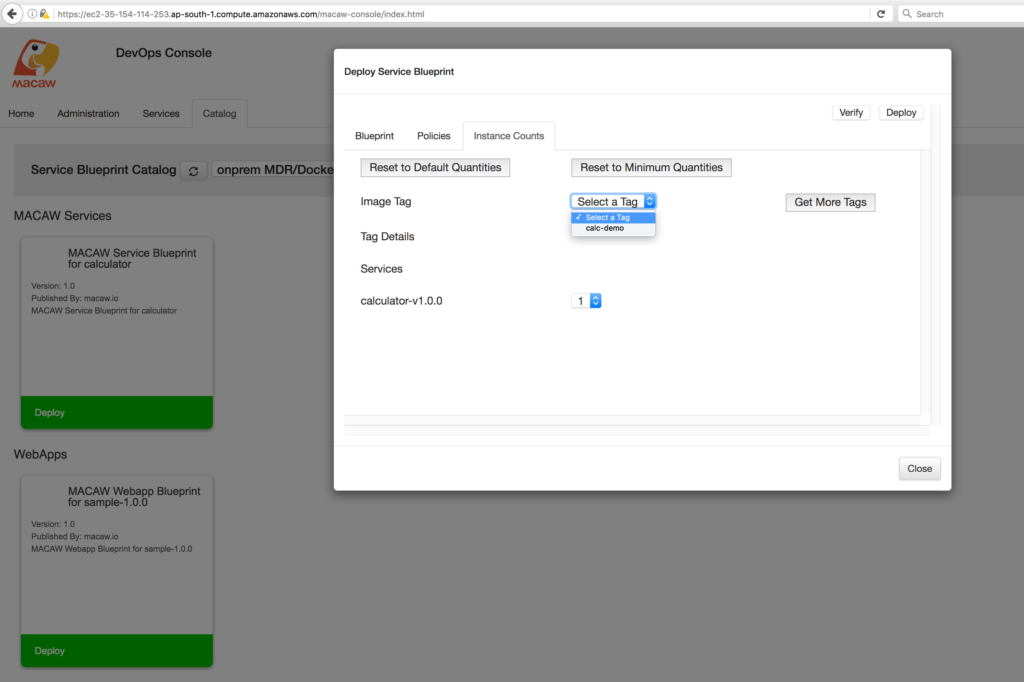

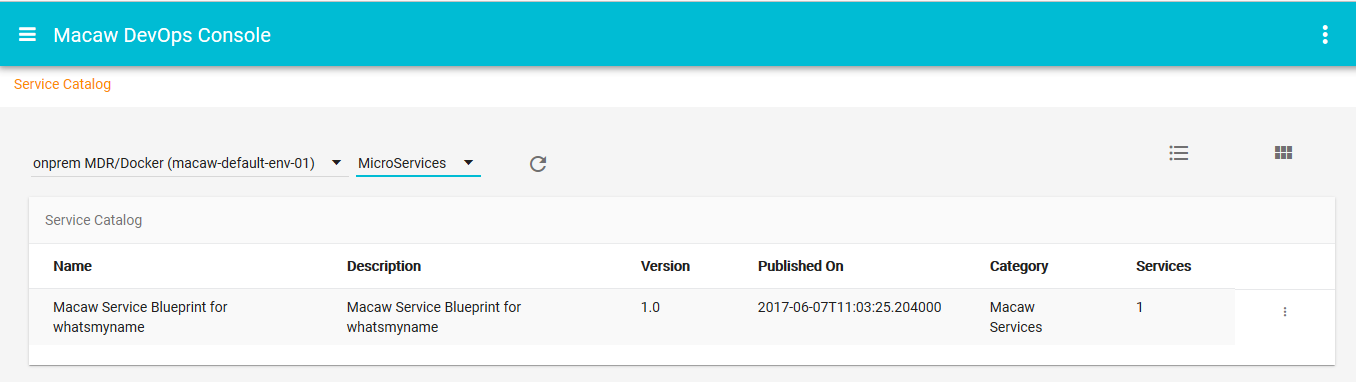

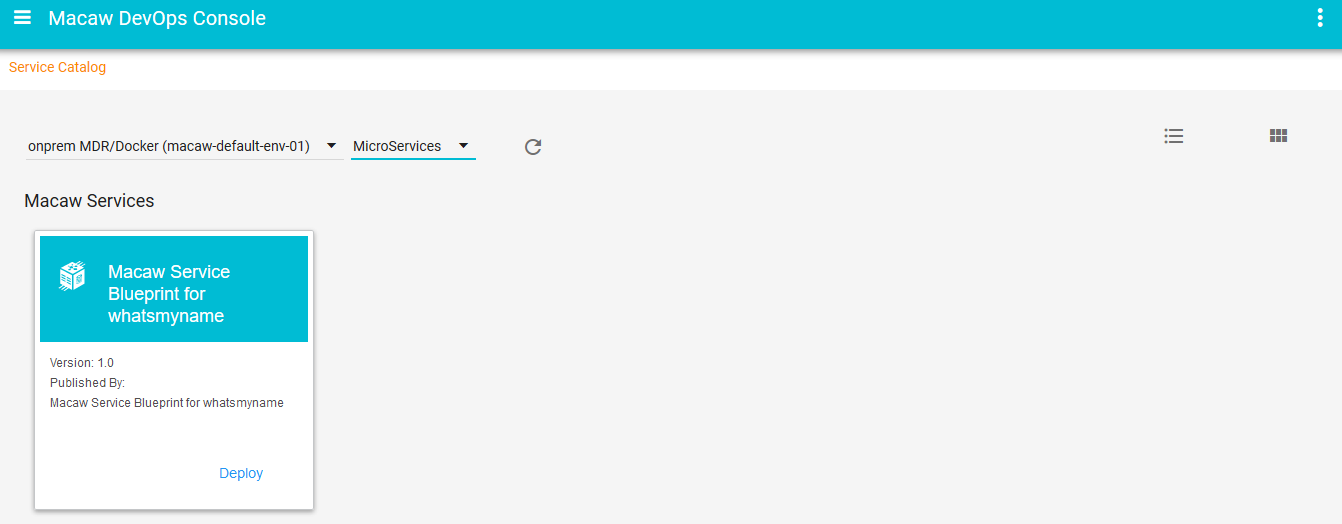

- Macaw service catalog serves as a marketplace and presents Microservices that are available in selected MDR/Docker repositories, which can be private, shared or public.

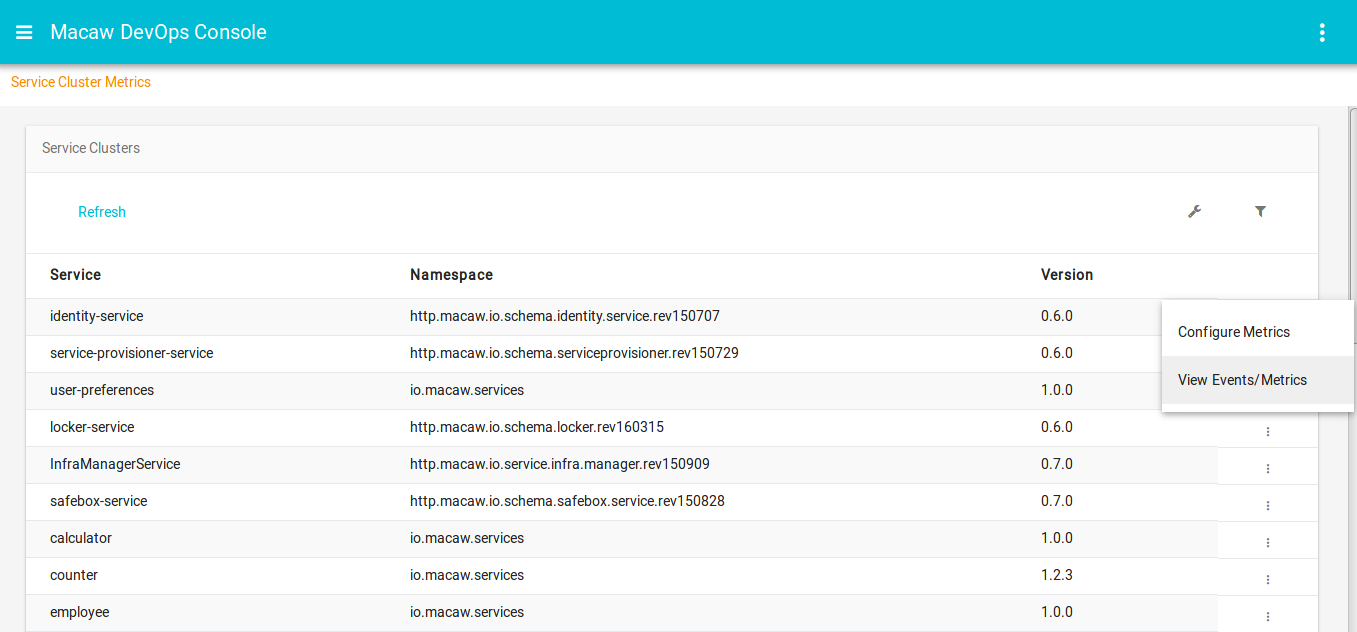

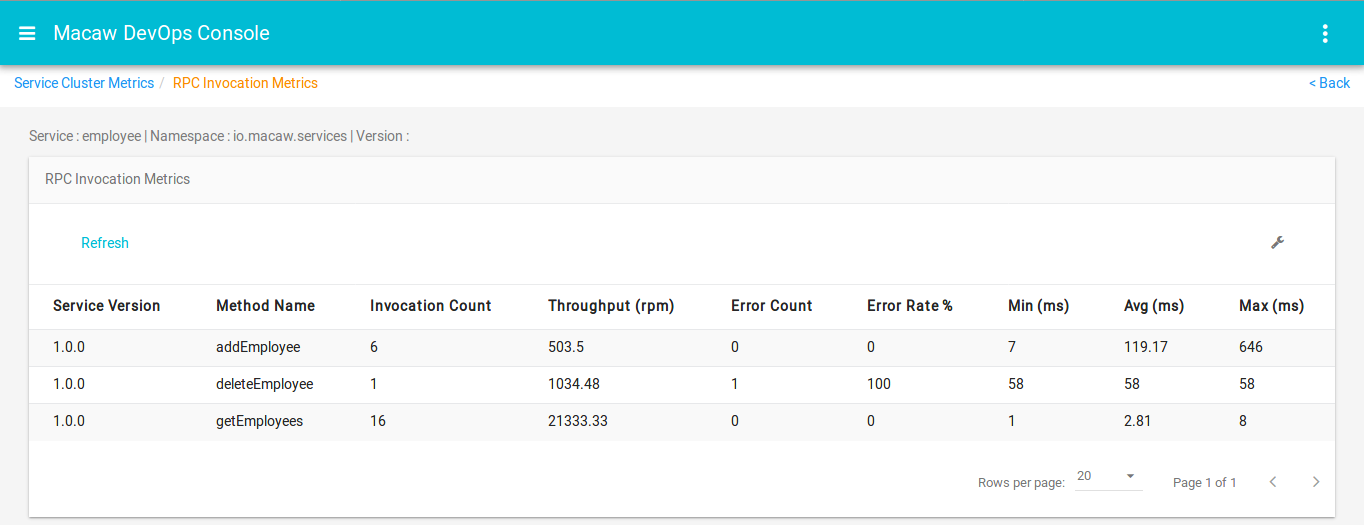

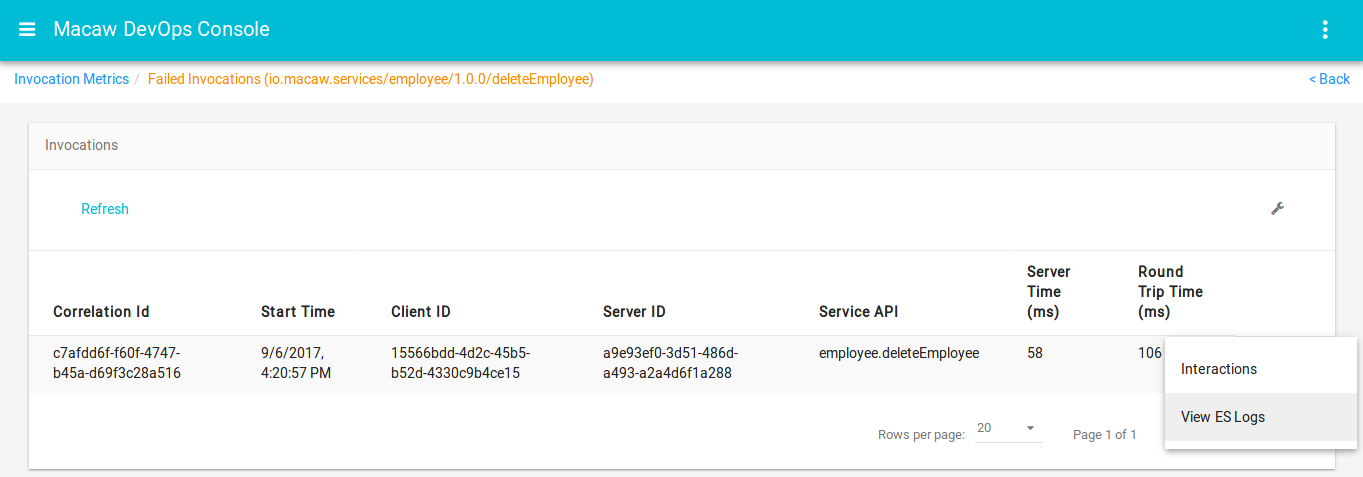

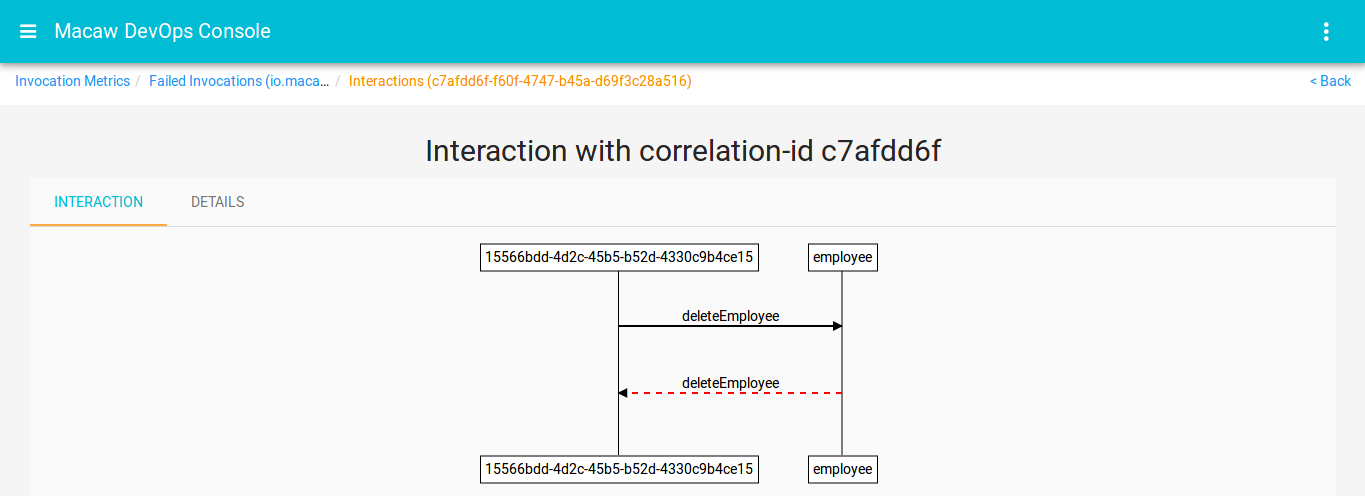

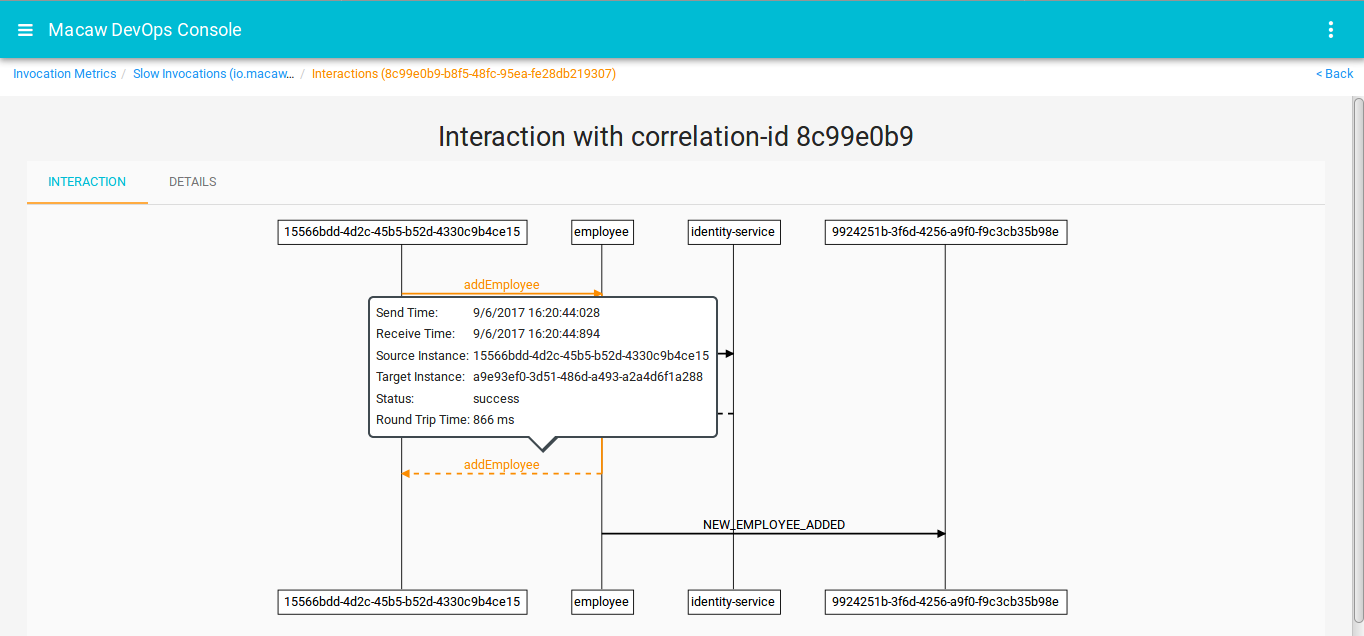

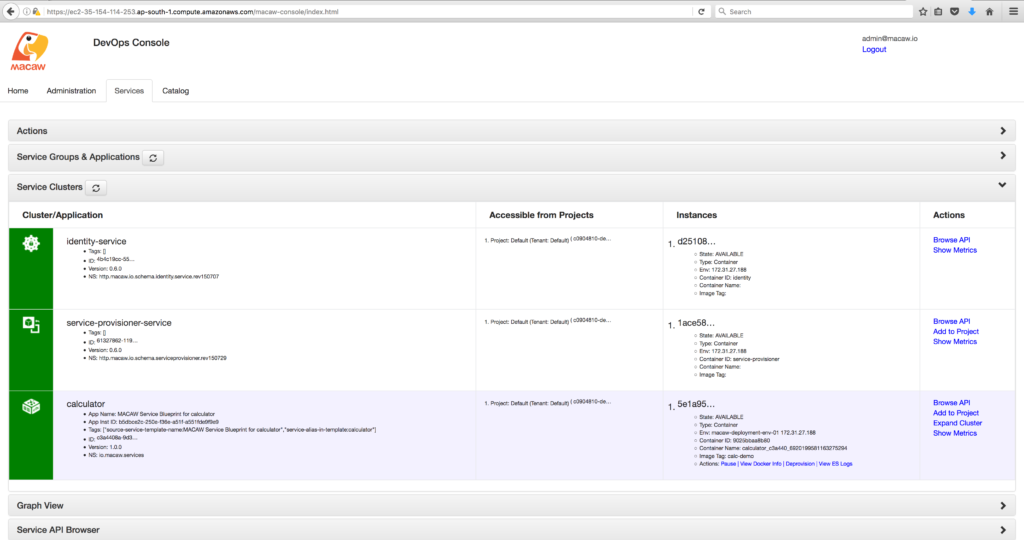

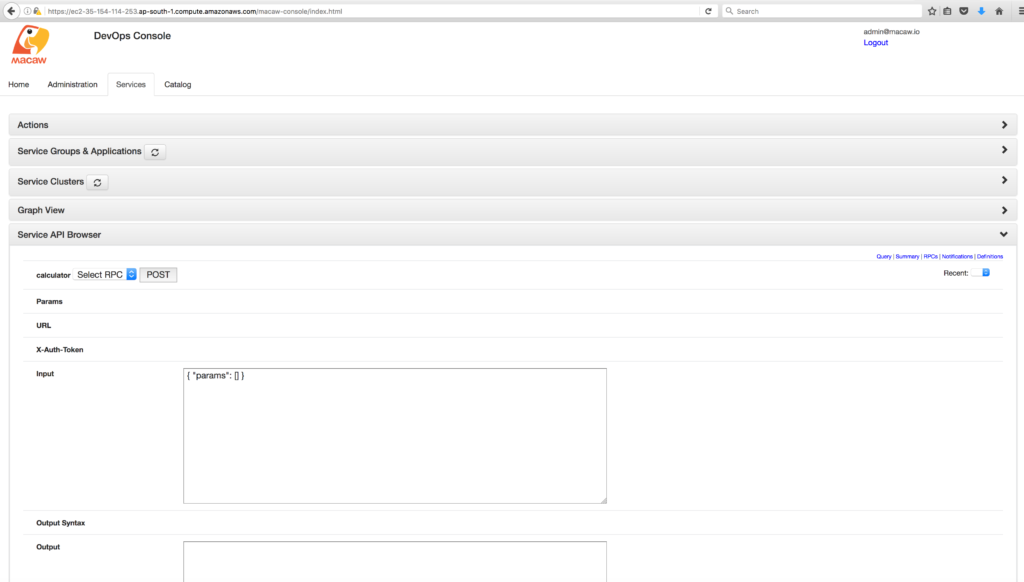

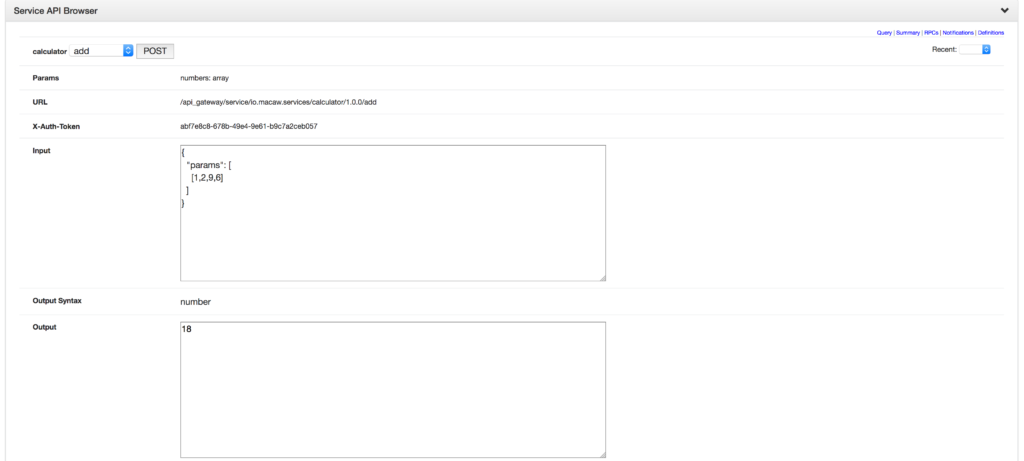

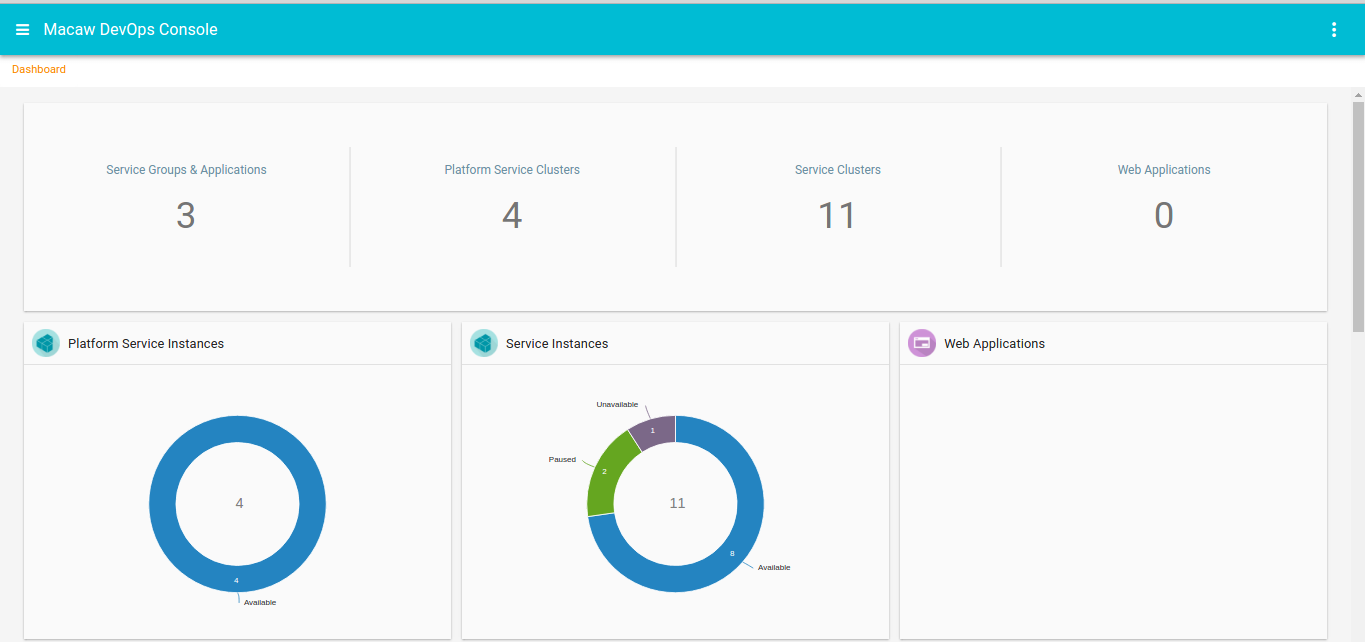

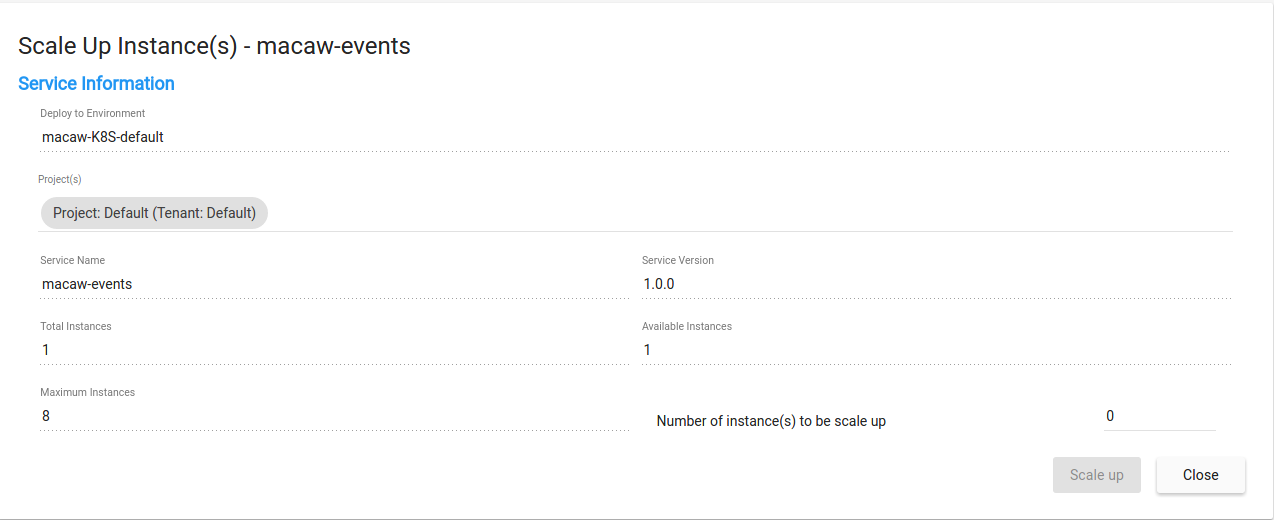

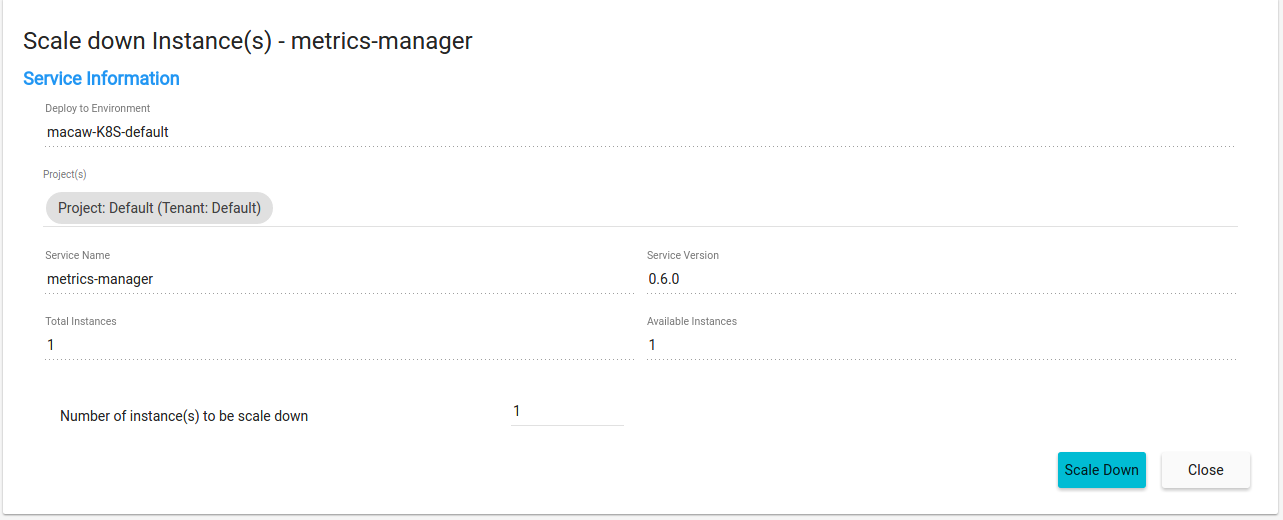

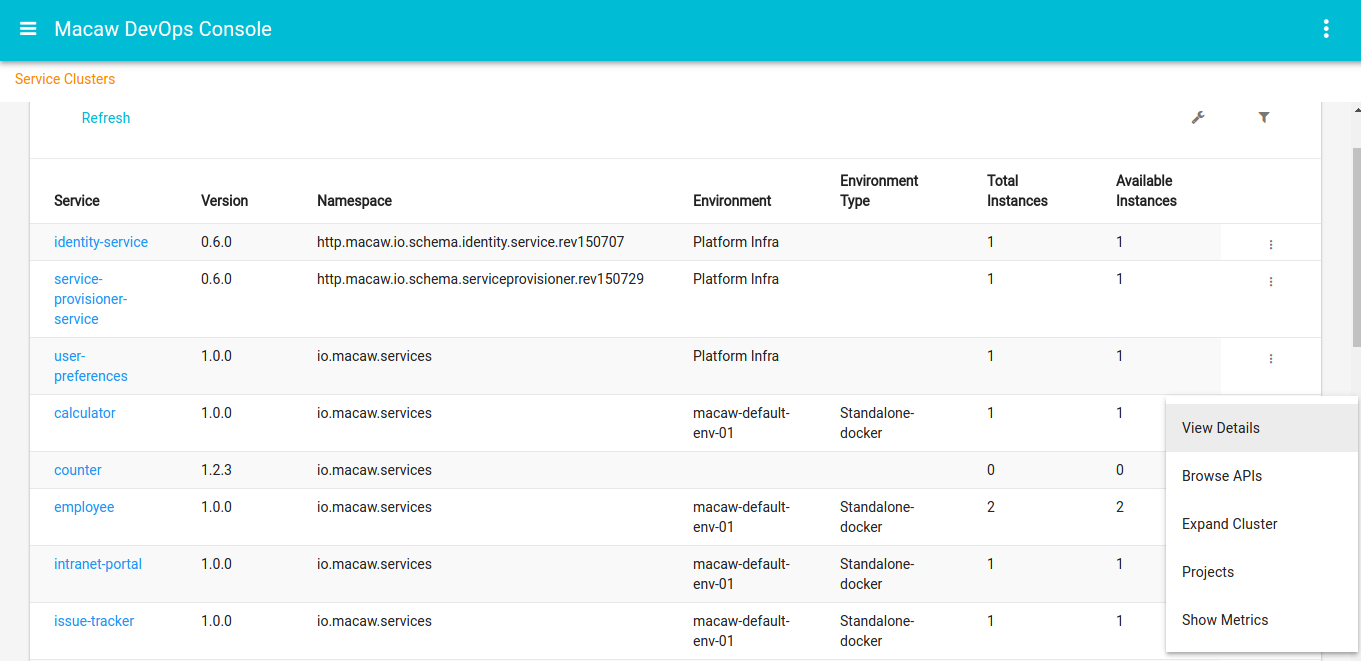

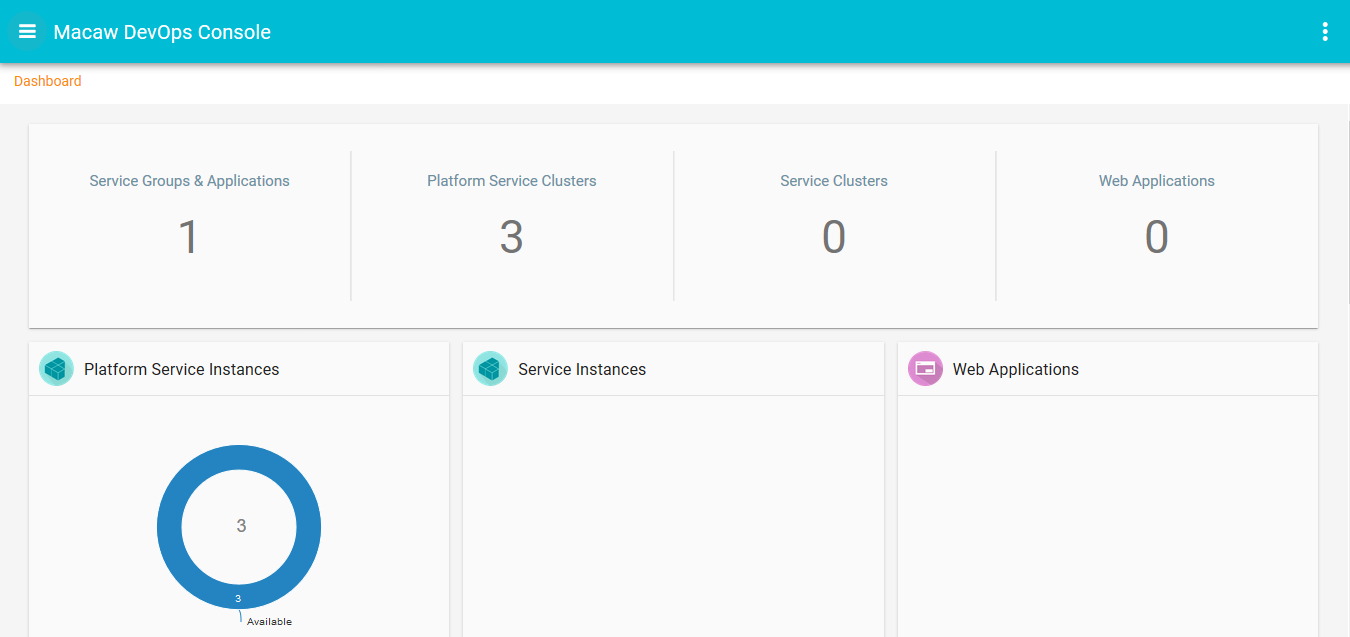

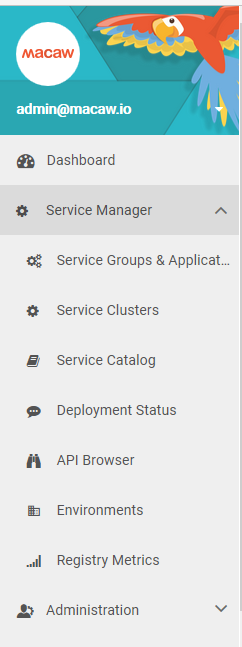

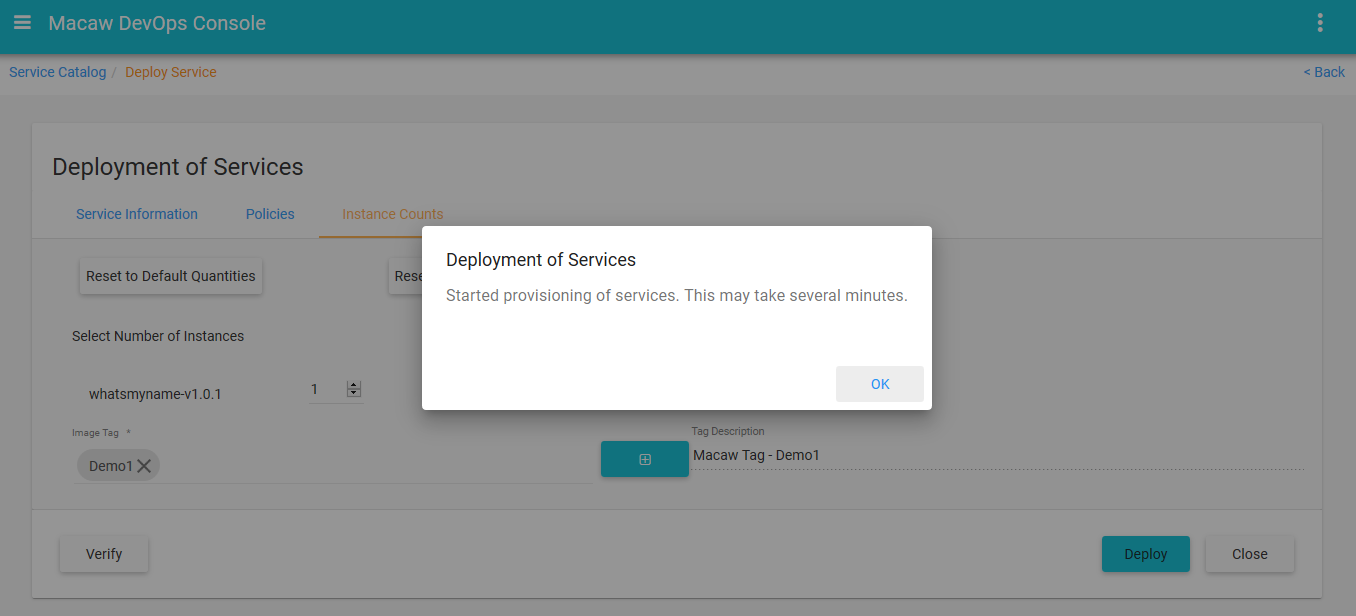

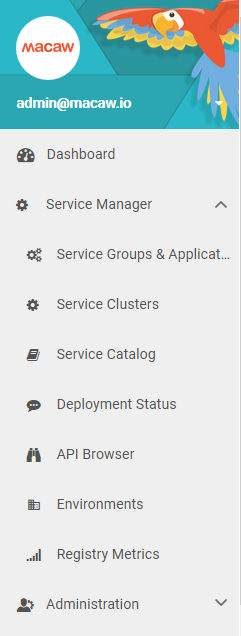

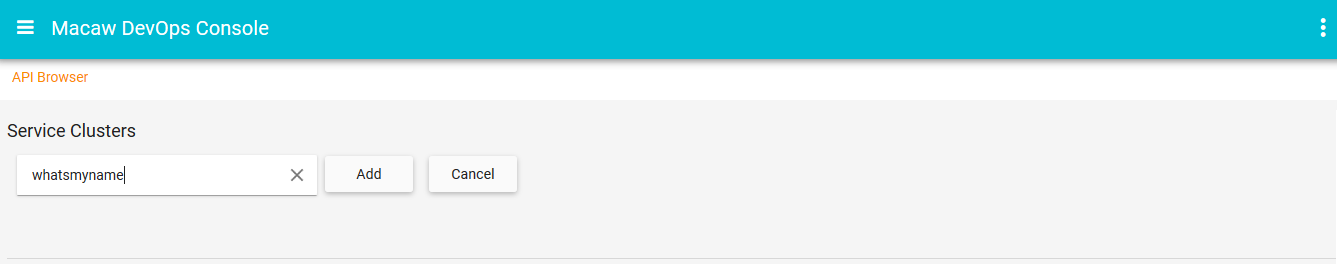

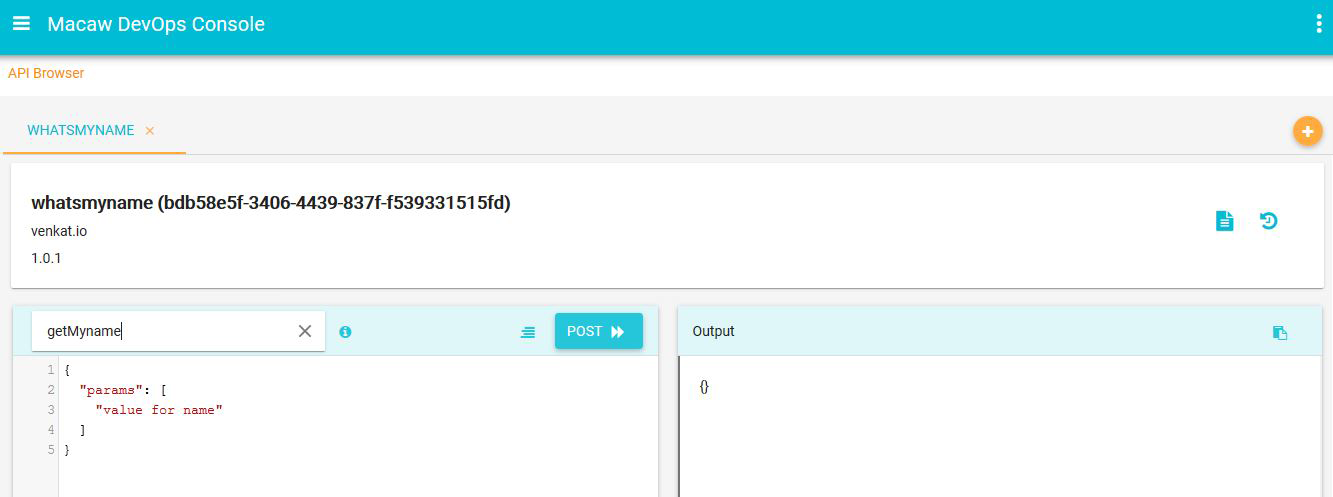

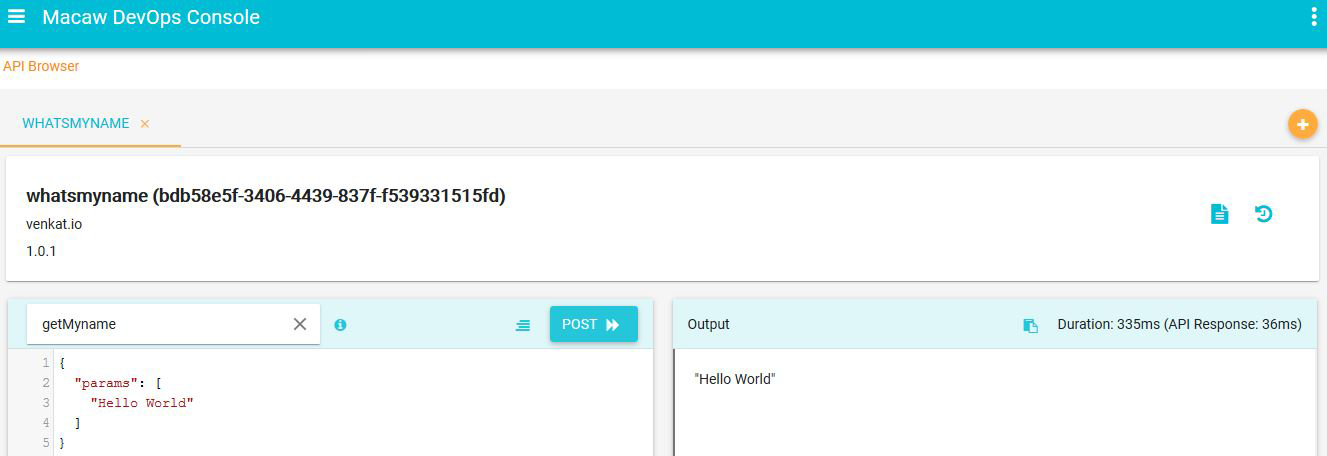

- Macaw provides Macaw CLI and a graphical web-based console to deploy, scale, and administer (monitor, troubleshoot) the platform and microservices. Message co-relation and event visualization serve as essential troubleshooting and analytics tools.

- From a runtime standpoint, Macaw is a distributed application running on top of one or more standalone Docker nodes that host Macaw microservices. Messaging, routing, and load balancing among the nodes and services are achieved using Kafka & Zookeeper over SSL. Front-end UI messages are load balanced through HAProxy. For service-level resiliency, Macaw creates multiple instances of a Microservice and operates it as a cluster using its native placement, load balancing algorithm.

- Macaw can be configured to send container placement requests to a resource scheduler like Kubernetes or Docker Swarm, which will assume responsibility of container placement and high availability.

- Macaw offers several installation choices. For on-premise, Macaw can be installed on the developer’s machine (Mac/PC/Linux) using Vagrant and VirtualBox, or on VMware vSphere. For cloud, Macaw AMIs are available on AWS Cloud. For other clouds, Macaw can be installed on top of CentOS-based Linux nodes using installer scripts.

Key Features

- Multi-Cloud Support: Runs on any cloud, public or private, and support on most on-premises infrastructure platforms.

- Container ready: Built-in support for standard container formats like Docker, making it easy to build, compose, deploy and move your workloads easily.

- Built-in security and scalability: Enterprises can define fine-grained policies for access management, scaling, and other life cycle management operations that encourages the platform natively support them. Its built-in workflow manager can be used to embed approval steps where required to provide an additional layer of controls.

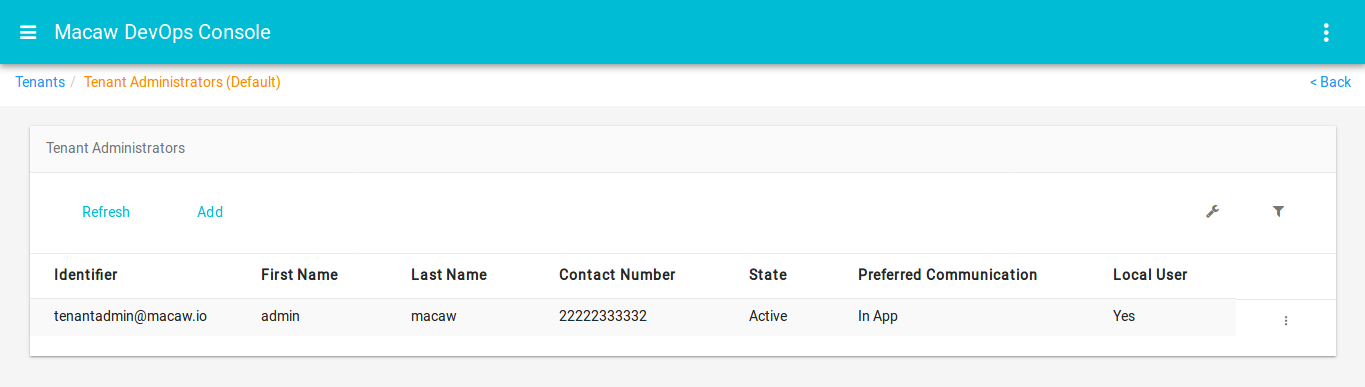

- Multi-Tenancy: Service providers and the Enterprise can achieve clear isolation and assure autonomy by enforcing policies at the tenant, project, user, or service levels.

- Self-governance: Built on the core principle of providing pervasive governance to ensure consistent operational behavior from availability, performance, compliance, and a security point of view is provided.

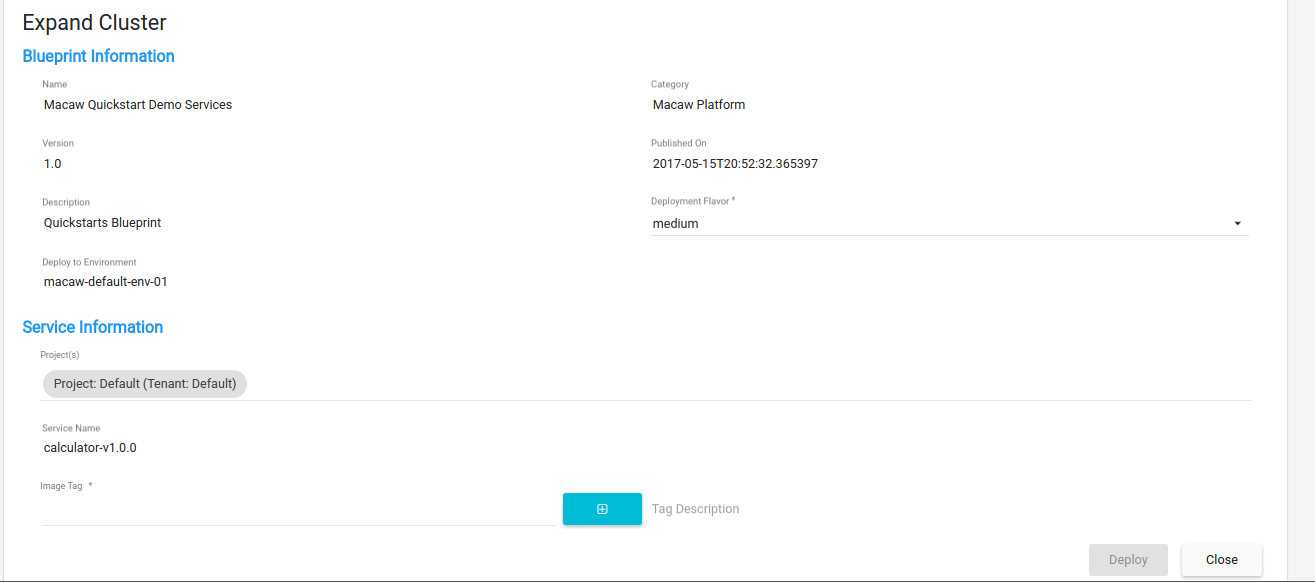

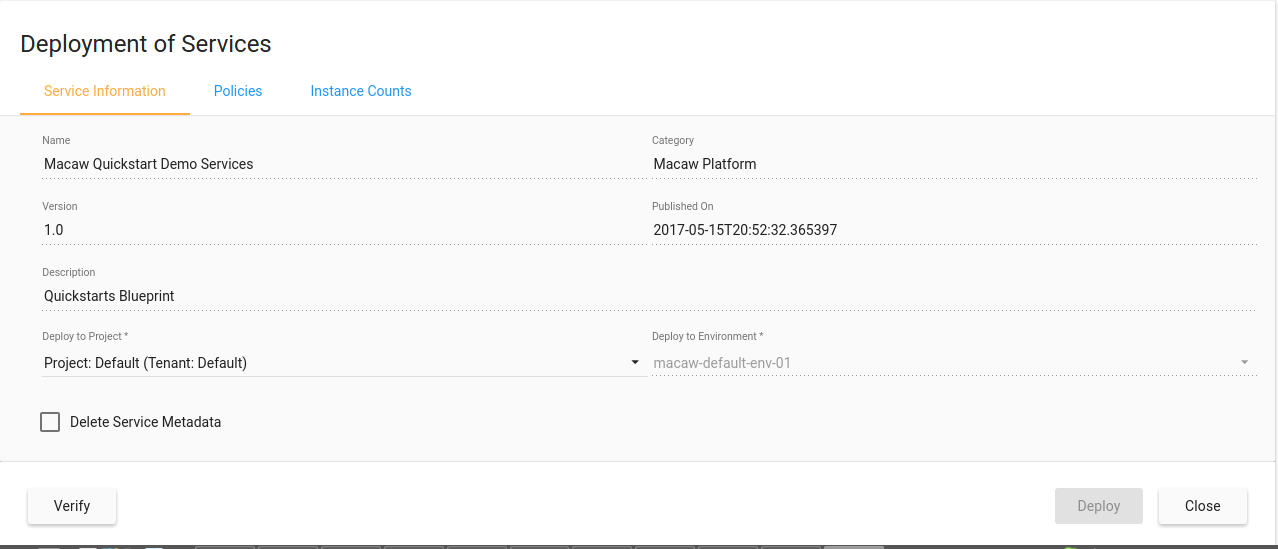

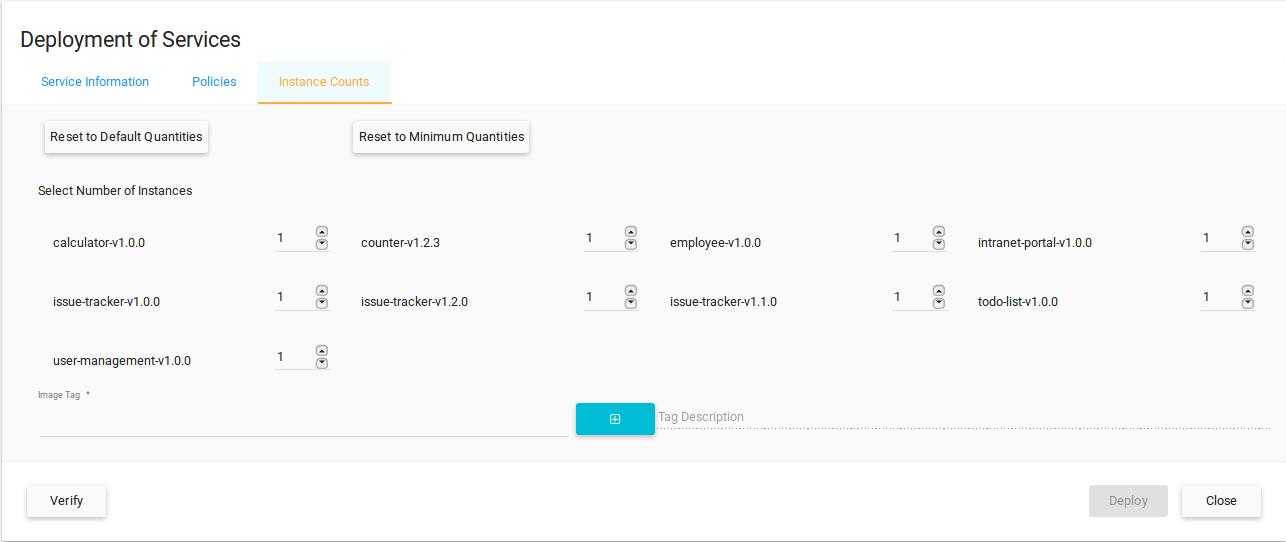

- Blueprint support: Developers and architects can group services into a logical entity called Blueprint with common scaling policies and access controls. Once modeled these blueprints can be used to deploy the group of services with a single click and manage the life cycle as per the policies specified.

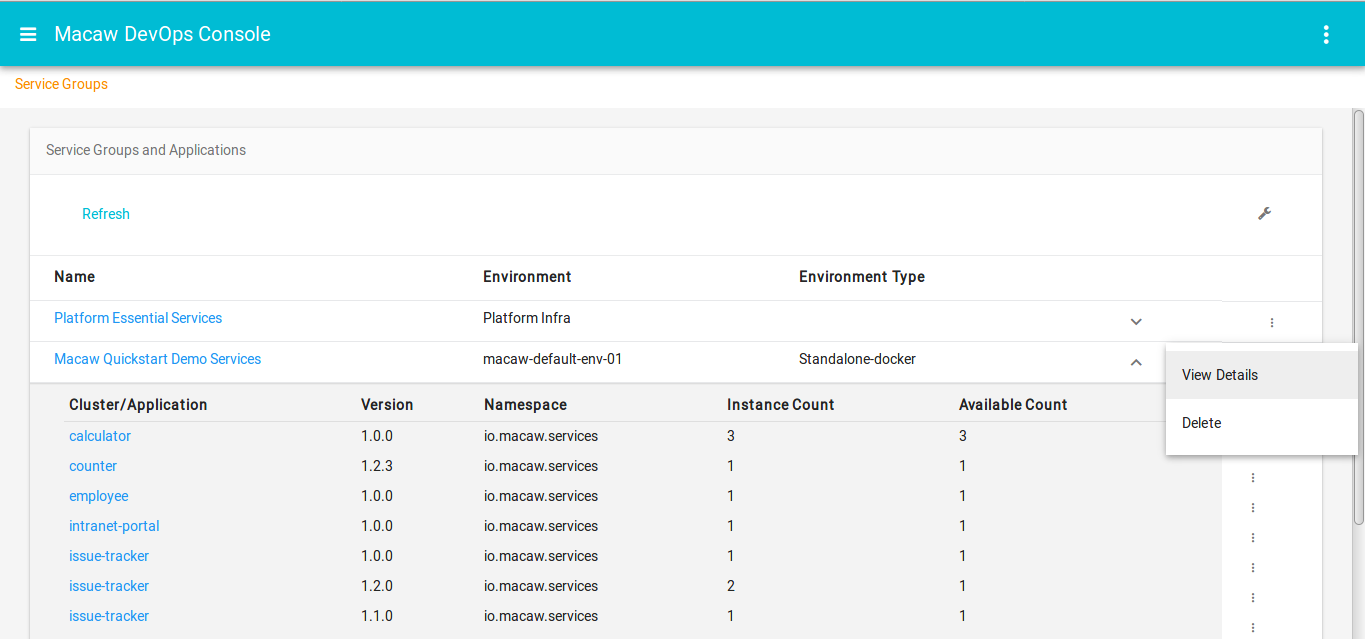

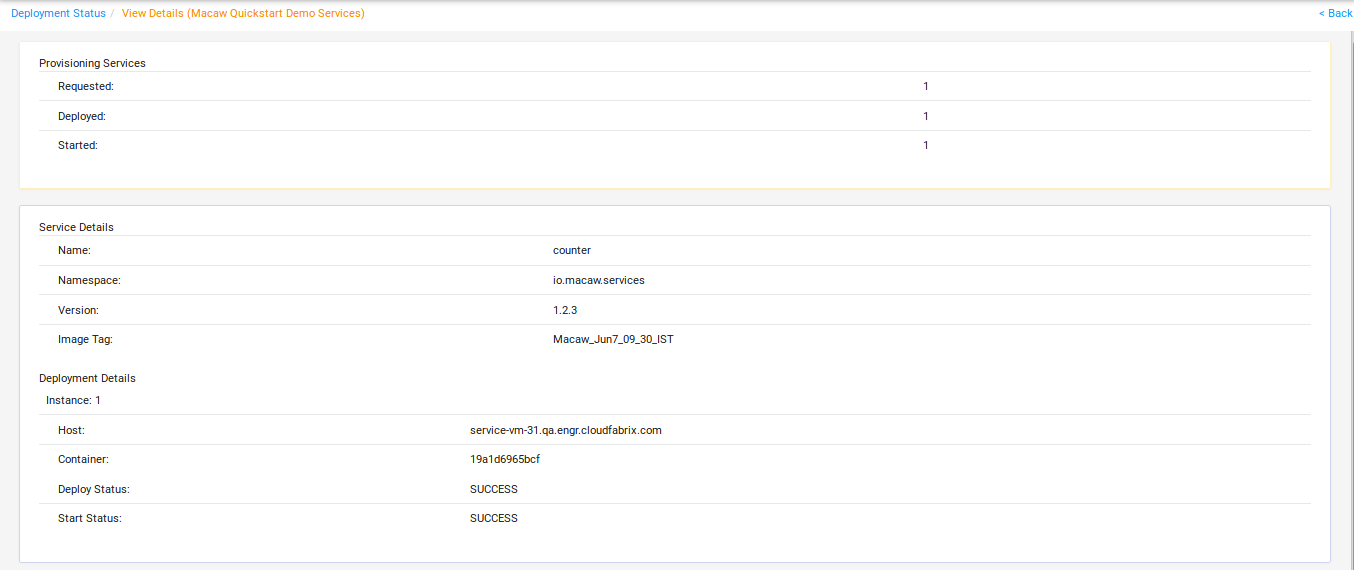

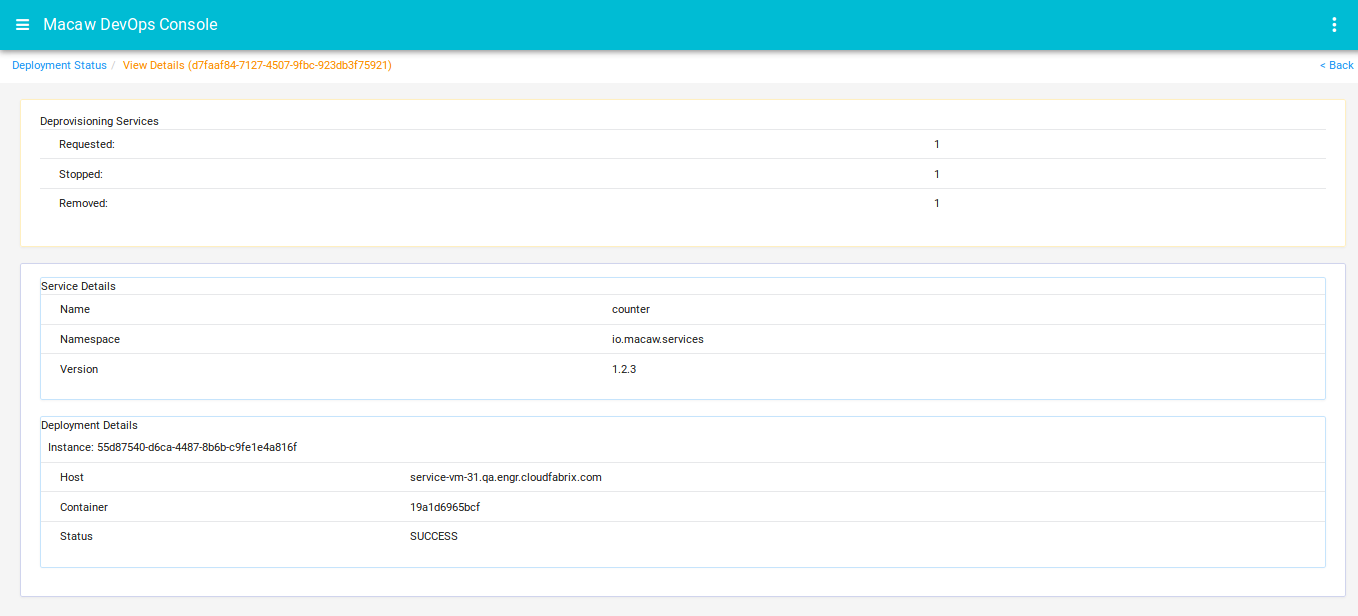

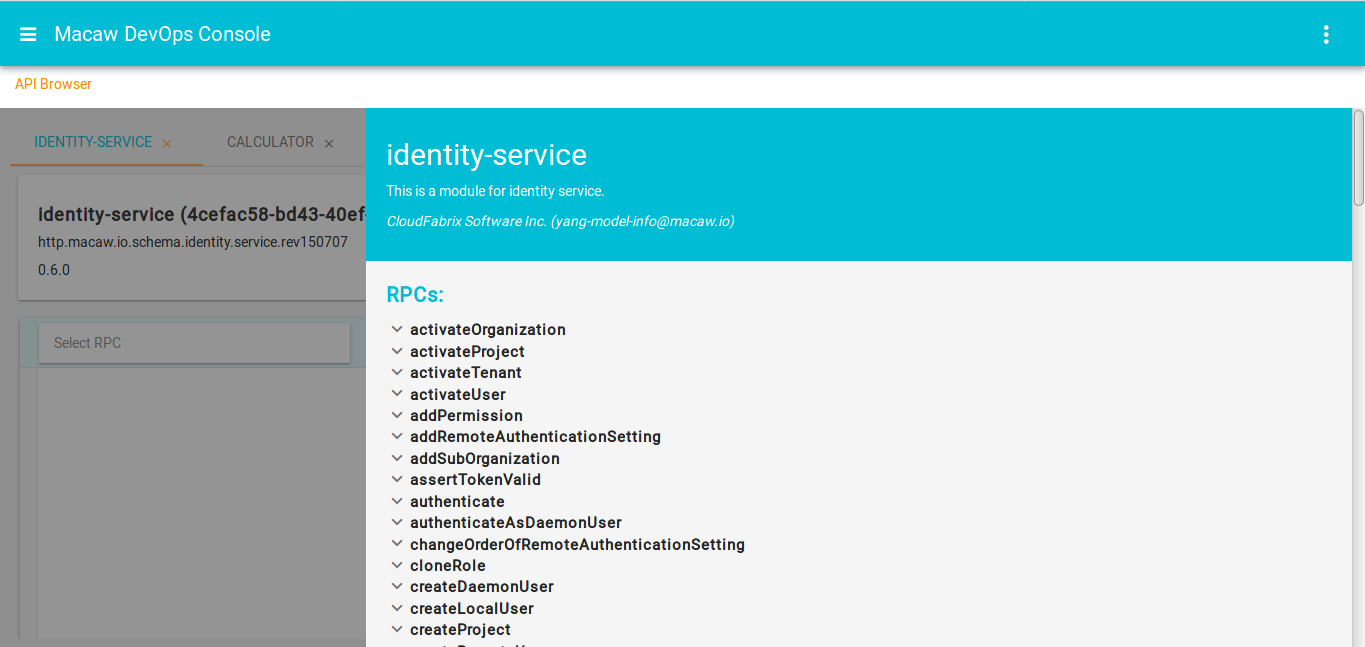

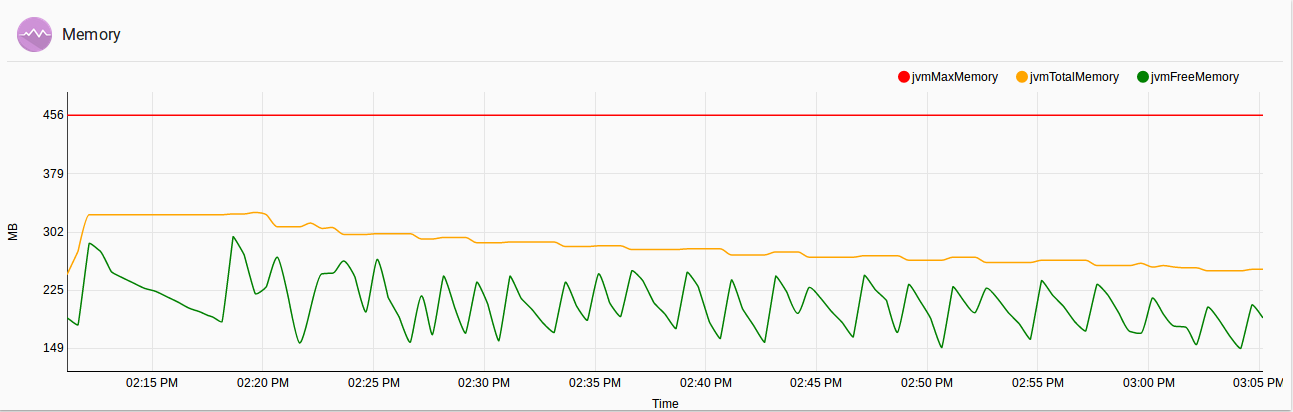

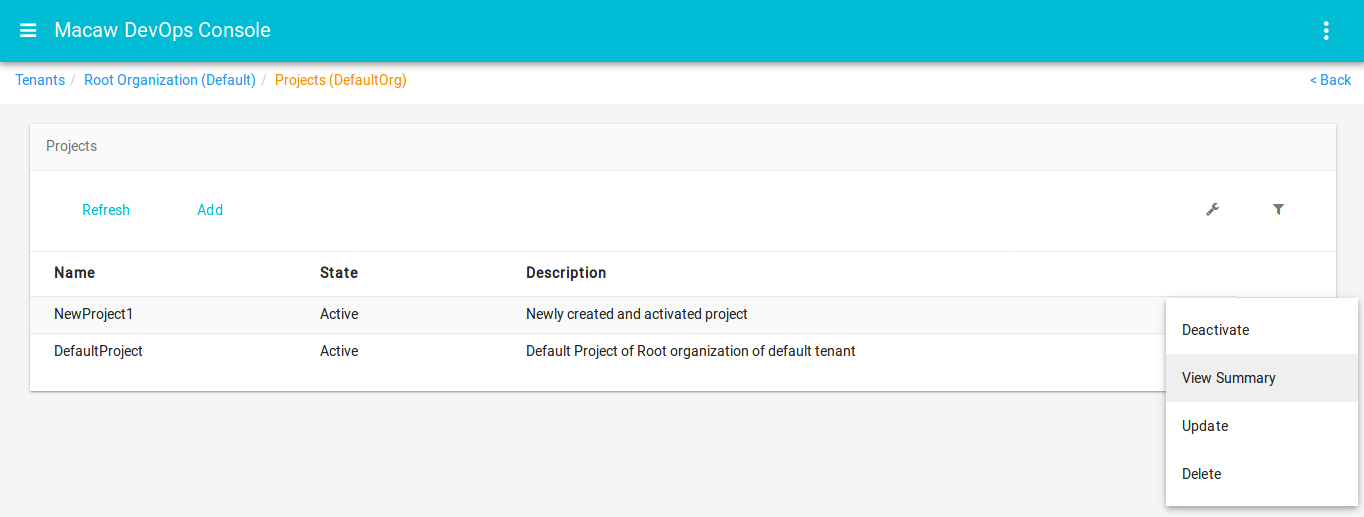

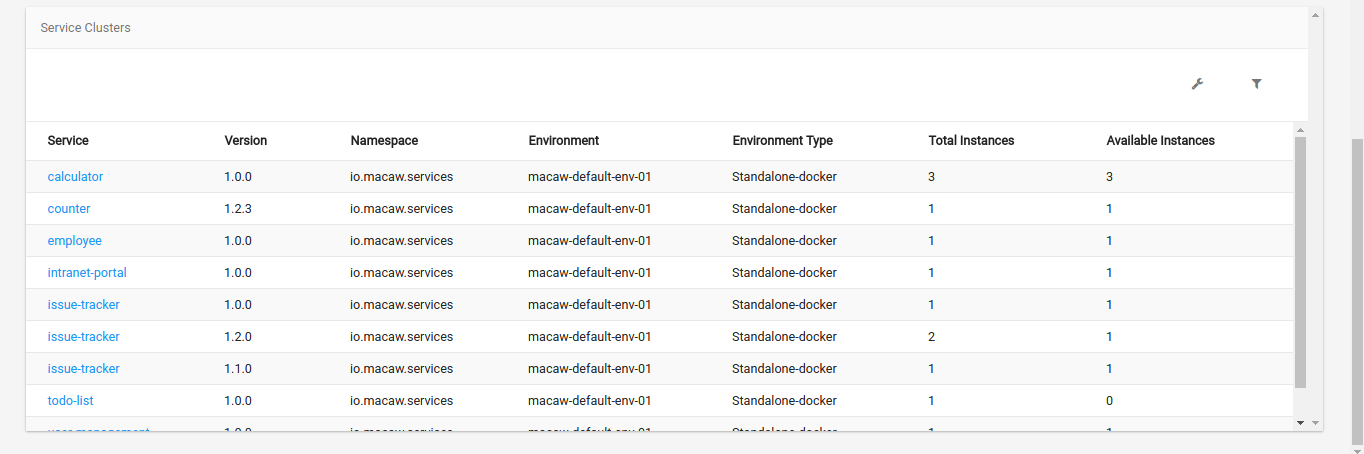

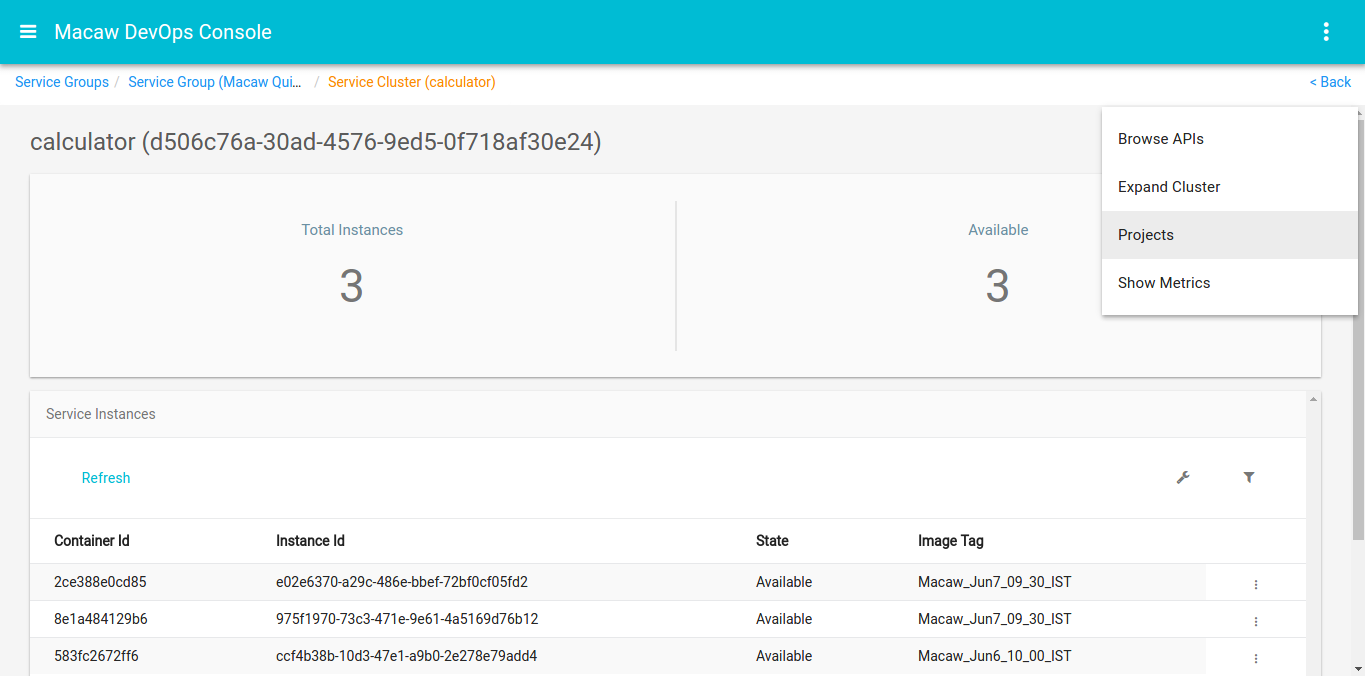

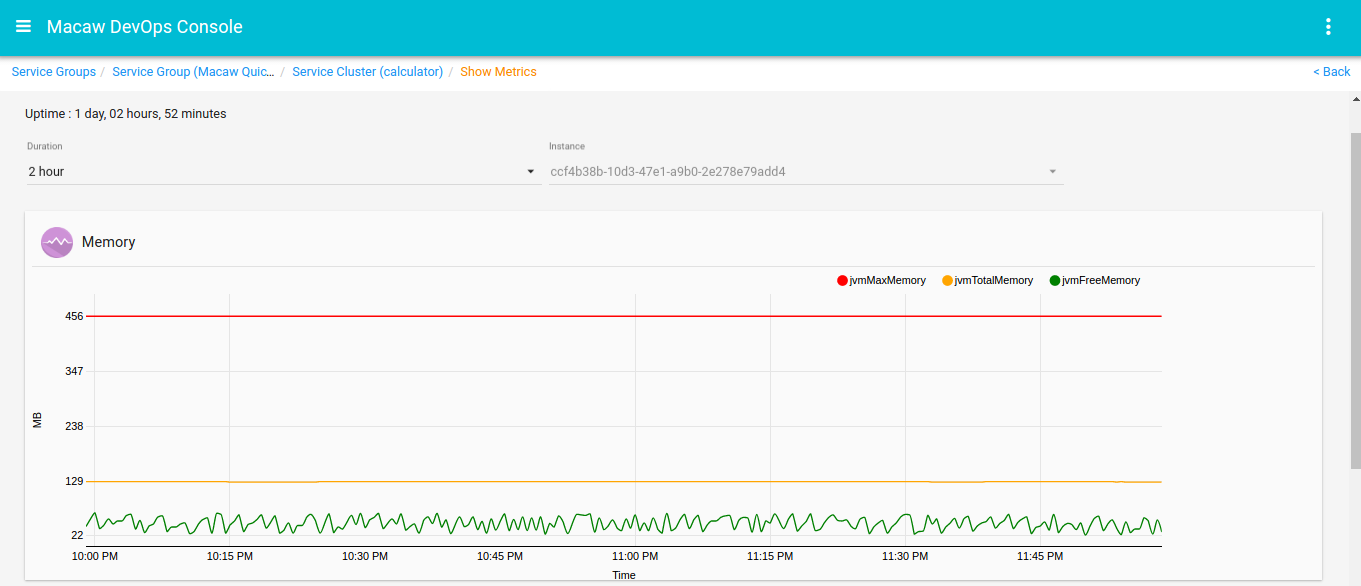

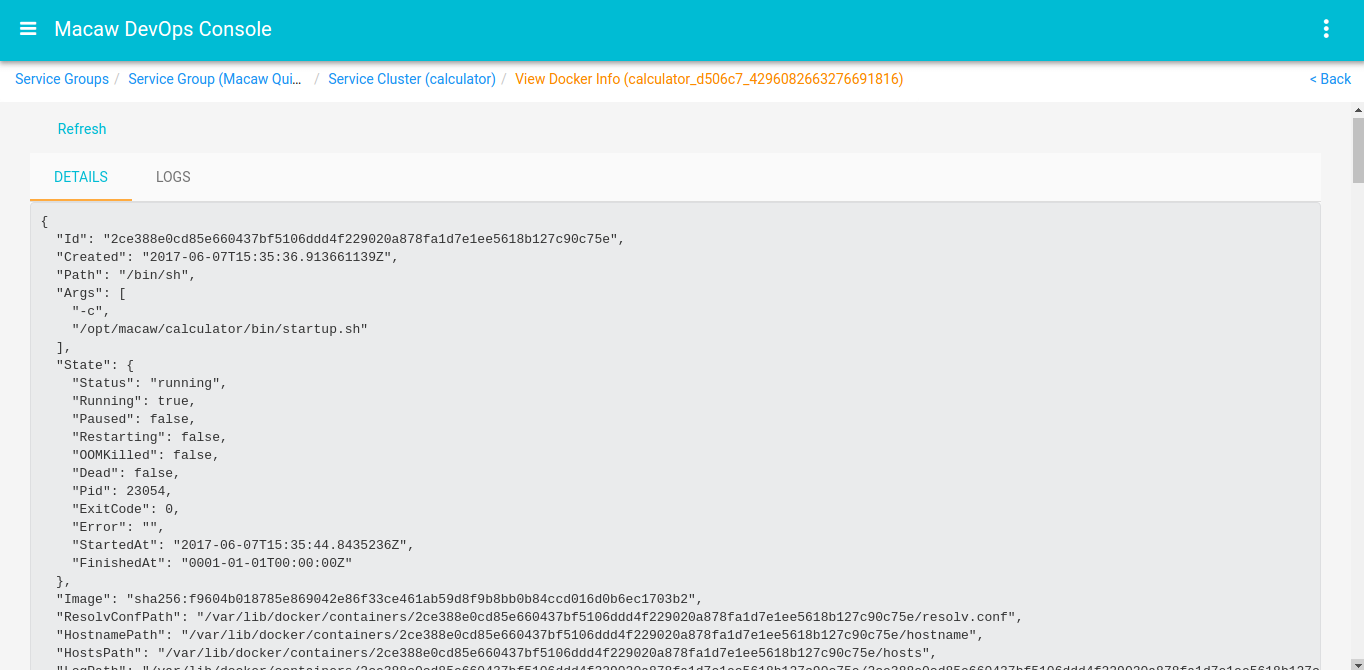

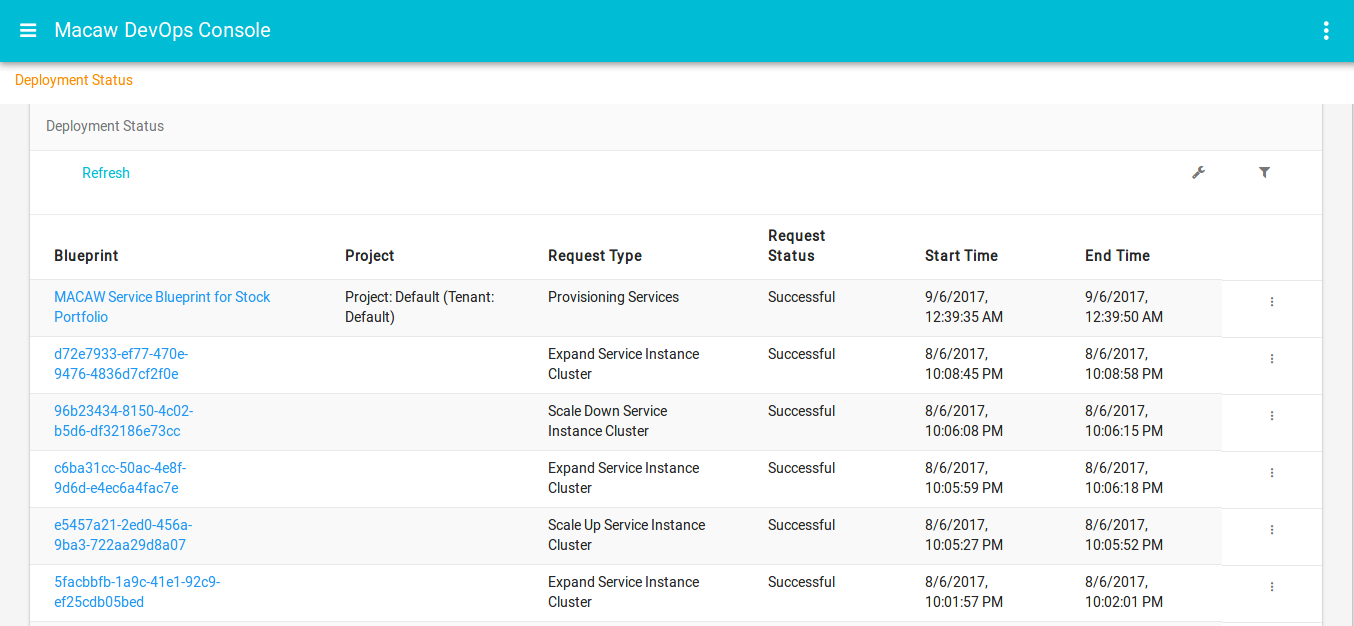

- DevOps Console: Microservices-based applications are dynamic in nature and Macaw DevOps console can help developers and operations team deploy and monitor their runtime behavior for easy troubleshooting.

- Application Modernization: Traditional applications can be transformed without any major disruption to embrace the principle of cloud-native applications. Macaw allows gradual migration to Microservices by leveraging the middleware support or shadow services to maintain integration with the legacy systems.

- Curated stack of open source technologies: The platform is built using the best of breed open source technologies including Kafka, Zookeeper, Spark, Cassandra, Docker, Swarm, Elastic Search among others. These technologies provide the scalability, multi-tenancy, and robustness enterprises demand. Further enhancement with automation and governance features simplify usage for DevOps functions.

Key Components

Service Infrastructure: Macaw’s set of core capabilities that provide a solid foundation of both services and platform.

- Message Bus: Distributed Kafka & Zookeeper over SSL running on multiple container nodes, used dually for internal and external messaging between microservices.

- Logging & Search: Logging as a service provides centralized logging capabilities leveraging Elasticsearch.

- DBaaS: Utilize Cassandra and MySQL database as services. Macaw covers the DB lifecycle, including init data, schema loading, loading DB drivers, and automatically mapping and building dependencies for service/container images.

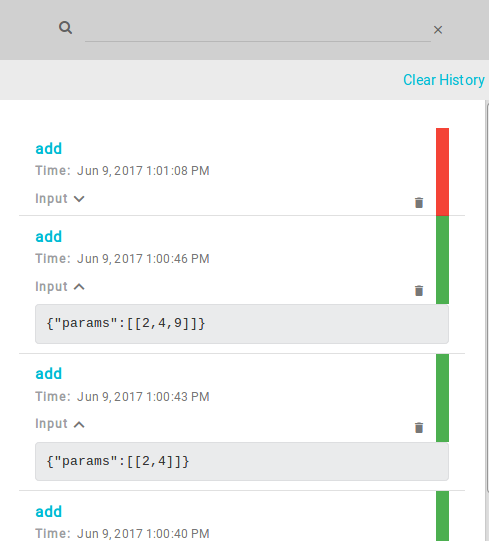

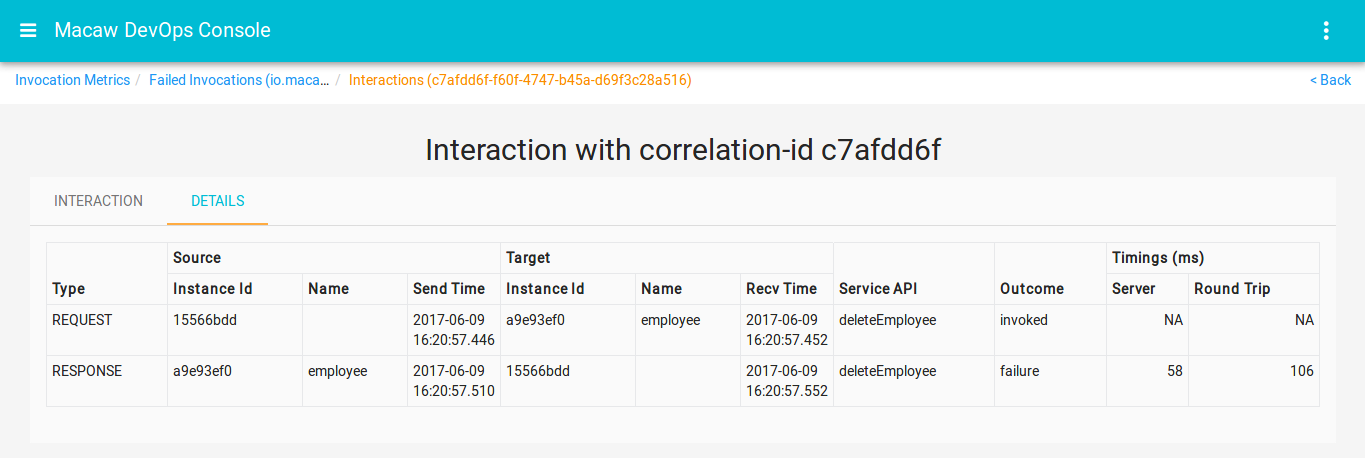

- Message Correlator: All user-generated API request chains are fully traceable and correlated through message ID and can be viewed in the DevOps console events widget. Serves effectively for troubleshooting plus acts as an analytics tool to build social service graphs.

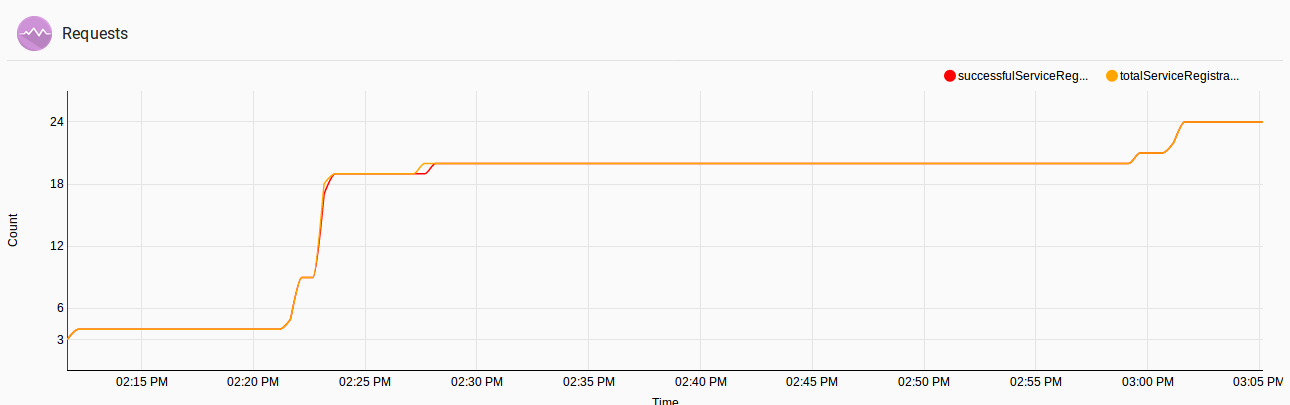

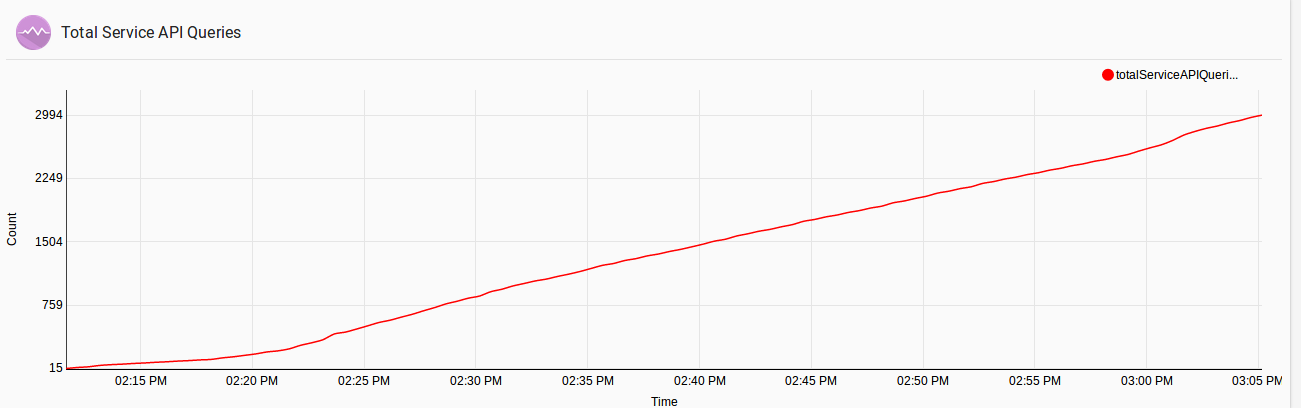

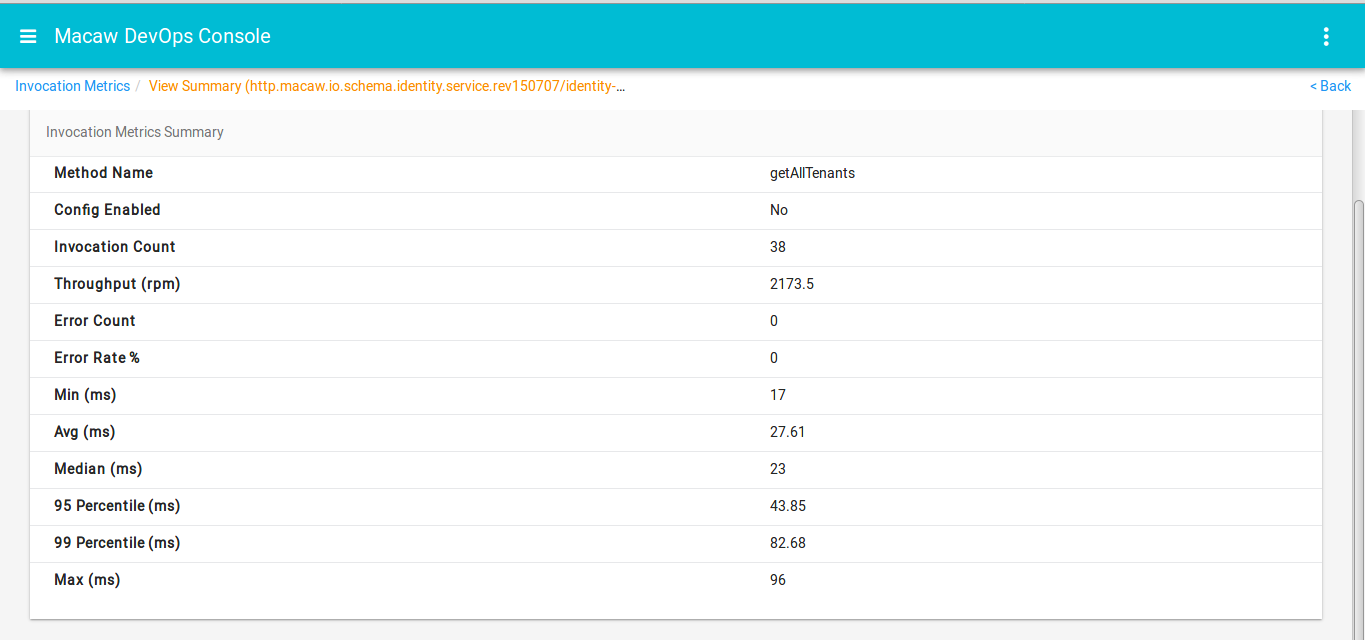

- Analytics: Yields essential intelligence about service operational behavior like service performance, life cycle events, usage stats, etc. through native analytics as well as pluggable external analytics providers like Apache Spark and more.

Macaw Runtime: Macaw platform core runtime is polyglot with support for multiple languages including Java, Javascript, Python etc.

Core Services: Macaw platform capabilities (i.e. service discovery, registry, provisioner, etc.) that are integral in a Microservices-based architecture.

- Service Registry: All Macaw microservices register with a centralized Service Registry regarding service listing, service lookups, and interactions among services or with external clients.

- Service Provisioner: Provisions any service on a Macaw node based on native placement and a load balancing algorithm. Configurable also to provision service on external container clusters through resource schedulers like Kubernetes or Docker Swarm

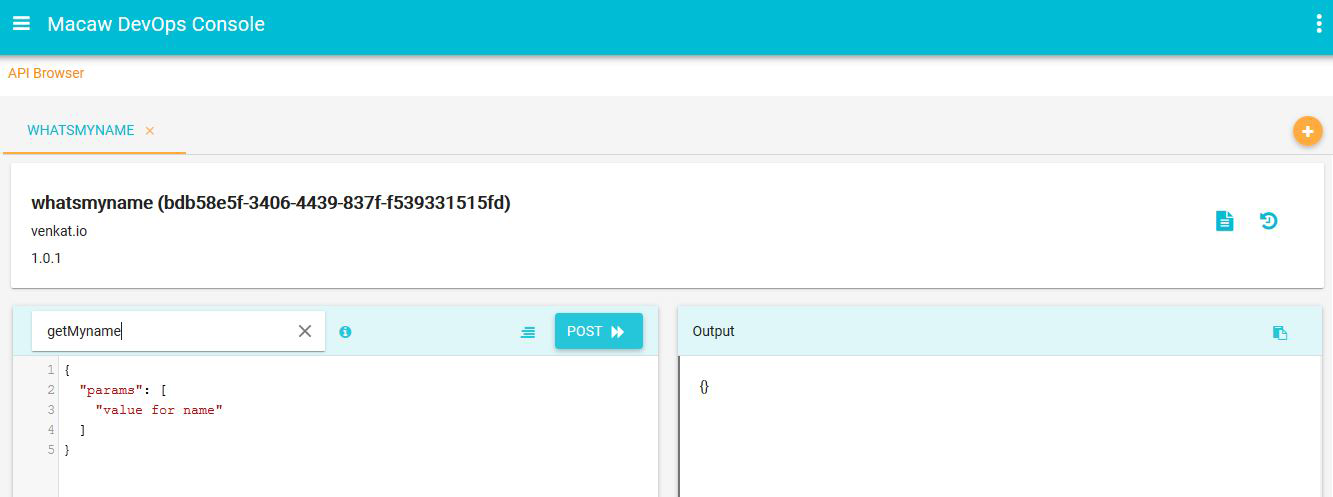

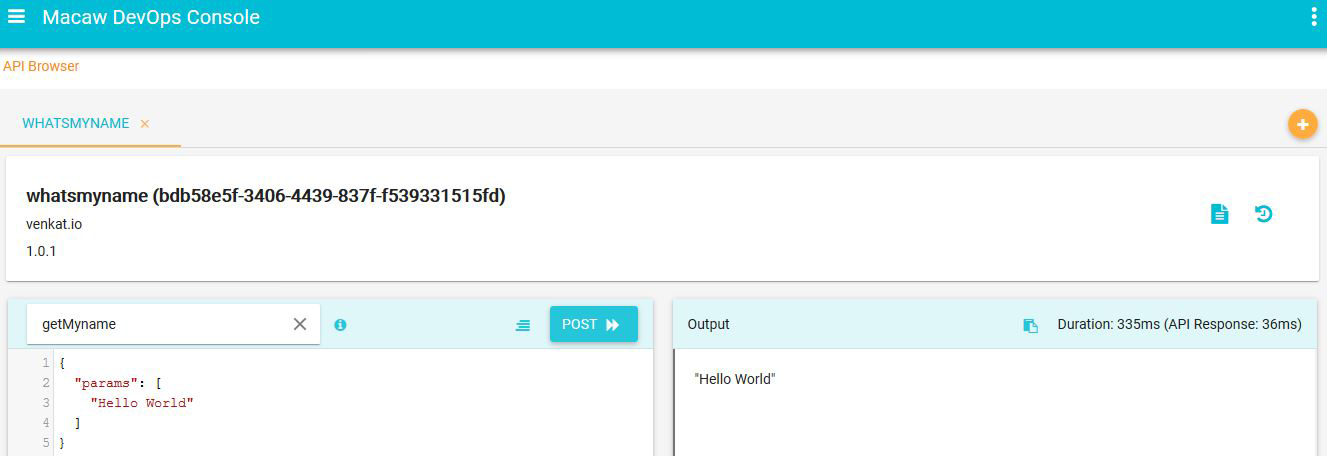

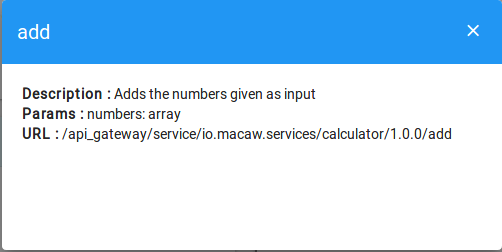

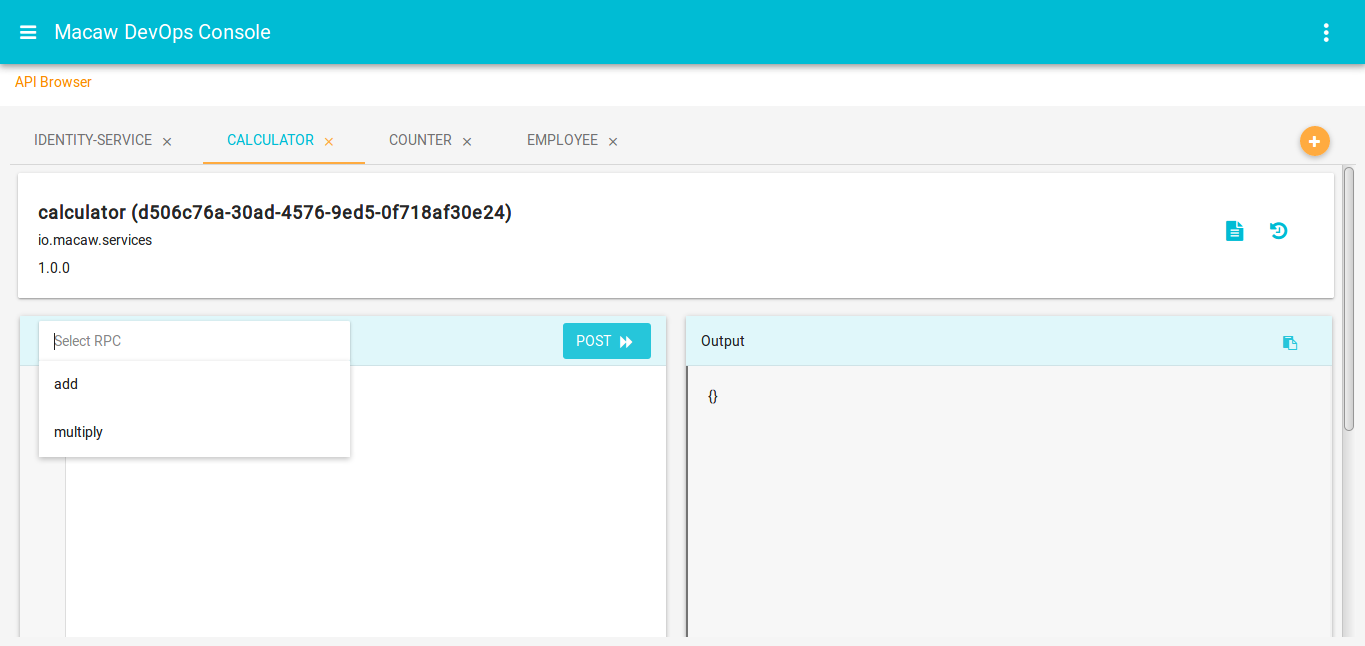

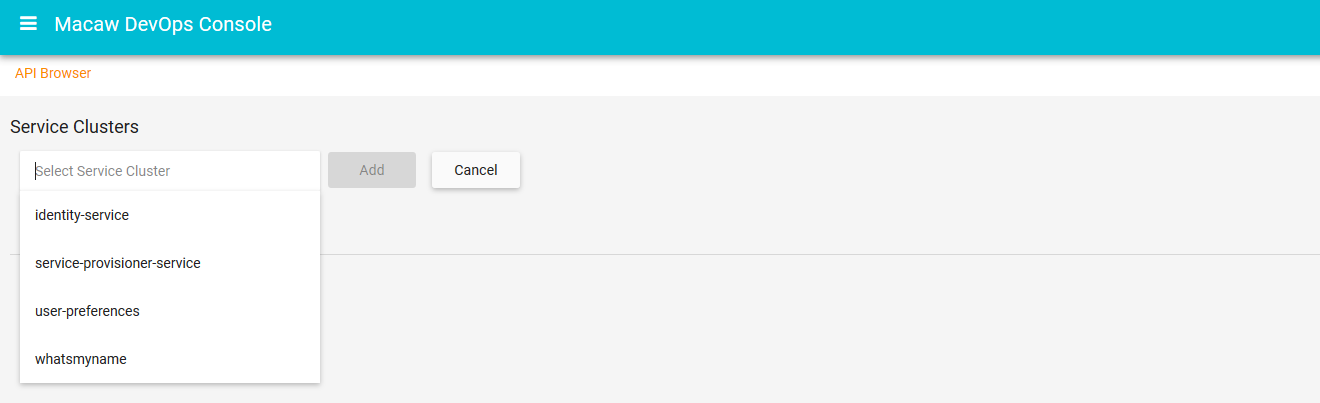

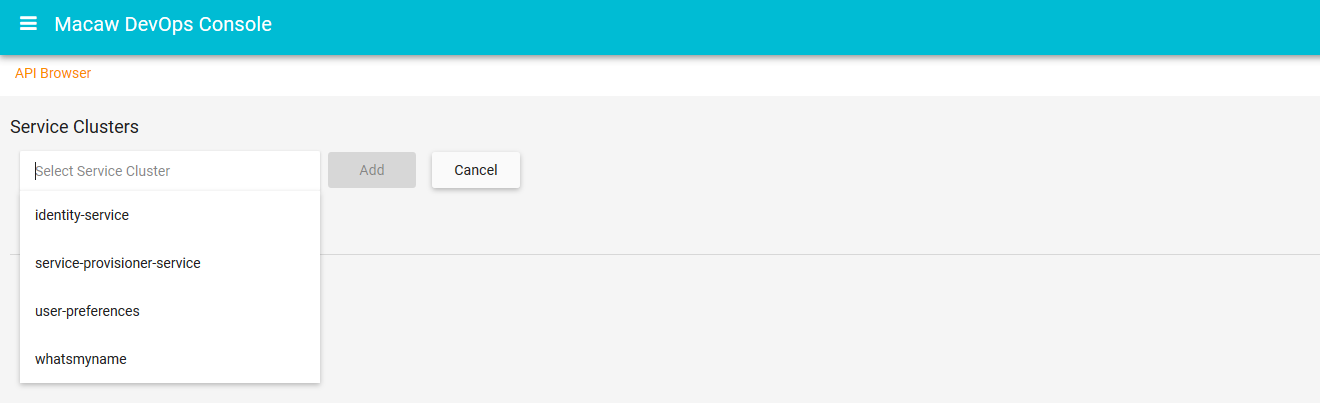

- API Gateway: Aggregates all Macaw microservices APIs as RESTful API endpoints and provides API browser, invocation capabilities through the DevOps console.

- Blueprints: Collection of related Macaw microservices along with their operational, placement, and deployment policies together constitute Macaw blueprint.

Advanced Services: Premium services consisting of additional applications that run on Macaw platform.

- Locker: A secure way to store and access credentials using multi-level encryptions and advanced security principles similar to that of popular cloud providers.

- Identity: Identity services to other Macaw microservices equipped with knowledge of all users, orgs, tenants.

- Service Governance: Service migration-based on load or behavior, service access governance-based on policies, approval injections at platform level are some of the capabilities provided by Service Governance

- Notification Manager: Responsible for sending notifications to interested clients about service lifecycle events.

Multi-Tenancy: Supports multiple tenants to operate on a single instance of Macaw platform through support for tenants, projects, users and roles.

Service Operations: Macaw catalog services that integrate with external applications to enhance the operational behavior of macaw applicationss.

- APM: Allows Macaw microservices to be monitored through existing APM tools by serving Macaw service data to target APM tools.

- Ticketing: Allows Macaw microservices to create/update/delete tickets in a target ticketing system-based on certain service lifecycle events.

- Shadow Services: Integration with legacy systems and external applications to bring them into Macaw’s operational environment to streamline the application modernization process.

Images & Repositories: Macaw microservices are packaged as Docker containers. MDR (Meta Data Repository) holds the service blueprints, Metadata information with available Docker tags for a specific service. Docker registry holds service container images.

CI/CD Plugins: Integration with CI/CD tools for accelerated release and feature velocity.

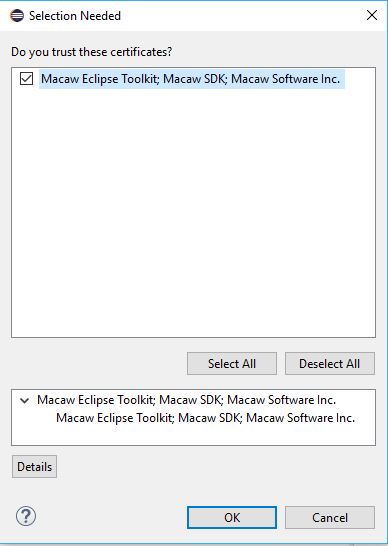

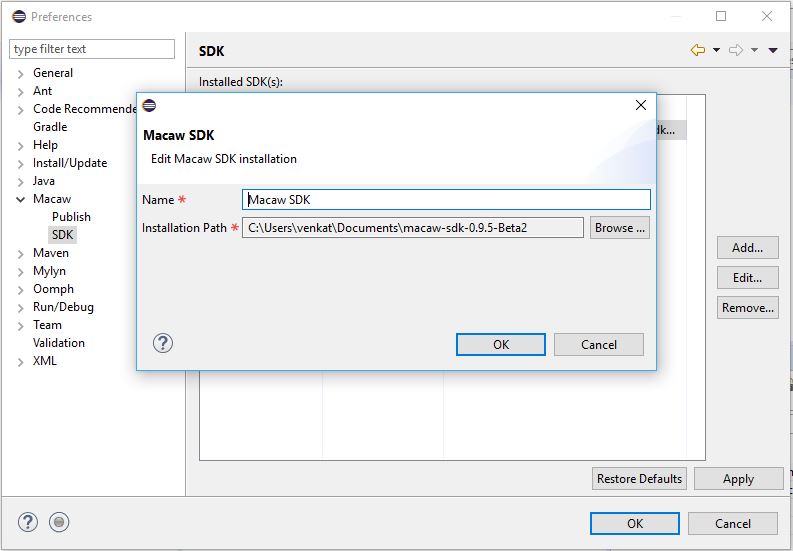

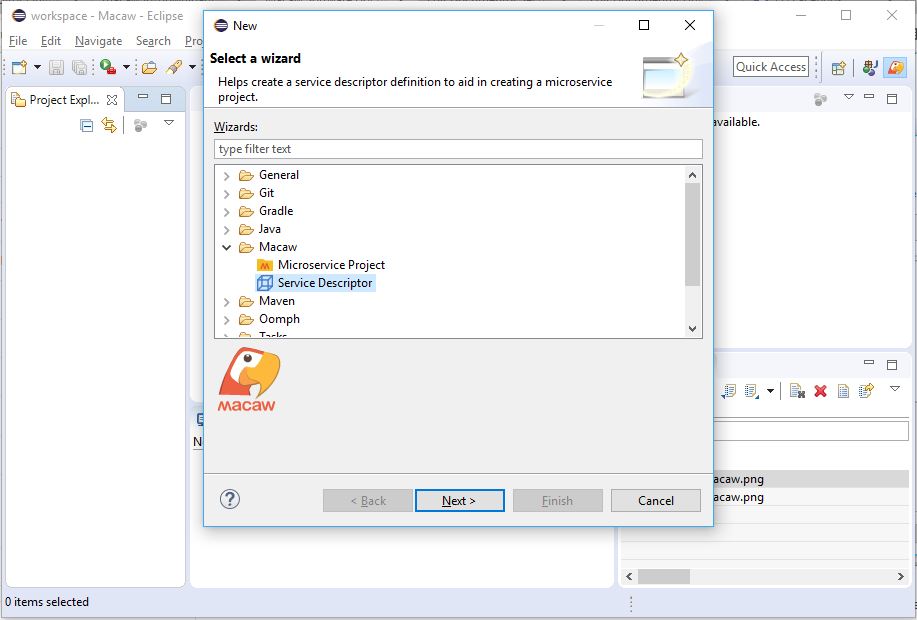

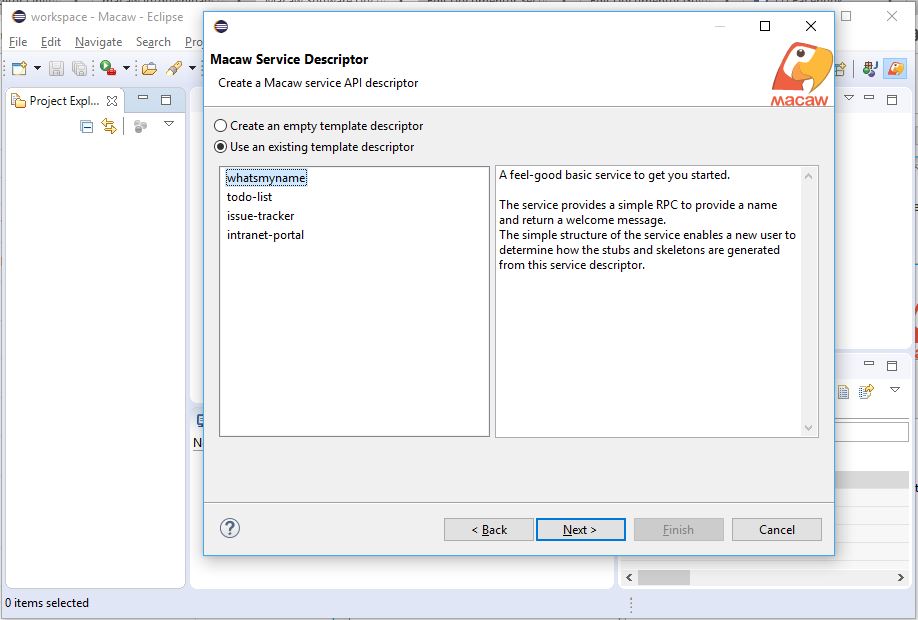

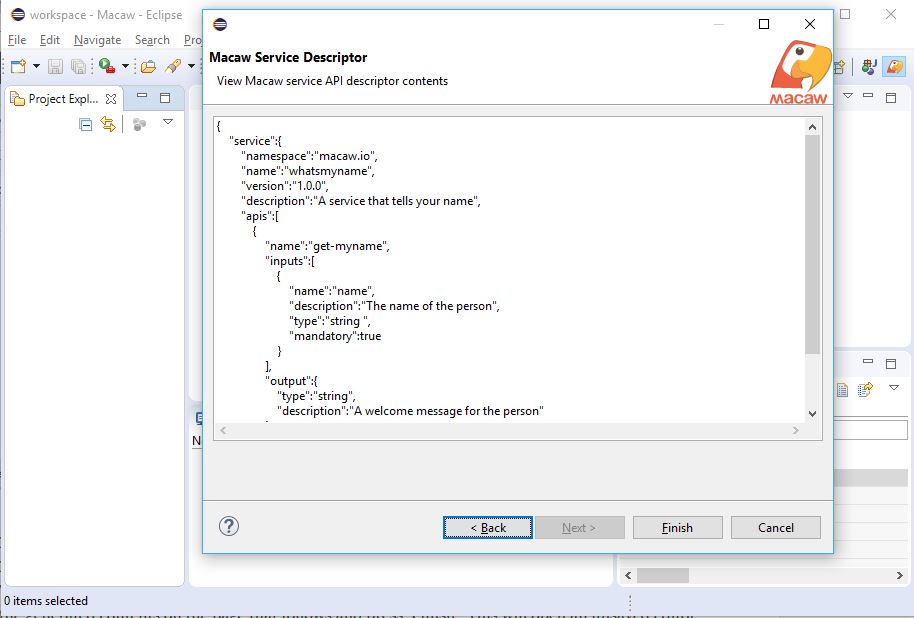

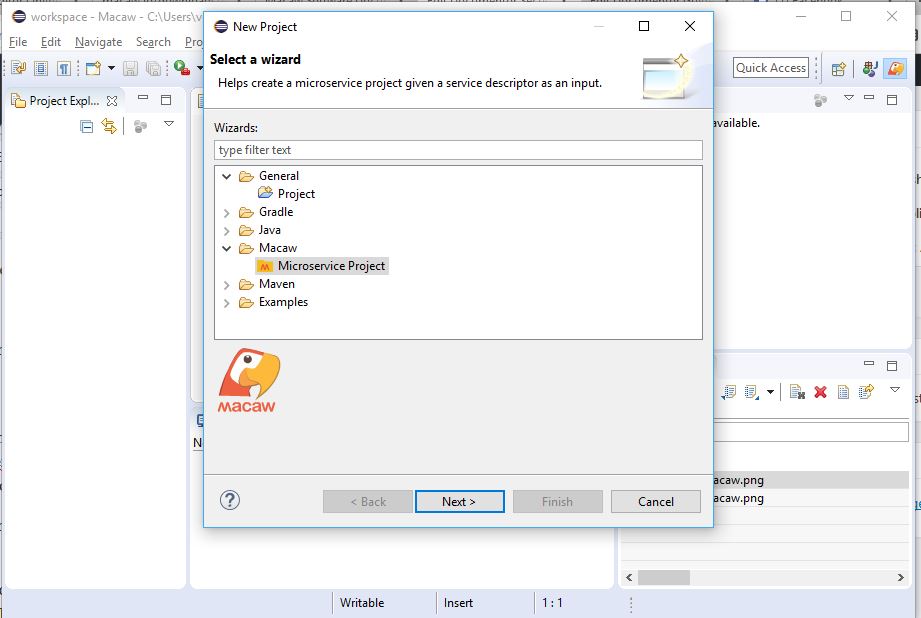

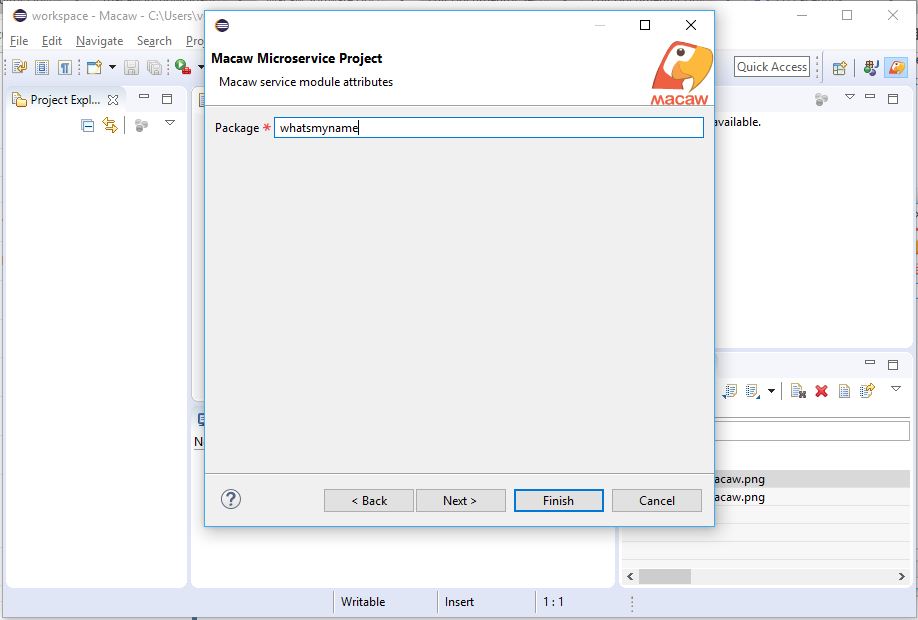

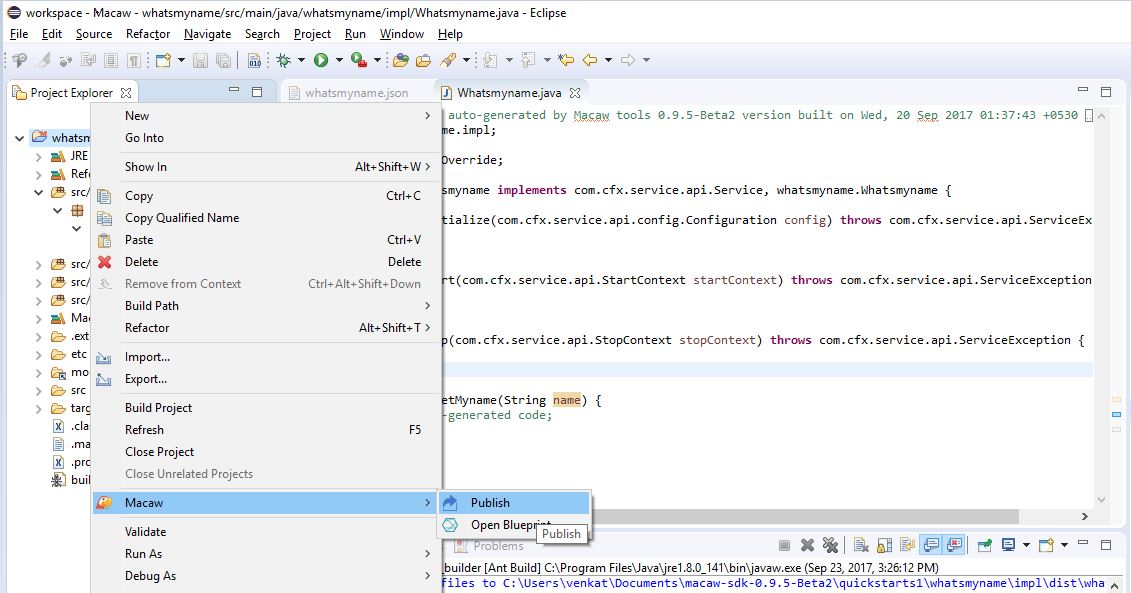

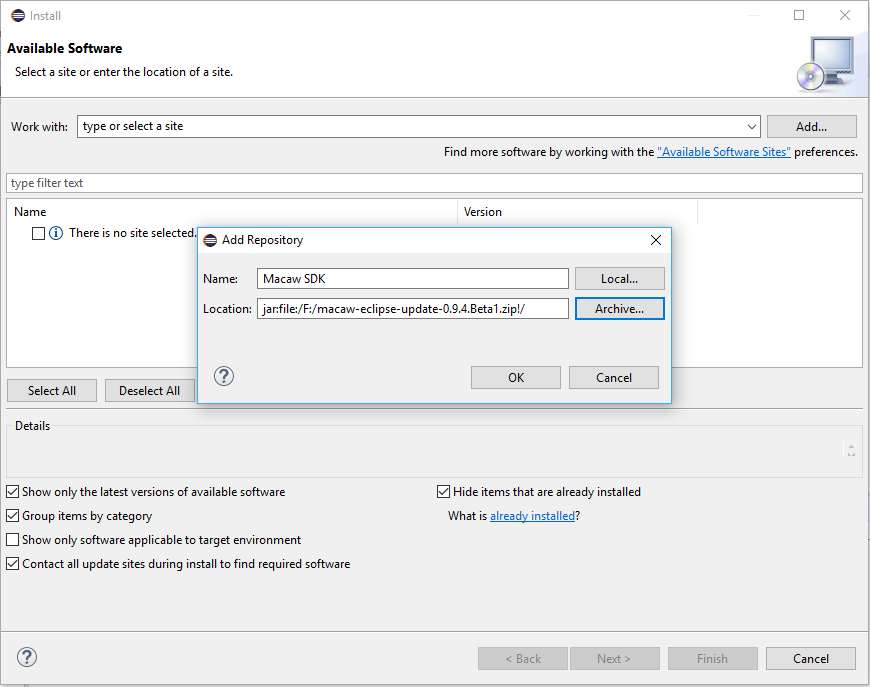

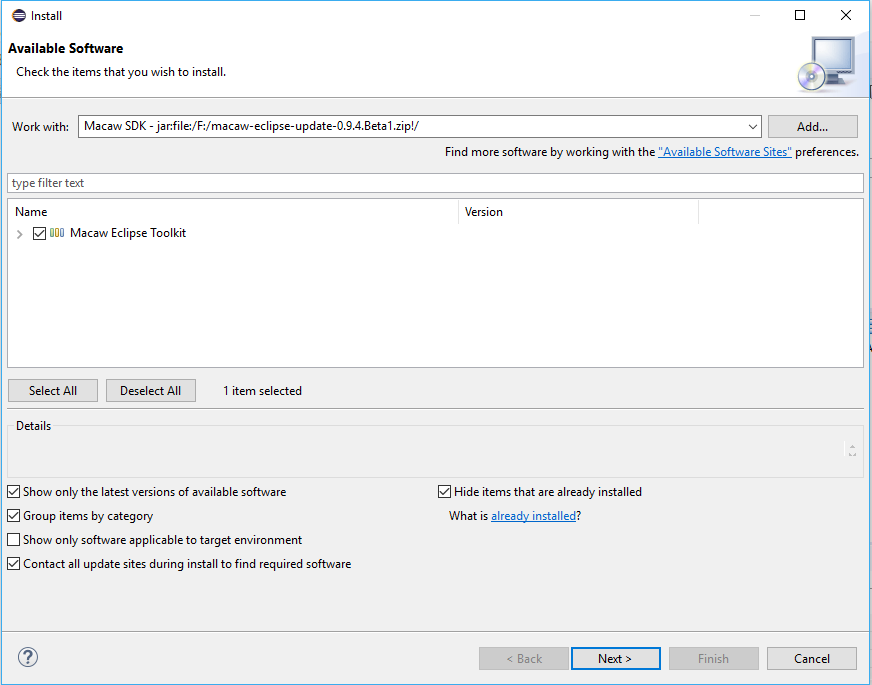

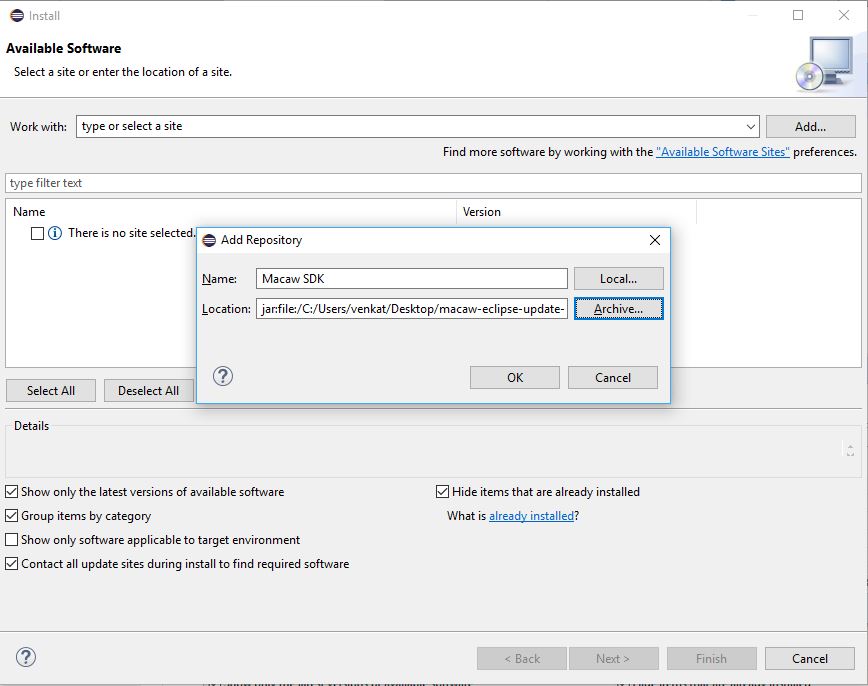

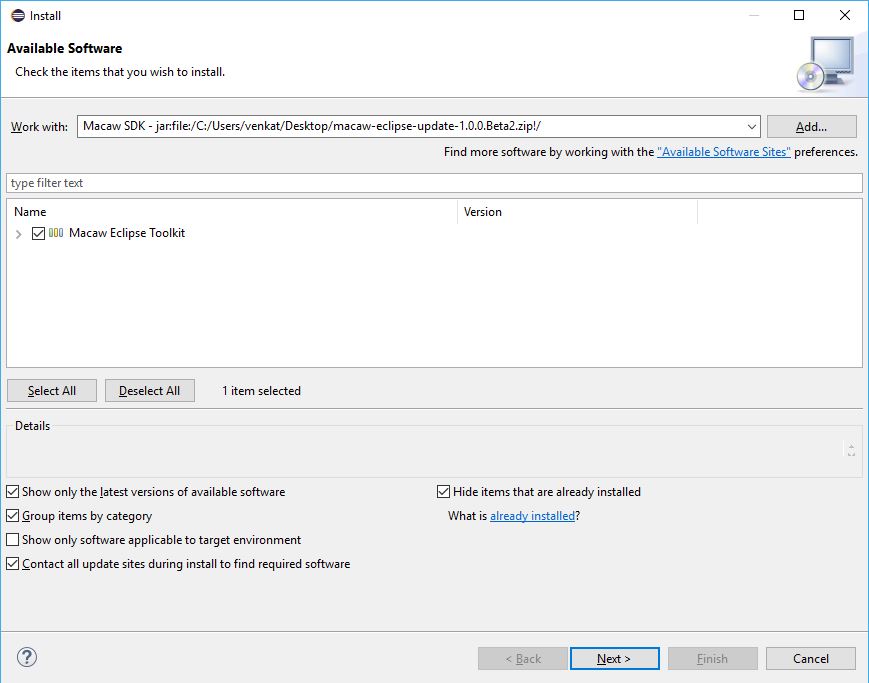

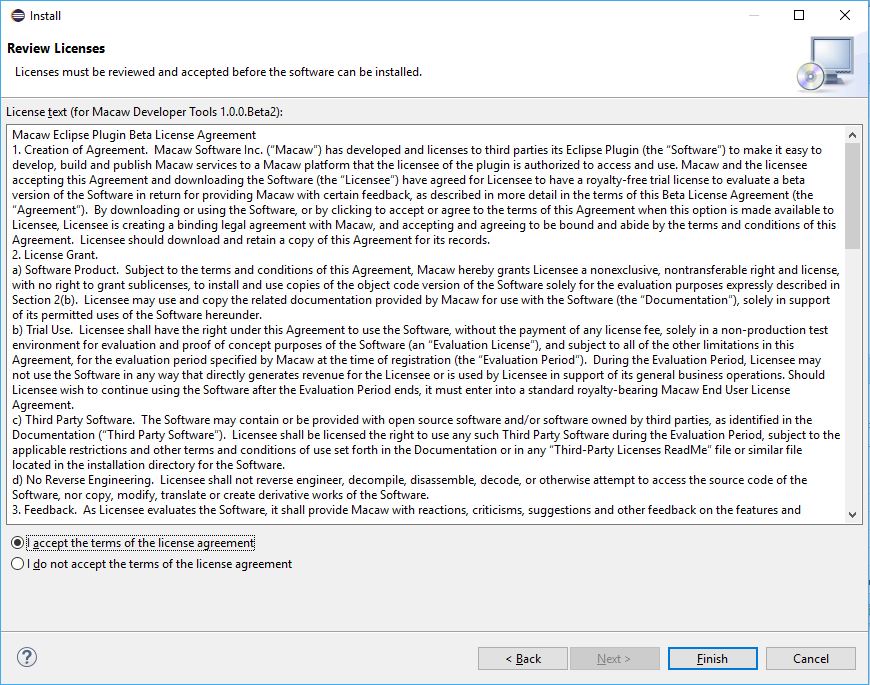

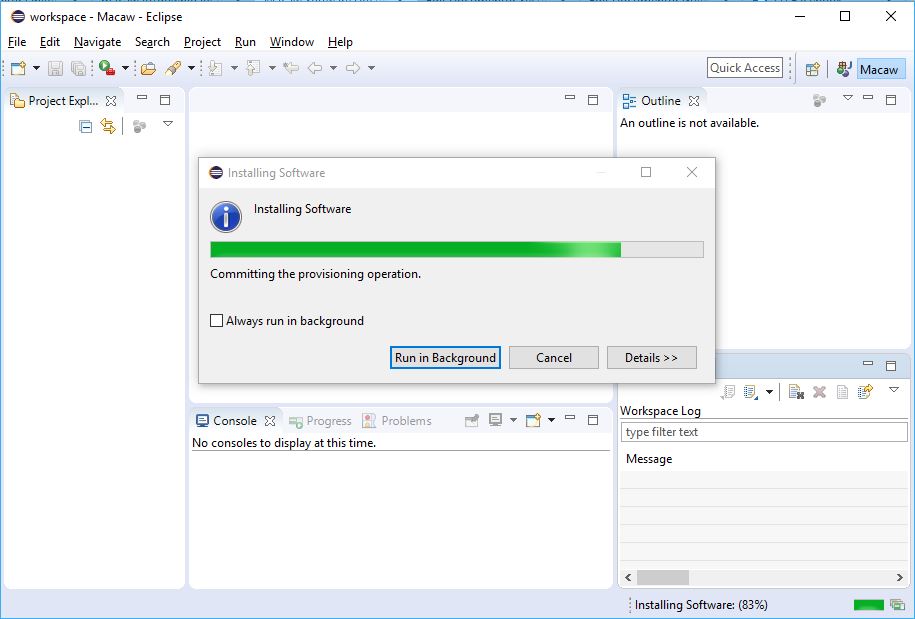

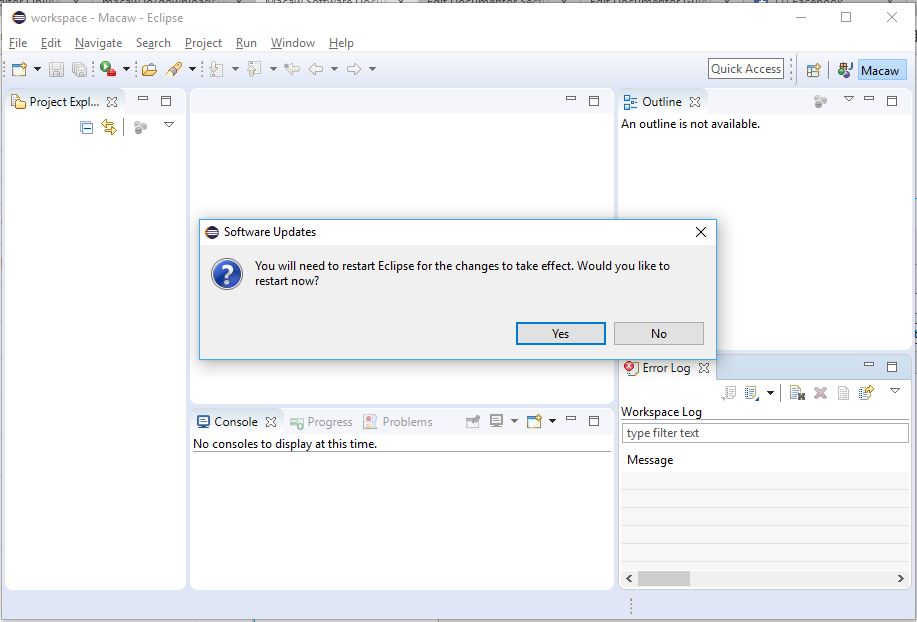

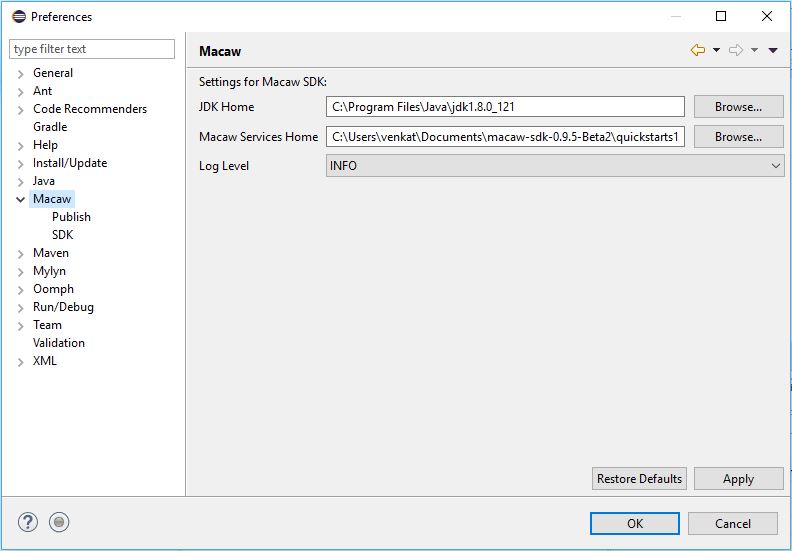

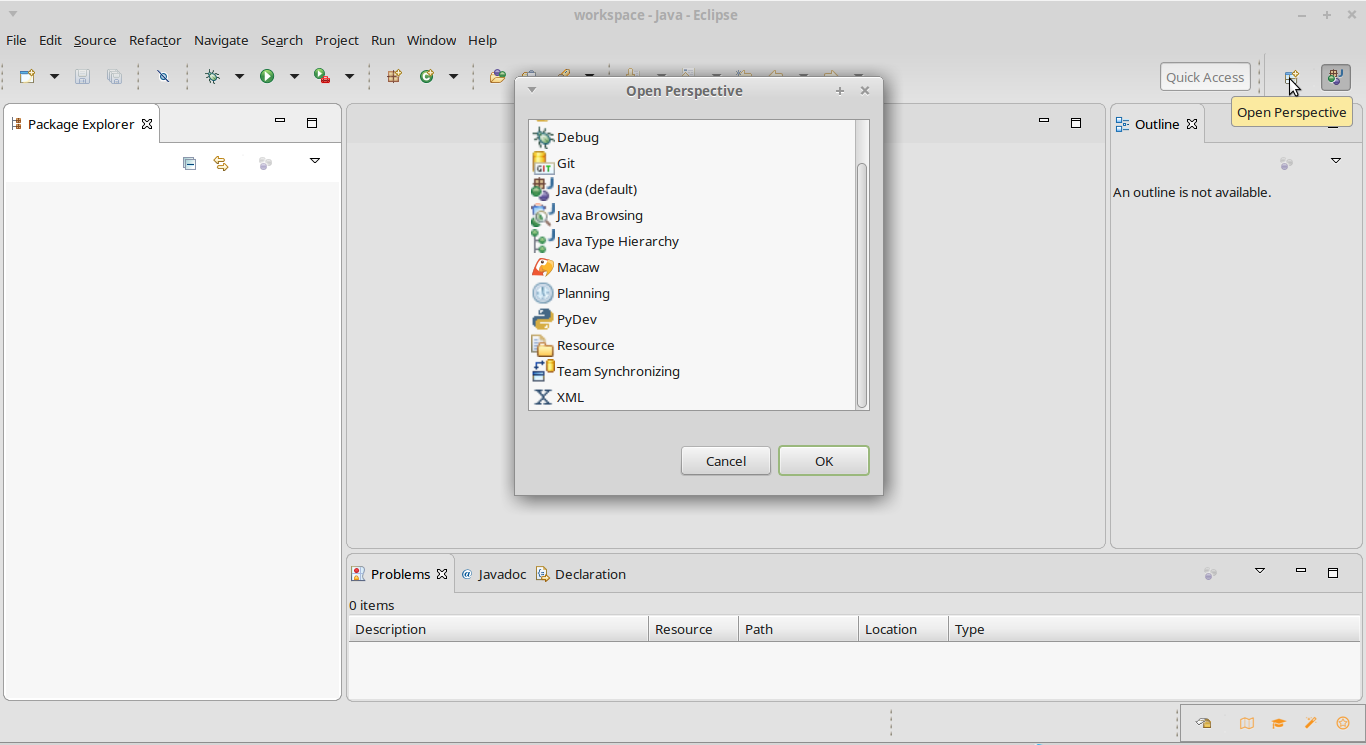

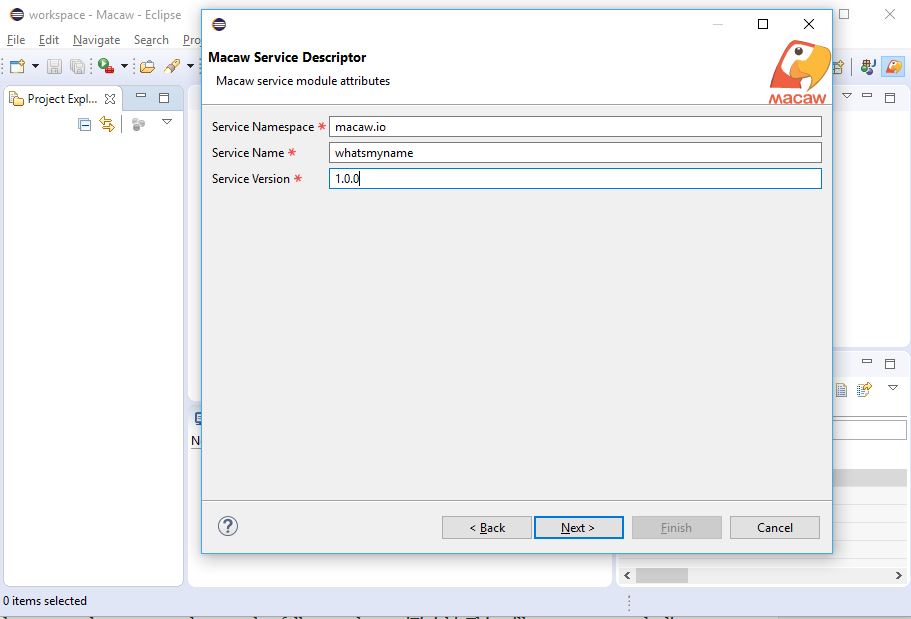

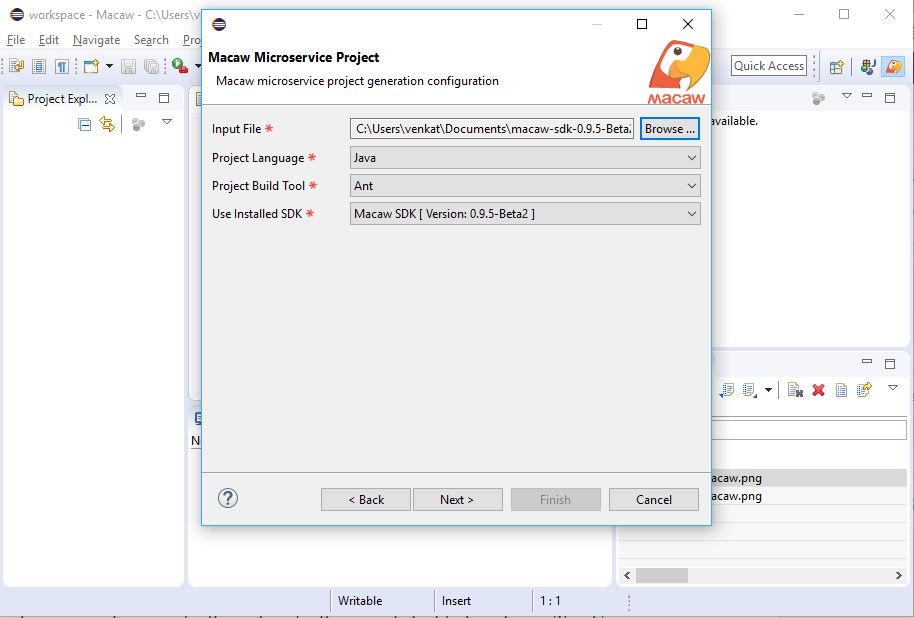

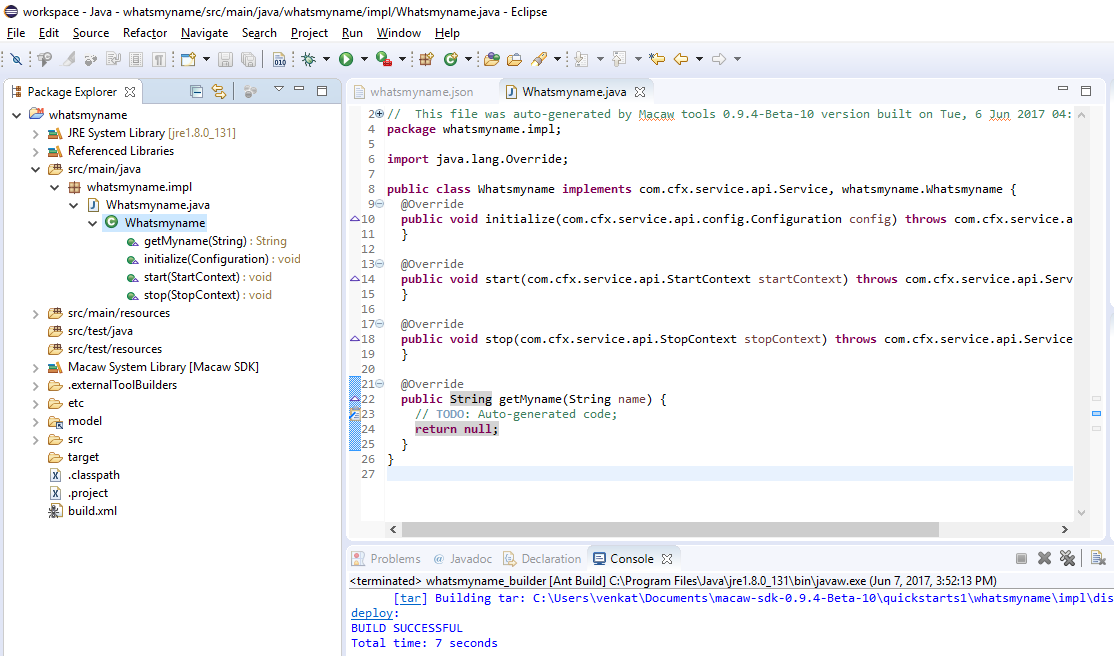

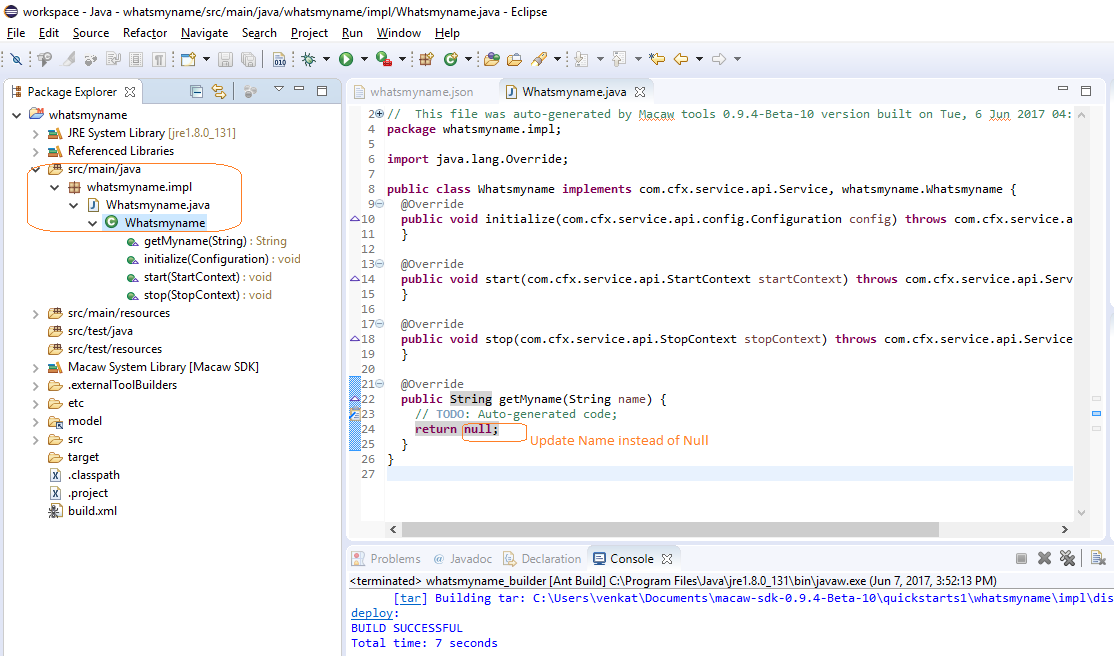

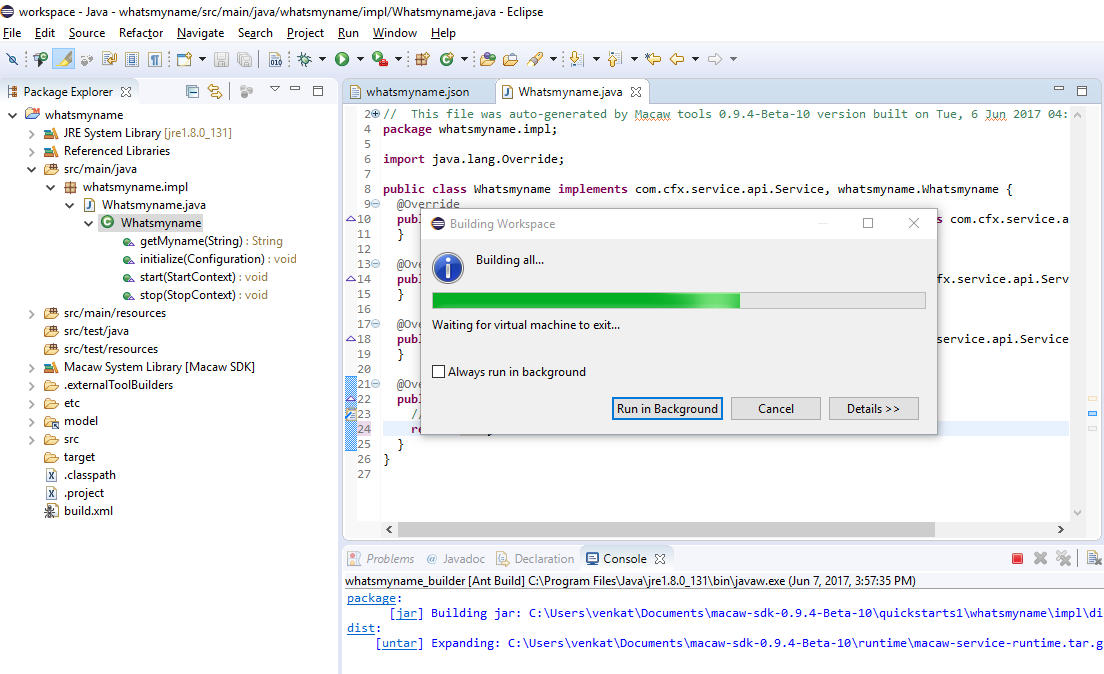

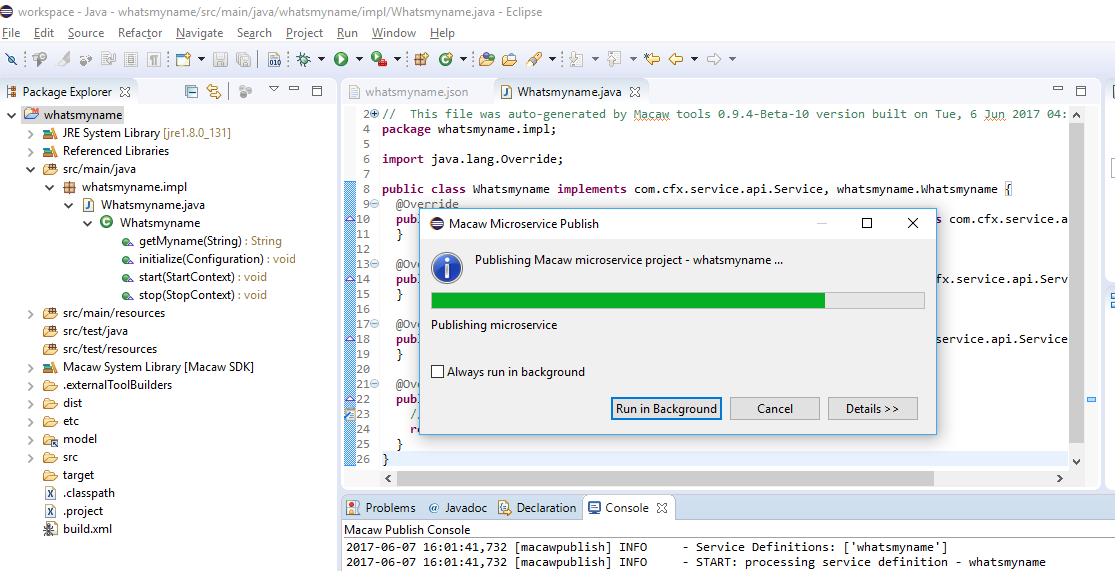

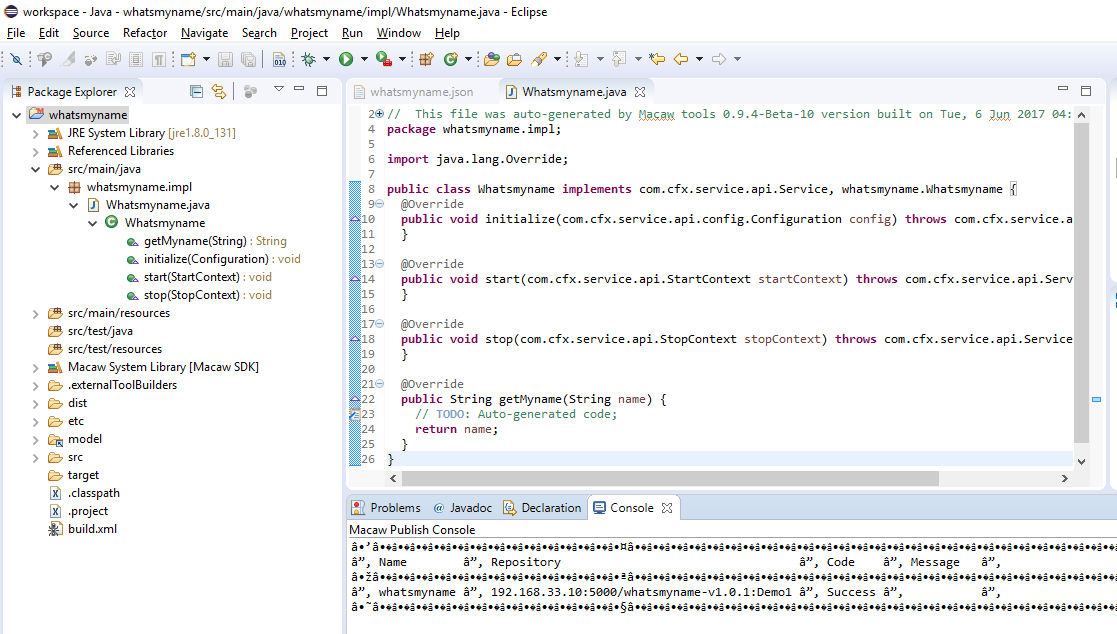

IDE Plugins: Improves developer productivity by providing plug-ins and project configuration files for popular IDEs like Eclipse.

Developer Toolset: A set of essential tools to accelerate service development and increase developer productivity.

- Modeling: Capture API model in YANG or JSON and Swagger documentation.

- Codegen: Generates Java artifacts such as public interfaces and class stubs.

- Build: Build entire Microservices and automatically build service and container images.

- Publish: CLI utilities to easily publish a Microservice to repository using an image tag.

Access and Administrative Interfaces: Macaw can be accessed and administered in multiple ways.

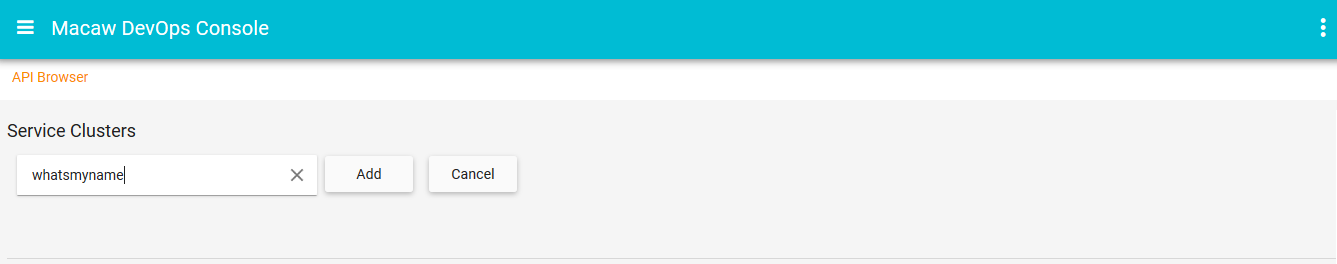

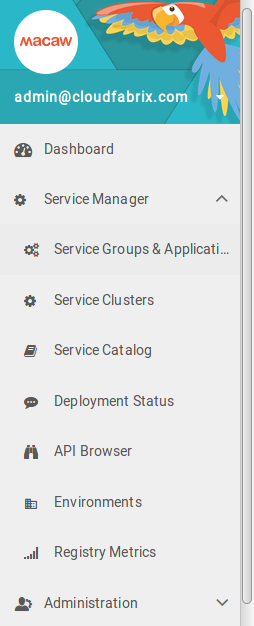

- DevOps Console: A slick web-based HTML 5 interface for DevOps professionals to operate Macaw microservices and the platform.

- Macaw CLI: CLI-based interface for platform and service operations.

- API Gateway: API-based service interactions and administrations suitable for automation and integrations with external tools.

Infrastructure: Infrastructure integration, management and orchestration layers allow Macaw to operate on various IaaS/Cloud platforms including BareMetal, VM, Cloud, or a developer laptop.

Detailed Installation

Terminology and Definitions

Before proceeding with installation, refer to the details listed below to directly understand Macaw’s terminologies and supported installation configurations.

Terminologies

In the installation documentation, the following terminologies are used. Comprehension of the terminologies, what they mean, and what role they play in the overall Macaw platform is crucial to the installation process.

| Type/VM | Purpose | Processes/Services |

| Macaw Infrastructure Services

Platform VM |

Macaw’s infrastructure layer provides the DB access, indexing/search capabilities, and service communication infrastructure. These are mandatory core infra services. | Zookeeper Kafka MYSQL Cassandra Elasticsearch Redis Tomcat |

| Macaw Platform Services

Platform VM |

Macaw platform layer includes the core essential foundation services. These platform services are shared across all tenants and provides critical services like Identity Management, Encryption/Decryption services for critical data, Provisioner for deploying microservices, Services Registry etc. These are mandatory core platform services. | Service Registry Notification Manager Identity Service Service Provisioner |

| Macaw ADPM Services (Performance Monitoring)

Platform VM |

Macaw platform provides performance monitoring capabilities for the deployed microservices. To enable the performance monitoring capability, optional performance monitoring services need to be installed. These services are optional and needs to be installed only if performance monitoring features are needed. | macaw-apm-agent macaw-apm-collector |

| MACAW Services

Service VM |

Macaw Services include various microservices and can be deployed on the Service Host. Services Hosts are logically grouped under an environment. You can have multiple environments with different services hosts and during provisioning user can select a specific environment for deployment. | Developed Microservices |

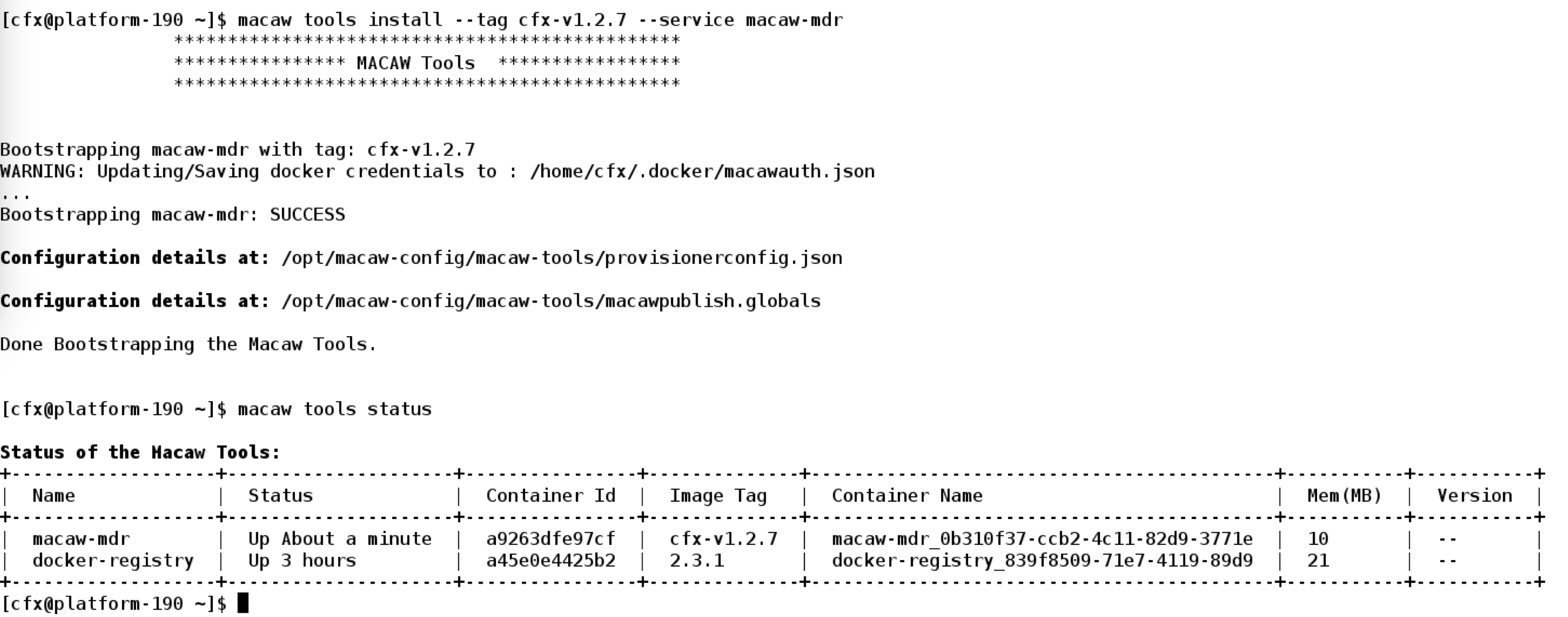

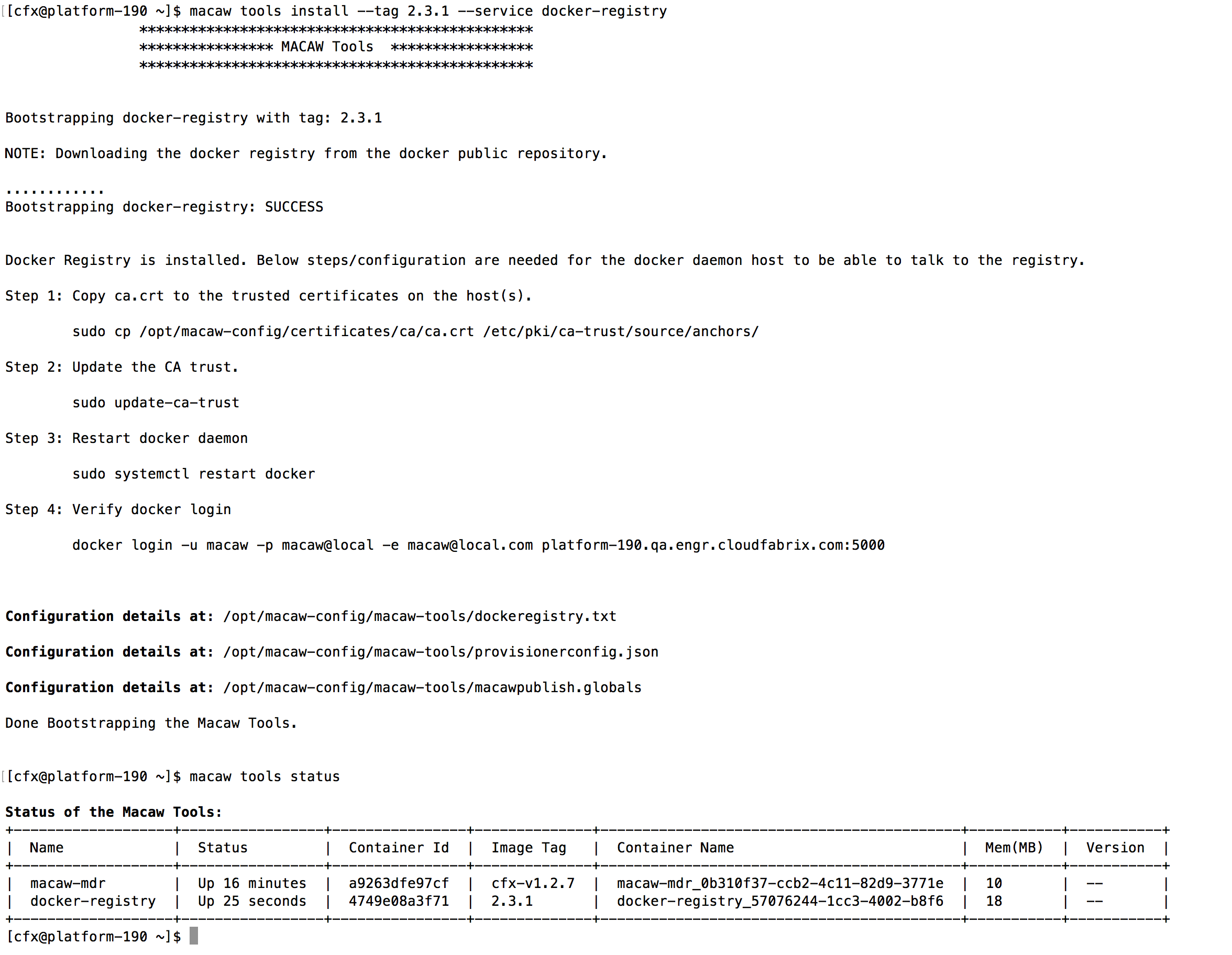

| Macaw Tools

Platform VM |

Macaw Tools are light weight containers providing the MDR (Meta Data Repository) and Docker Registry functionality. These are needed to publish and deploy user developed microservices.

Note: Docker Registry is installed from Central Docker and the version used is 2.3.1. For production deployments it is highly recommended to deploy and configure tools on a separate host. |

macaw-mdr docker-registry |

Environment Preparation

VMWare OVF

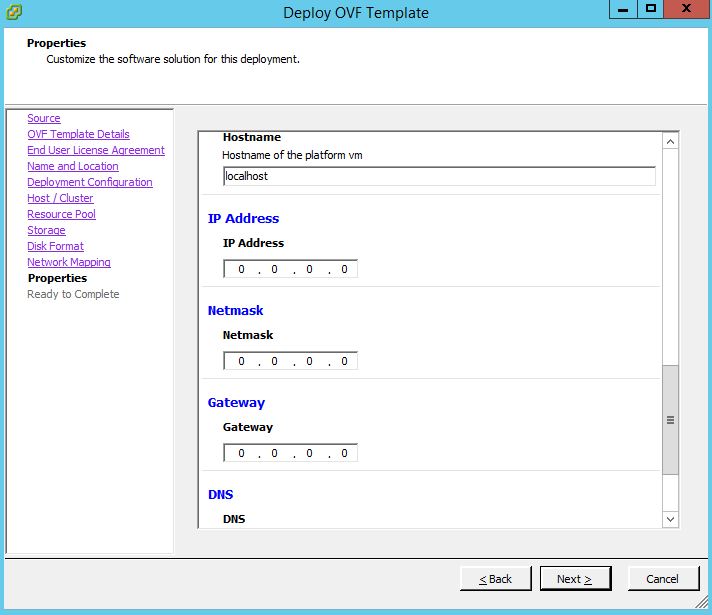

Platform VM and Service VM can be deployed as OVFs. In preparation to deploy the OVFs, keep the IP Addresses, Gateway, Netmask, DNS, NTP, and Hostname details for each VM handy. It is extremely important to have DNS mapping for the hostnames and all nodes to be timed synced with NTP.

Note: Macaw delivered OVFs are compatible with VMware vSphere 5.1 and above.

Prerequisites to deploy OVF’s are as follows.

| Macaw Platform VM | Macaw Services VM | |

| Operating System | CentOS 7.3.1611 (Core) | CentOS 7.3.1611 (Core) |

| RAM(GB) | 24 | 16 |

| vCPU | 8 | 8 |

| NICs | 1 | 1 |

| IP Address | IP, Subnet, Gateway,DNS | IP, Subnet, Gateway,DNS |

| NTP | NTP Server | NTP Server |

| Hostname | DNS Resolvable Hostname |

DNS Resolvable Hostname |

| OS | Tools Needed |

| Windows/Linux | 7z Utility |

| VMware vSphere | 5.1 or above |

| VMWare vSphere Client | 5.1 or above |

Note: For the rest of the document, the nodes will be referred to as displayed here (using the following convention to qualify the context):

Macaw Platform VM – platform.domain.com

Macaw Service VM – service.domain.com

3.1 Two VMware OVFs are delivered as a part of deliverables:

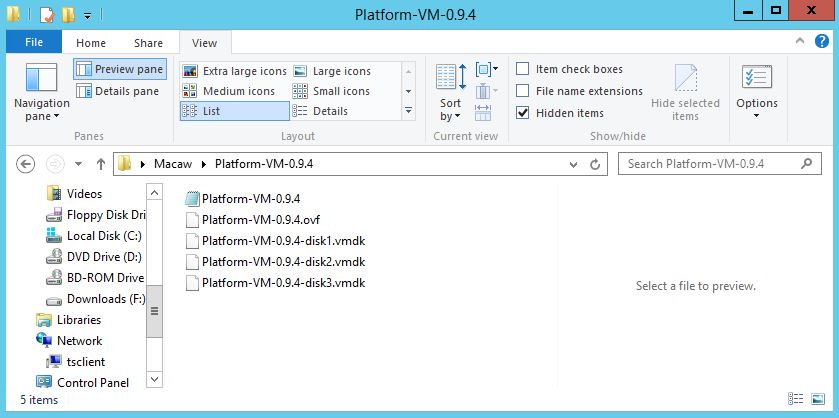

- Platform-VM-0.9.4.zip (Macaw Platform VM in OVF Format)

- Services-VM-0.9.4.zip (Macaw Services VM in OVF Format)

3.2 Download of OVFs

Download the OVFs using the following URLs (contact POC or Support contact at support@www.macaw.io) onto a machine where the vSphere client is available (e.g Windows box where the VMWare vSphere client/environment will be accessible to deploy the above downloaded OVFs.).

Platform OVF: <URL>

Services OVF: <URL>

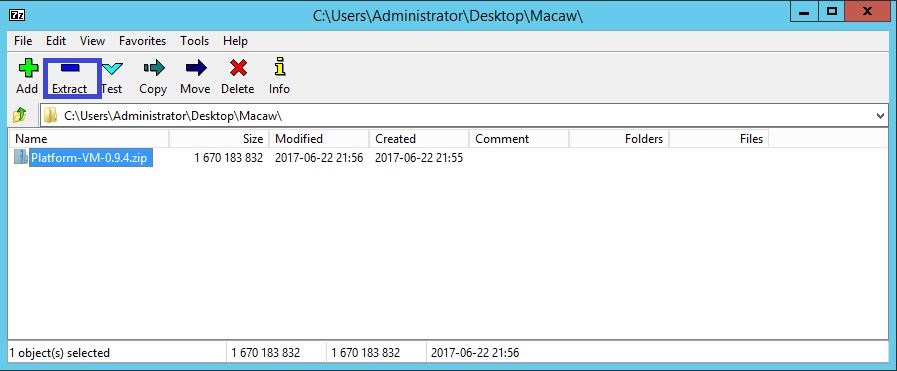

3.3 Extract or Unzip the above downloaded OVF’s using standard unzip utility ( 7z utility ) as depicted in following screen-shots.

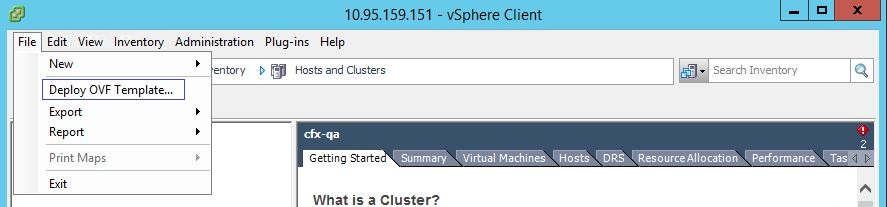

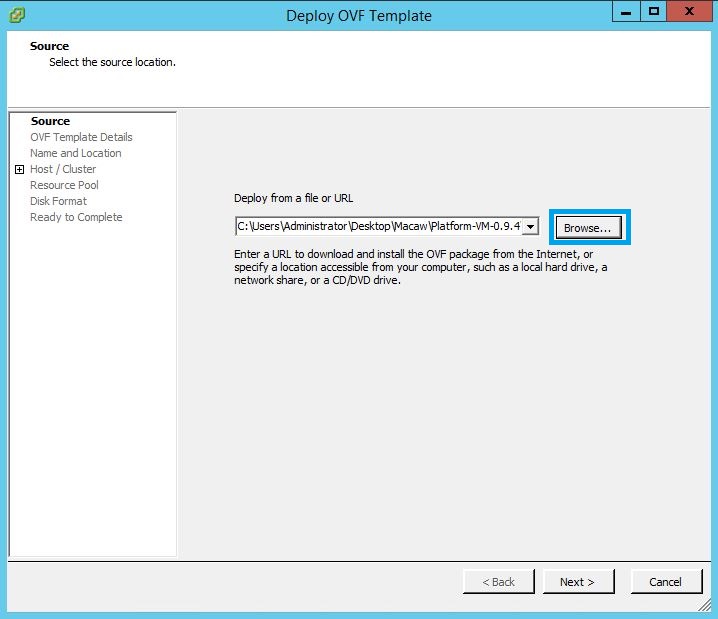

3.4 Deploy OVFs using VMware vSphere Client (using wizard):

The above step will prompt wizard to deploy the OVF’s. Please make sure you have all the prerequisites before deployment starts

Accept license window and follow the steps,

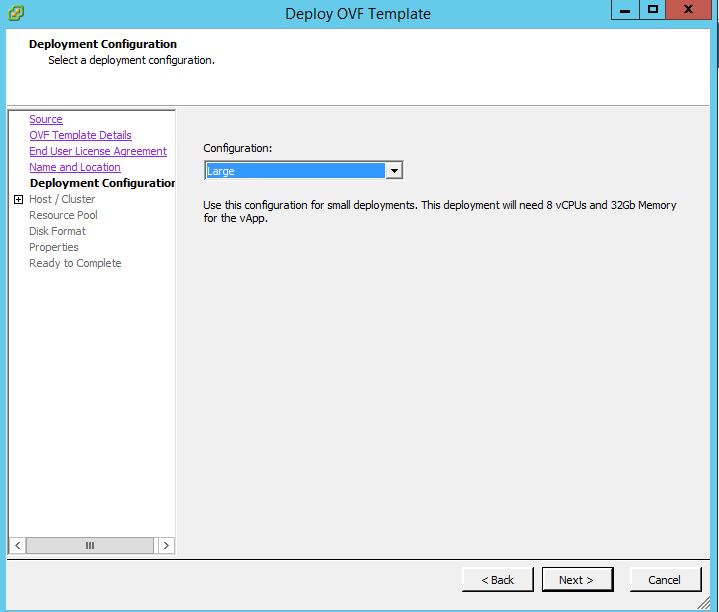

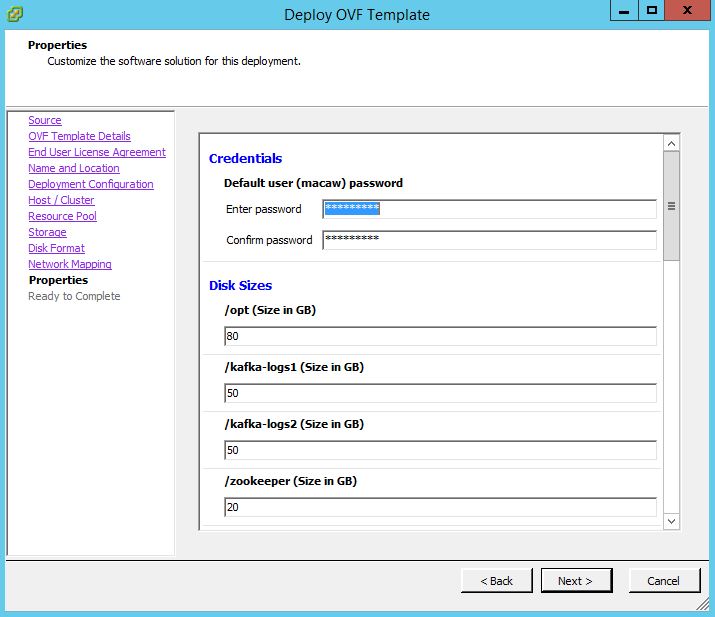

We have three types of deployments in OVF Configurations Small, Medium and Large .We need to select based on requirements,configuration for production is Large.

Select the “deploy OVF template” option and wizard will walk through the deployment process. Please make to have the following details ready before deploying the VMs as described in the prerequisite section of the document.

- Cluster: where to deploy these VMs

- Storage: to use for deploying VMs

- Network: to use for deploying VMs (Port Group details reachable to network)

- Hostname, IP Address, Gateway etc) as wizard will prompt to enter those values during the deployment process

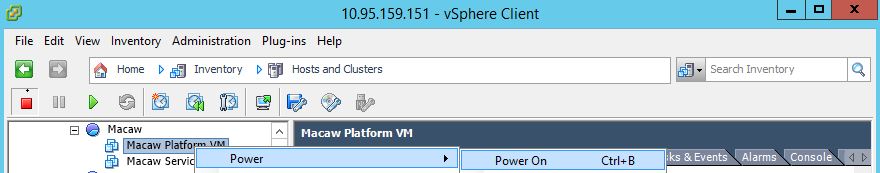

3.5 Power On VMs

Once the above step is completed (deployment of OVF templates into vSphere environment is completed using wizard), power on the VMs as shown below.

Once the VM’s are powered on, make sure to access the above VM(s) using the DNS/IP entries (via ping or ssh to connect to the above deployed and configured VMs over the network) that were provided by the user to the VMs during deployment.

3.6 Login Verification of deployed VMs (OVFs):

Verify the login via SSH client of choice to Platform VM and Service VM Nodes with the below credentials.

E.g :-

————————————————————————————-

ssh macaw@platform.domain.com <Enter>

User: macaw<Enter>

Password: appd1234<Enter>

————————————————————————————-

The above will login into Macaw Platform / Service VM shell.

Note: Another user ‘macaw’ belonging to group ‘macaw’ is also defined under whom the infrastructure services would be running. This user doesn’t have any password.

Once the above steps are done, move to the platform installation section.

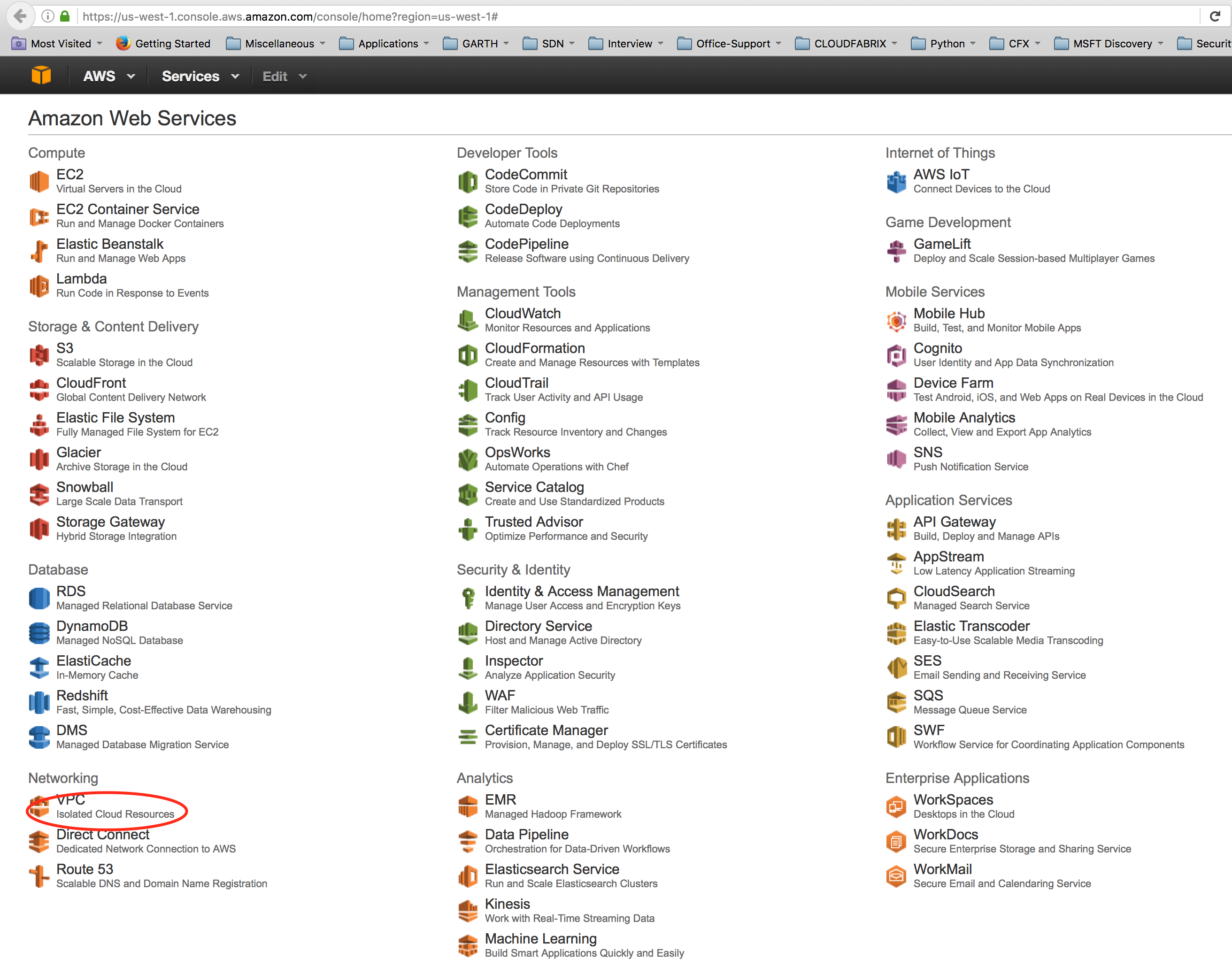

AWS

Prerequisites

The subsequent instructions assume that AWS is setup with basic or necessary configurations like VPC, Networks etc. Refer to the AWS link below on how to execute an AWS setup for a new account.

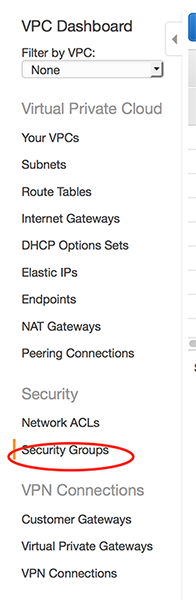

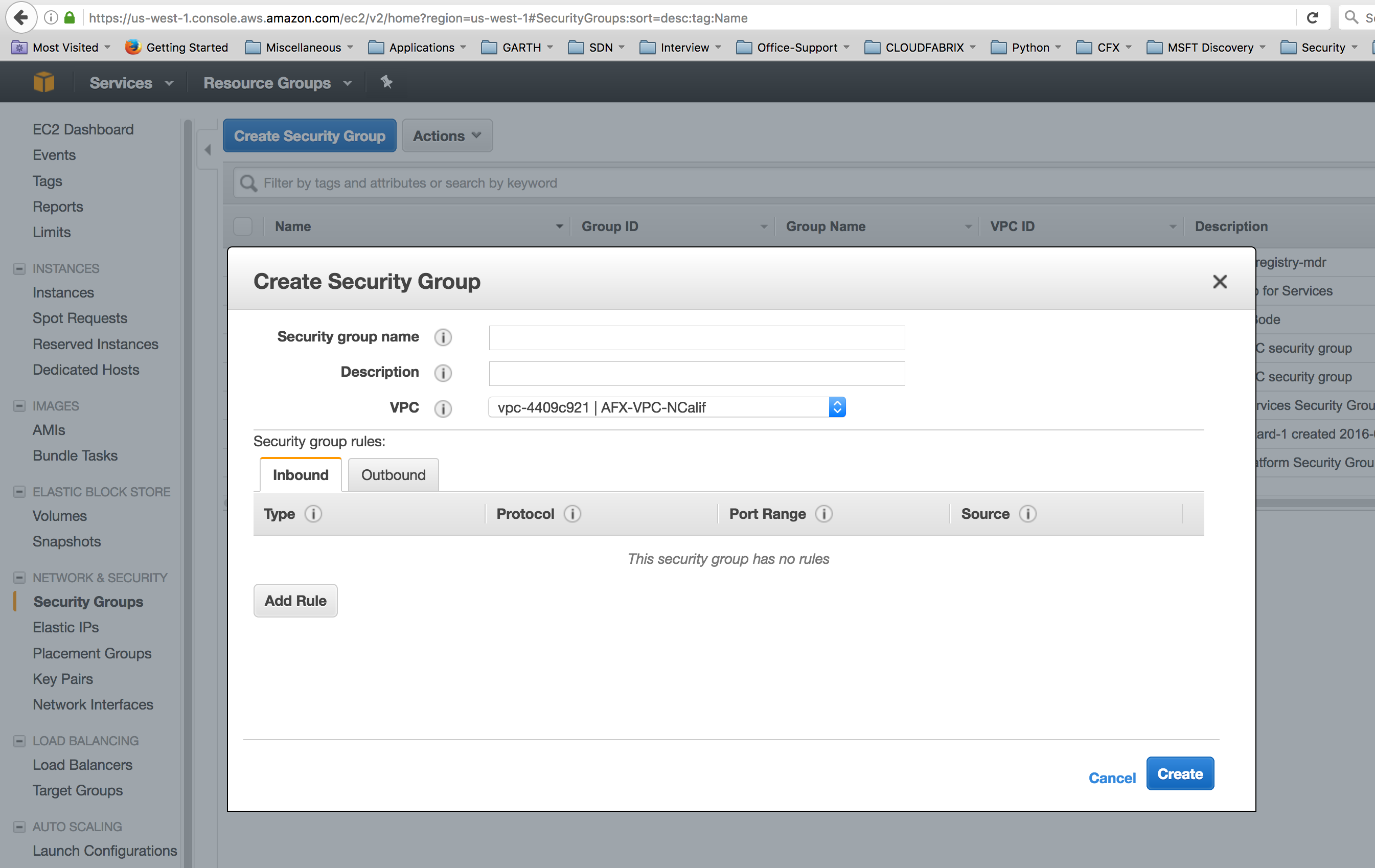

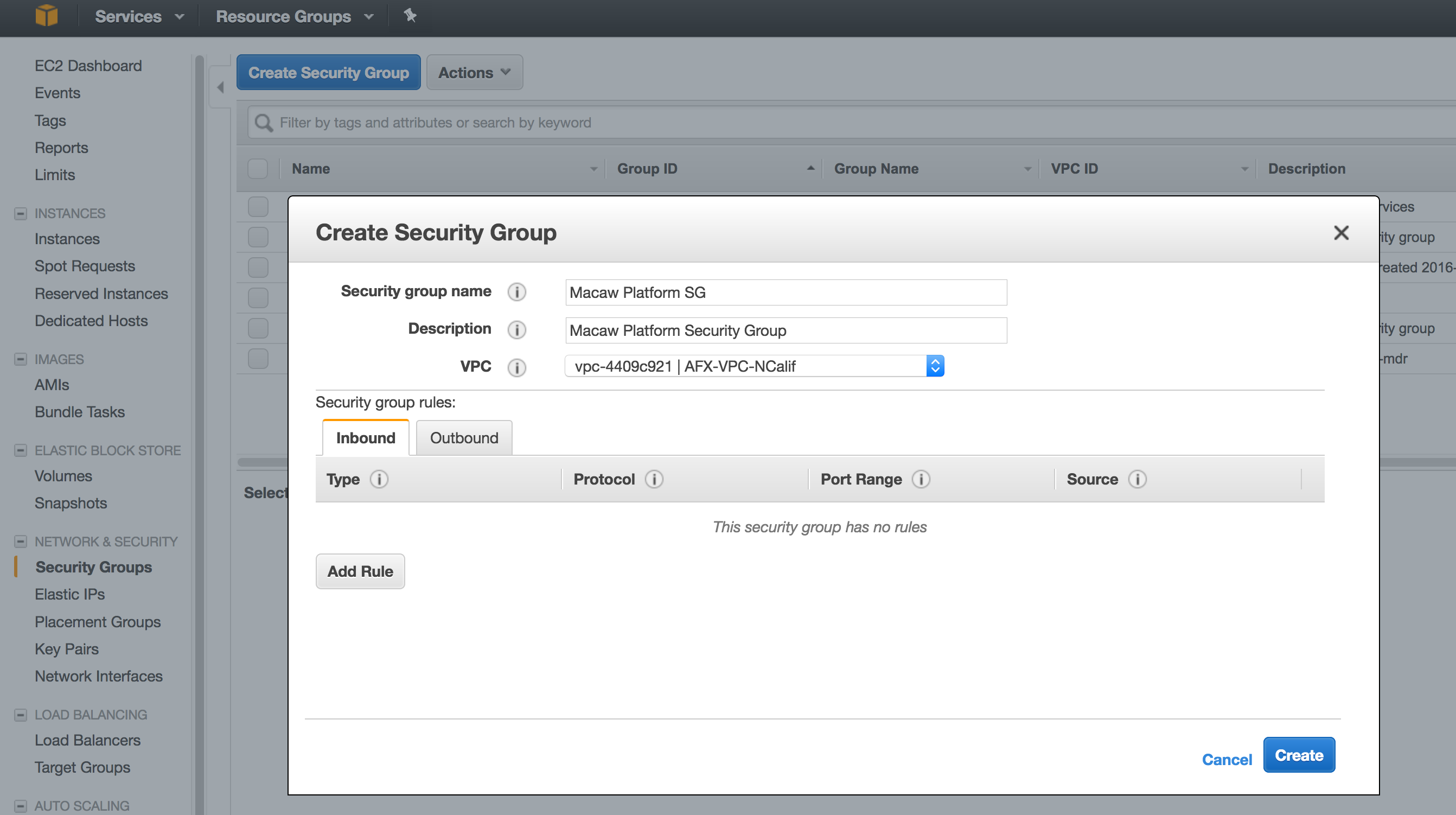

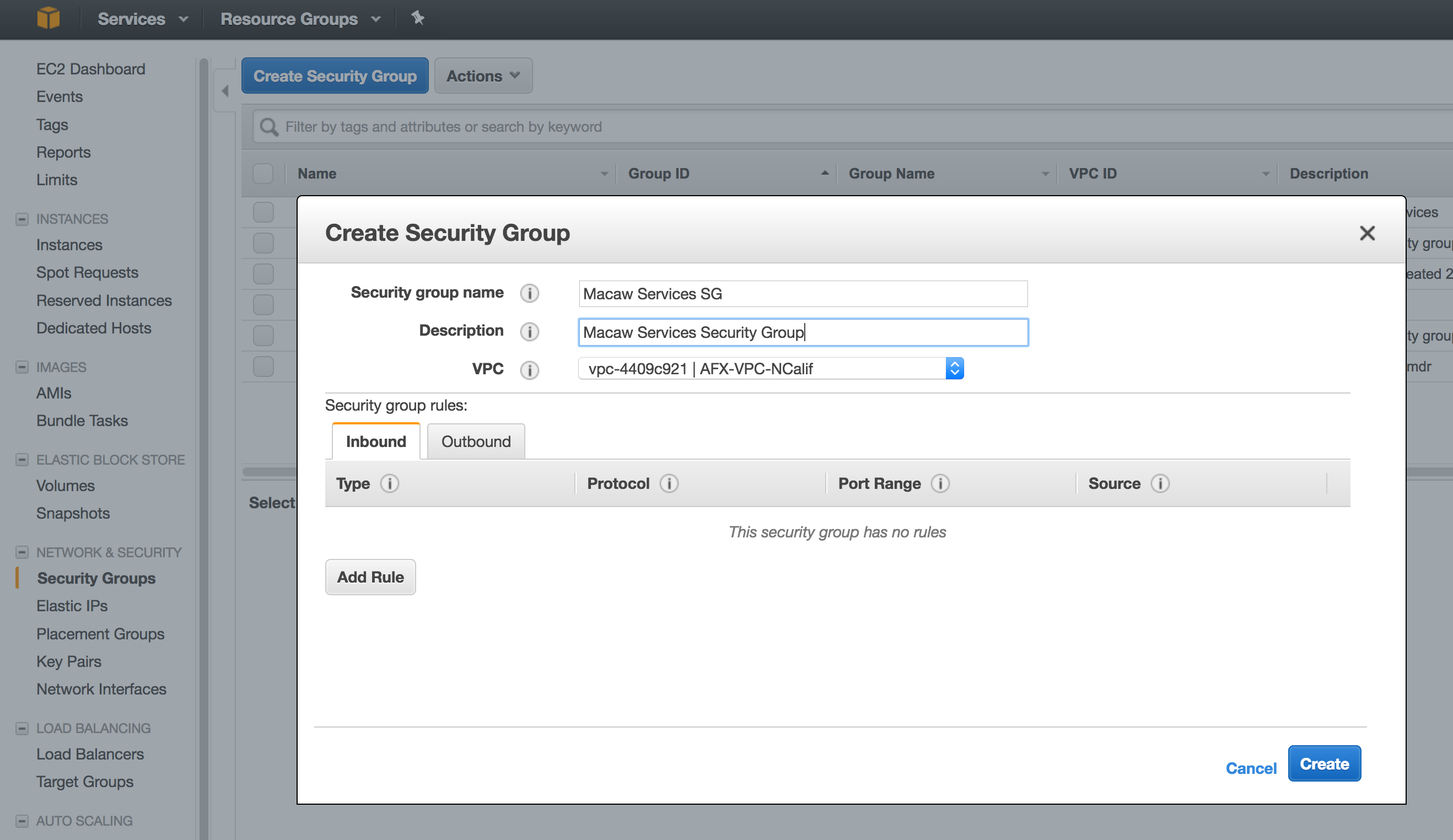

Security Group Creation

This section of the document explains how to create the AWS security group for platform and service VMs. Also refer to the AWS Security Group documentation for more details.

- Login to AWS EC2 and select the VPC under Networking.

- Select the option Security Groups under the Security.

- Click on ‘Create Security Group’ and create the group.

- Provide the Name Tag, Group Name and description. Select the VPC that you want this group to belong to. If you are not sure of the VPC, please check with the administrator of your AWS EC2 cloud account.

- Now click ‘Create Security Group’ again and create security group for Service Instances.

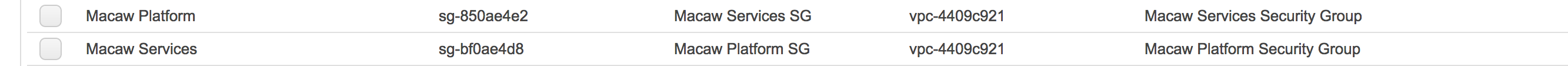

- You should now be seeing 2 security groups: one for platform, one for services like below.

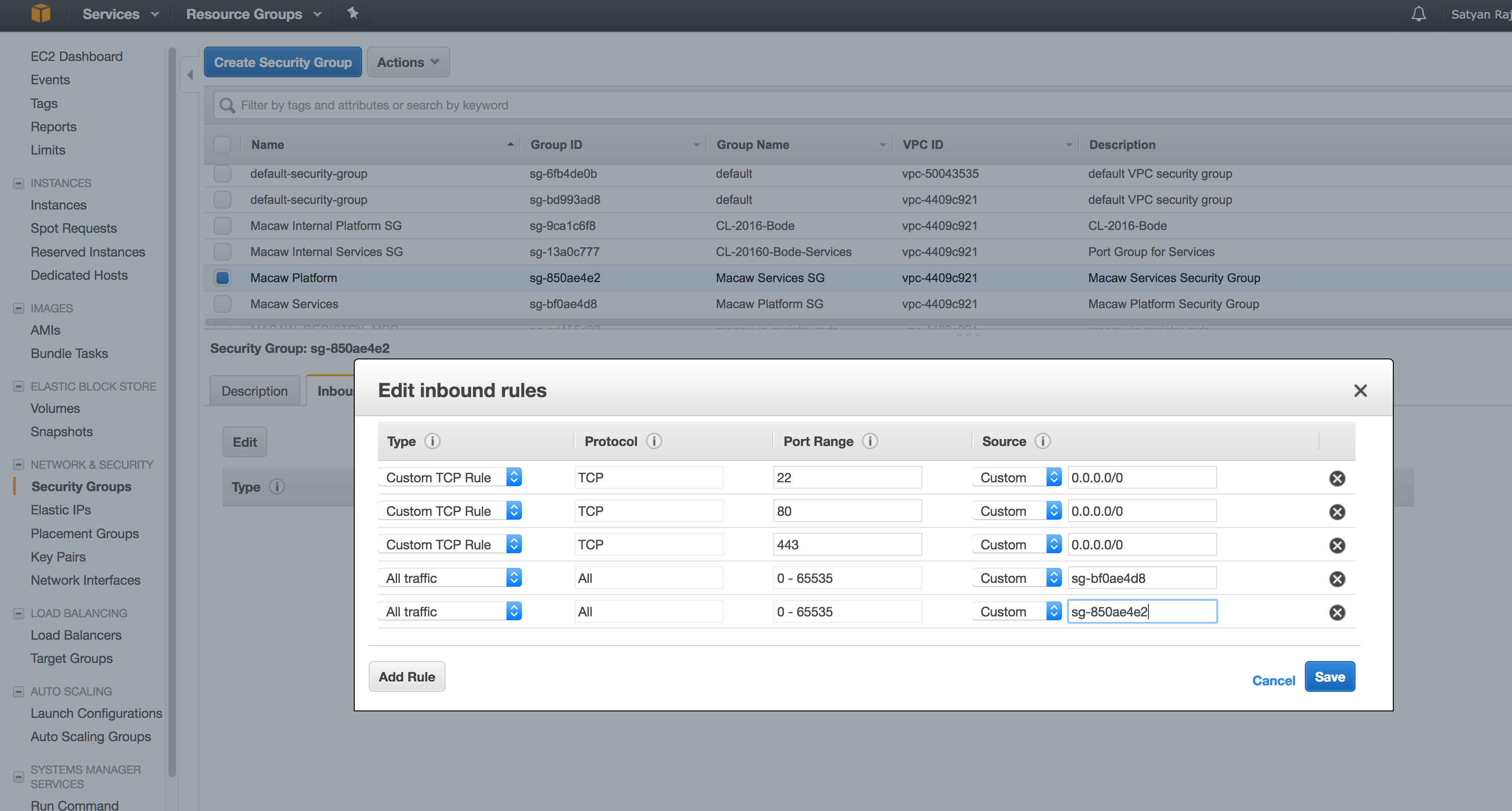

Security Group Configuration

- Select the platform security group created in the previous section and navigate to the Inbound rules. Click on Edit and add the below inbound rules to the platform security group. Once done, click on save.

Note:

sg-bf0ae4d8 – In the above rule, this refers to the Platform Security Group ID. This is to allow any traffic between the instances in this group.

sg-850ae4e2 – This refers to the Services Security Group ID. This is to allow any traffic between platform security group and services security group.

HTTP and HTTPS rules – This is to allow HTTPS access to macaw console portal. HTTP is redirected to HTTPS.

Note: If you are installing macaw tools (MDR and Docker Registry), allow the additional TCP ports 8637 and 5000 as well similar to 80/443/22.

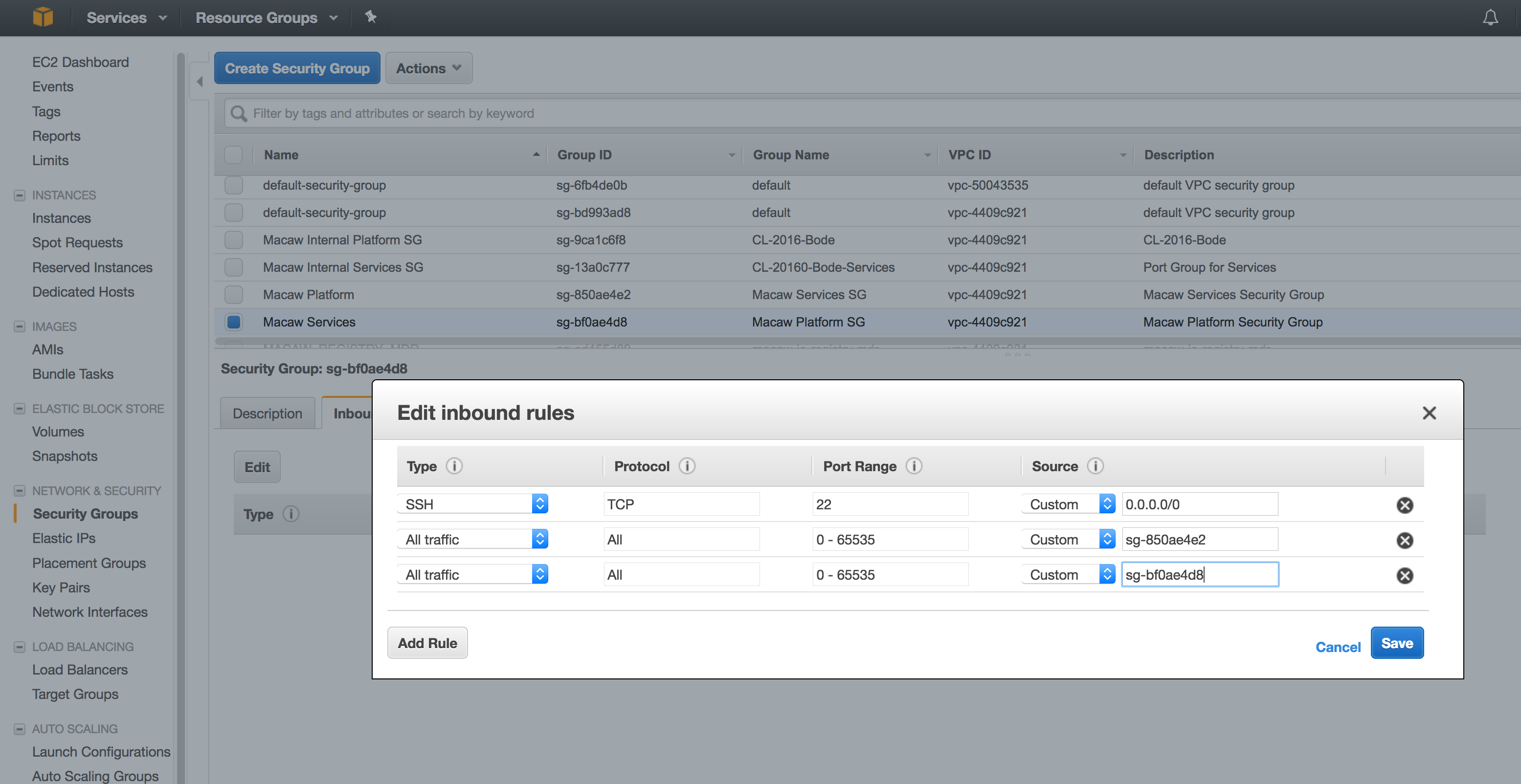

- Now select the services security group and add the below rules.

Note:

sg-bf0ae4d8 – In the above rule, this refers to the Platform Security Group ID. This is to allow any traffic between the instances in this group.

sg-850ae4e2 – This refers to the Services Security Group ID. This is to allow any traffic between platform security group and services security group.

Launching Platform Instance

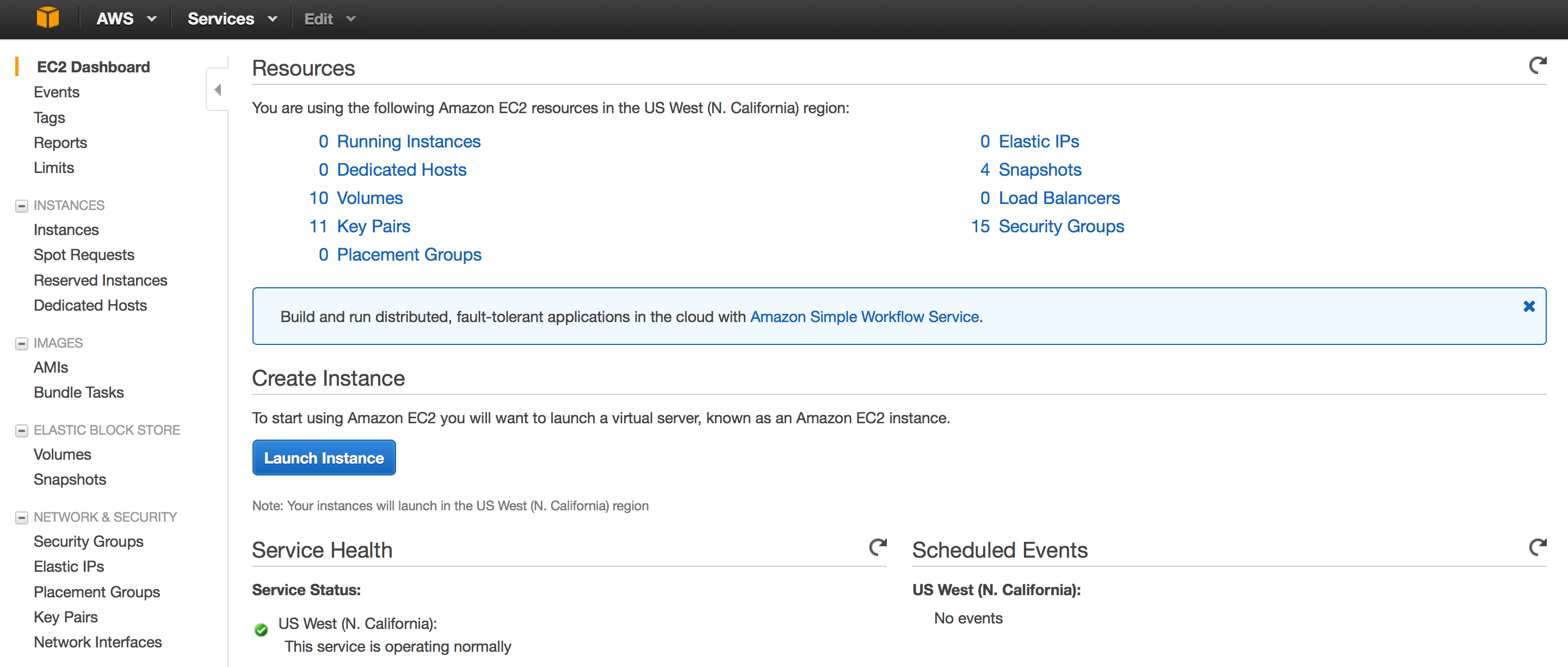

- Login to your AWS EC2 cloud and navigate to the EC2 dash board.

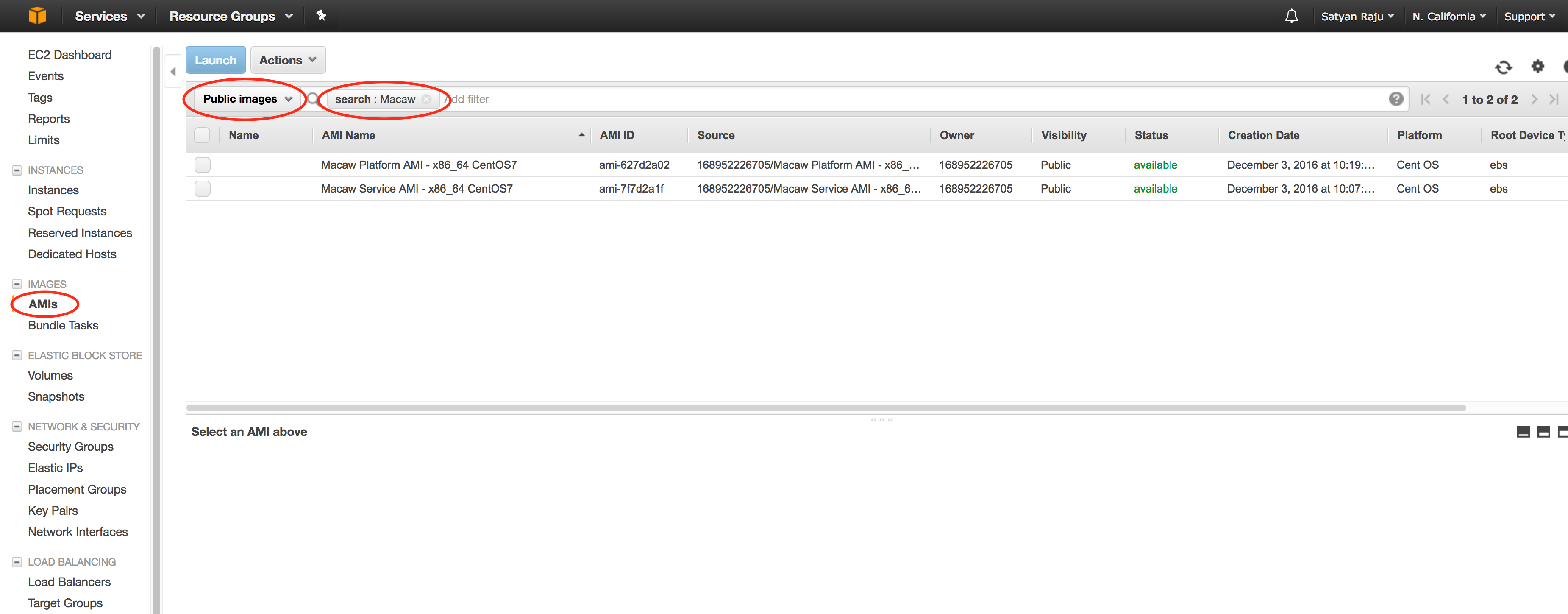

- Go to the AMIs and look for macaw Platform and Service Instances by typing ‘Macaw’ in the search filter like shown below. These are public AMIs currently. Contact POC or support contact at support@www.macaw.io to get further details on the AMI.

- Refer to the AWS link on how to find public AMIs. Refer to the below screen on how search.

- Select the Public Images view under the AMIs.

- Select the macaw Platform Instance and click on Launch.

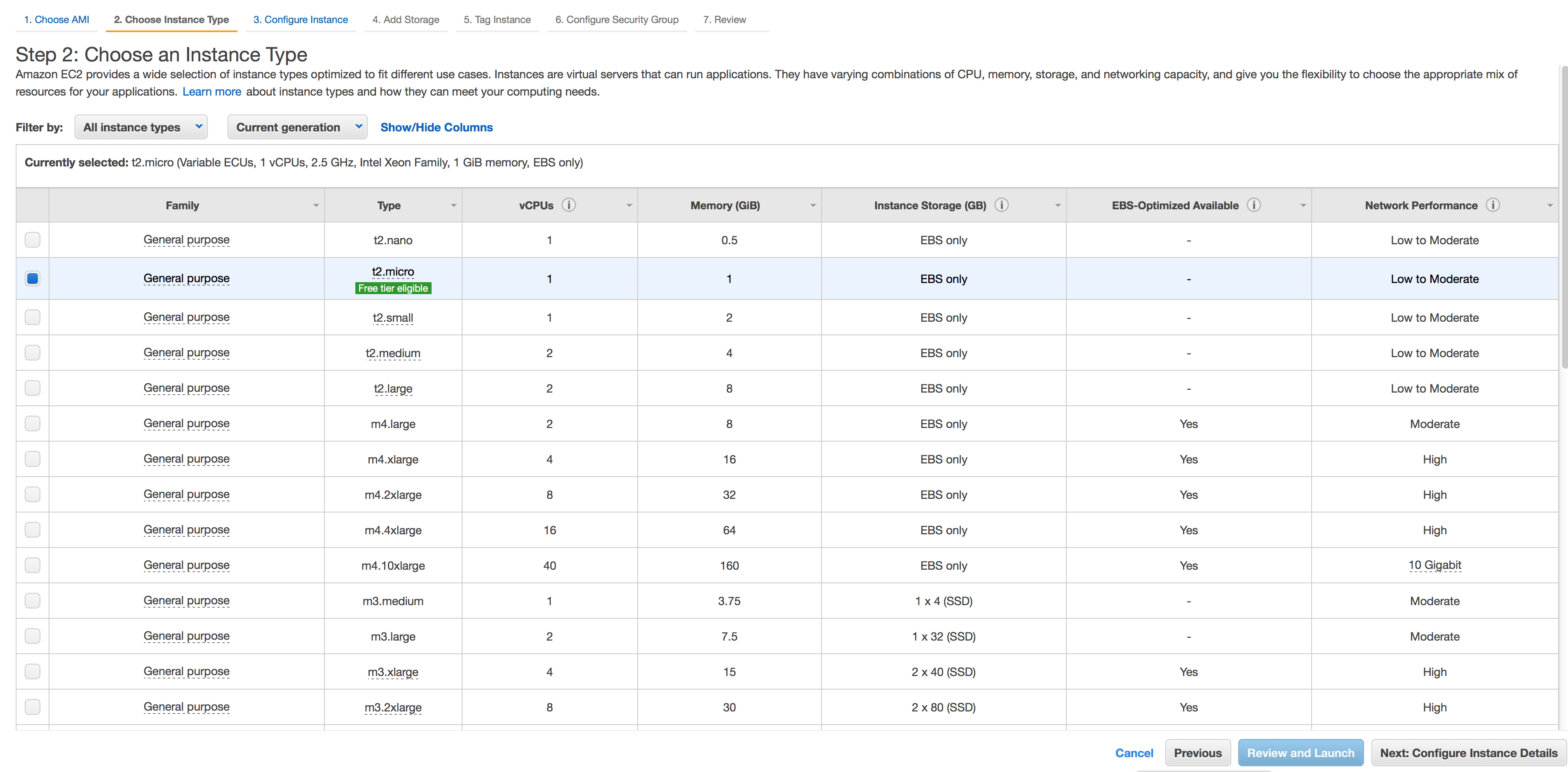

- Chose an Instance Type.

Note: Production installation of macaw Platform AMI requires minimum 8 vCPUs and 24 GIB of Memory.Select m4.2xlarge as the instance type for production installations and click on Configure Instance Details. For POCs you can chose a smaller type like t2.large or above. Minimum 8GB RAM is required for macaw platform.

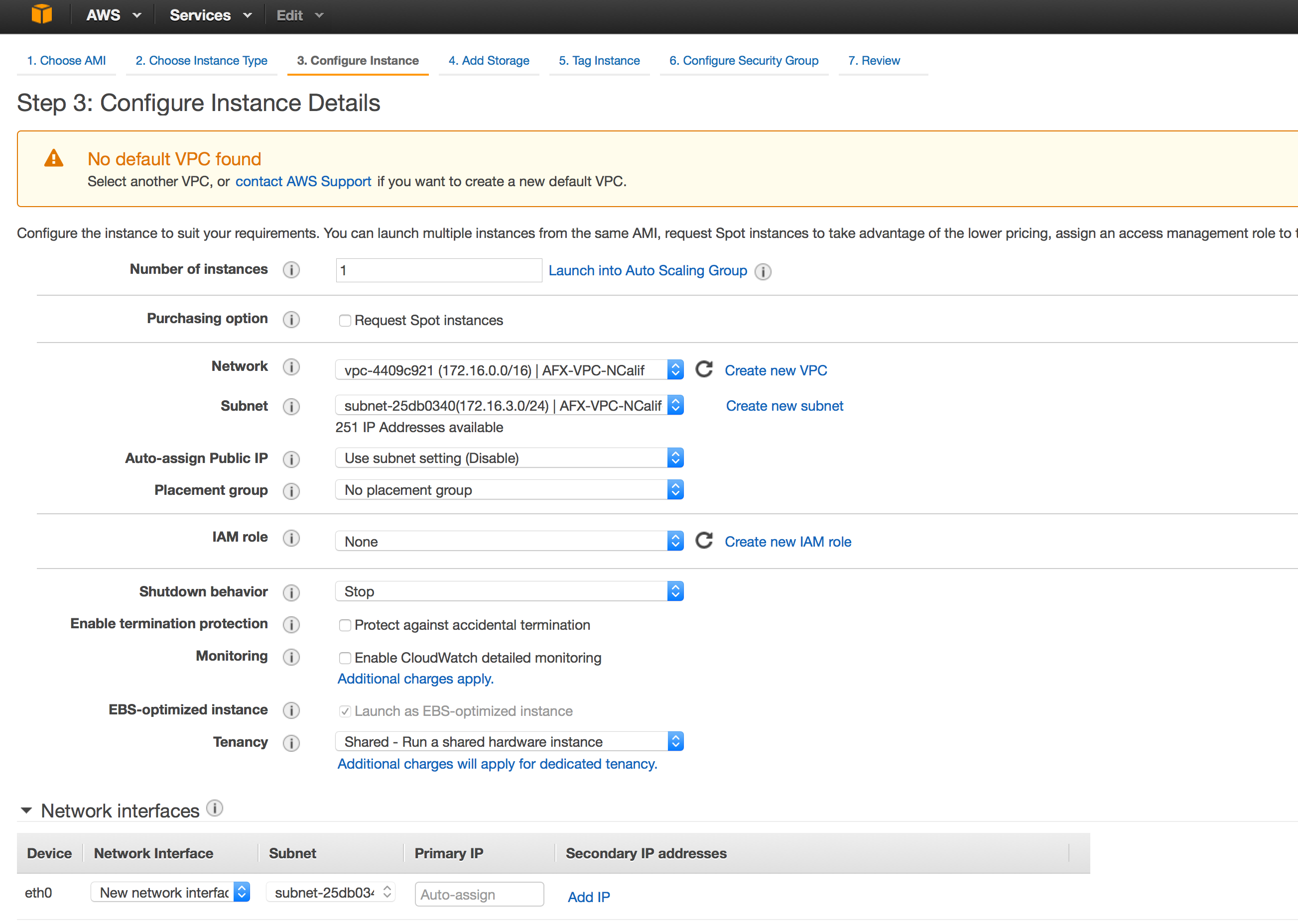

- The below settings in the next page are specific to your AWS environment. Brief explanation is provided below with some details.

Number of Instances: Select 1 Purchasing Option: <This is specific to your AWS Environment. If not sure, please leave it unchecked> Network: This is specific to your AWS environment. This is the VPC for AWS region your are operating in. Check with your admin on what VPC to use. Subnet: This is also specific to your AWS Environment. Try selecting a subnet for which public IP is assigned, so that you can access the machines from outside. Auto-assign Public IP: If you are not sure of Public IP assignment for the above subnet, you can select this to Enable. Placement group: <Check with AWS Admin. If not sure, leave default> IAM Role: <Check with AWS Admin. If not sure, leave default> Shutdown Behavior: <Check with AWS Admin. If not sure, leave default> <Rest All Options Leave Default>

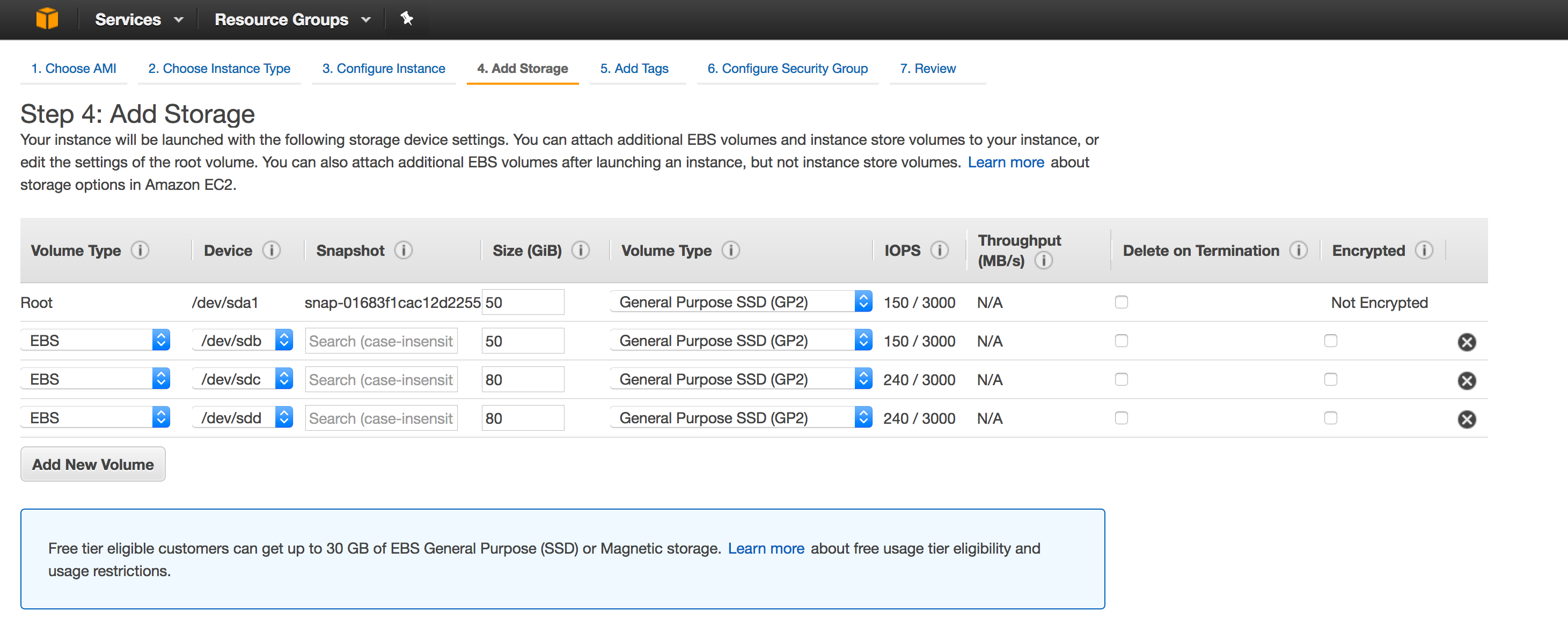

- Adding Storage

macaw Platform AMI runs disk intensive programs like kafka/zookeeper/cassandra. Hence it is absolutely necessary to have separate disks hosting the relevant data for these programs. The AMI also supports minimal installation mode, where extra disks are not needed and the user can select default disks with the AMI and just change the size.

Non Production Disk Configuration

| Disk | Purpose | Recommended Min Size | Details |

| sda1 | Root OS Disk | 50G | This is the root OS disk. |

| sdb | Docker data disk (/var/lib/docker) | 50G | This is the data disk for docker. |

| sdc | Docker LVM Volume | 80G | This is storage disk from which containers are provided the disks. Depending on the number of containers you plan to run, it is recommended to increase this size. This is thin volume and each container is assigned (thin) 10G. |

| sdd | For the macaw operational and configuration data. This disk is also used for serving a shared NFS volume. | 80G |

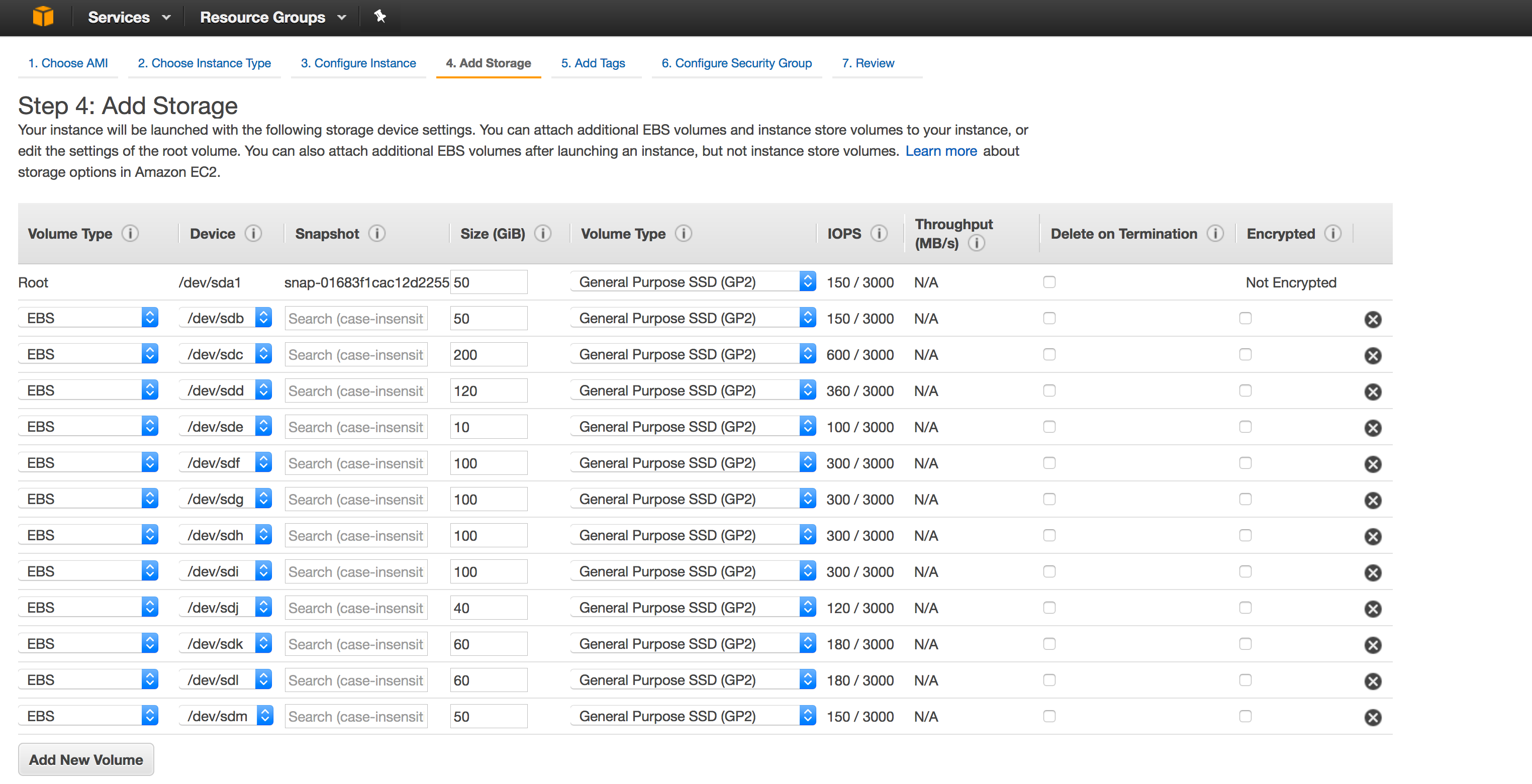

| Disk | Purpose | Recommended Min Size | Details |

| sda1 | Root OS Disk | 50G | This is the root OS disk. |

| sdb | Docker data disk (/var/lib/docker) | 50G | This is the data disk for docker. |

| sdc | Docker LVM Volume | 200G | This is storage disk from which containers are provided the disks. Depending on the number of containers you plan to run, it is recommended to increase this size. This is thin volume and each container is assigned (thin) 10G. |

| sdd | For the macaw operational and configuration data. This disk is also used for serving a shared NFS volume. | 120G | |

|

sde |

For Zookeeper Data (/zookeeper) | 20G | This for storing the macaw platform infra service ‘zookeeper’ data |

|

sdf |

For Kafka Logs (/kafka-logs1) | 100G to 200G | Kafka Logs are persisted on disk with periodic cleanup. It is recommended to increase this volume size, if you anticipate to run 100’s of micro services. |

|

sdg |

For Kafka Logs (/kafka-logs2) | 100G to 200G | Kafka Logs are persisted on disk with periodic cleanup. It is recommended to increase this volume size, if you anticipate to run 100’s of micro services. |

|

sdh |

For Cassandra (/cassandra-db-01) | 100G | This is for Cassandra DB Data. |

|

sdi |

For Cassandra (/cassandra-db-02) | 100G | This is for Cassandra DB Data. |

|

sdj |

For Cassandra Commit Logs (/cassandra-commit-logs) | 40G | This is for storing cassandra commit logs. |

|

sdk |

For Elasticsearch (/elasticsearch) | 60G | For Elastic search Data. |

|

sdl |

For Redis (/redis) | 60G | For Redis Data |

|

sdm |

For MySQL (/var/mysql) | 50G | For MySQL Data. |

Note: The Platform AMI auto mounts all these additional volumes.

Once the storage is configured, please click “Next: Tag Instance”

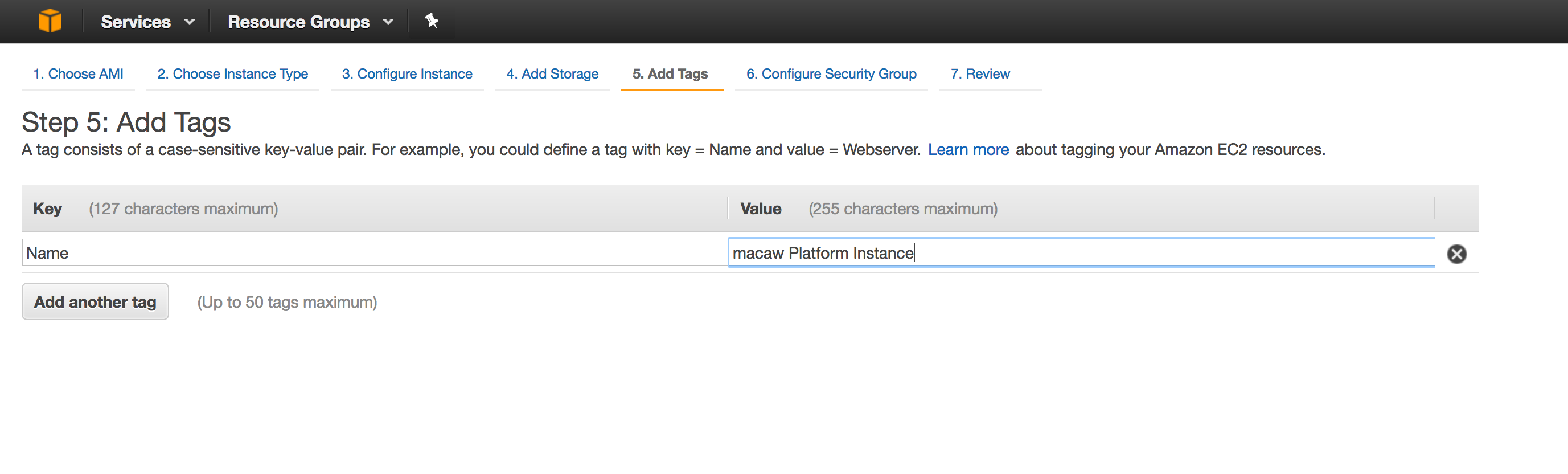

- Tag Instance

Provide a name to the platform instance and then click “Configure Security Group”

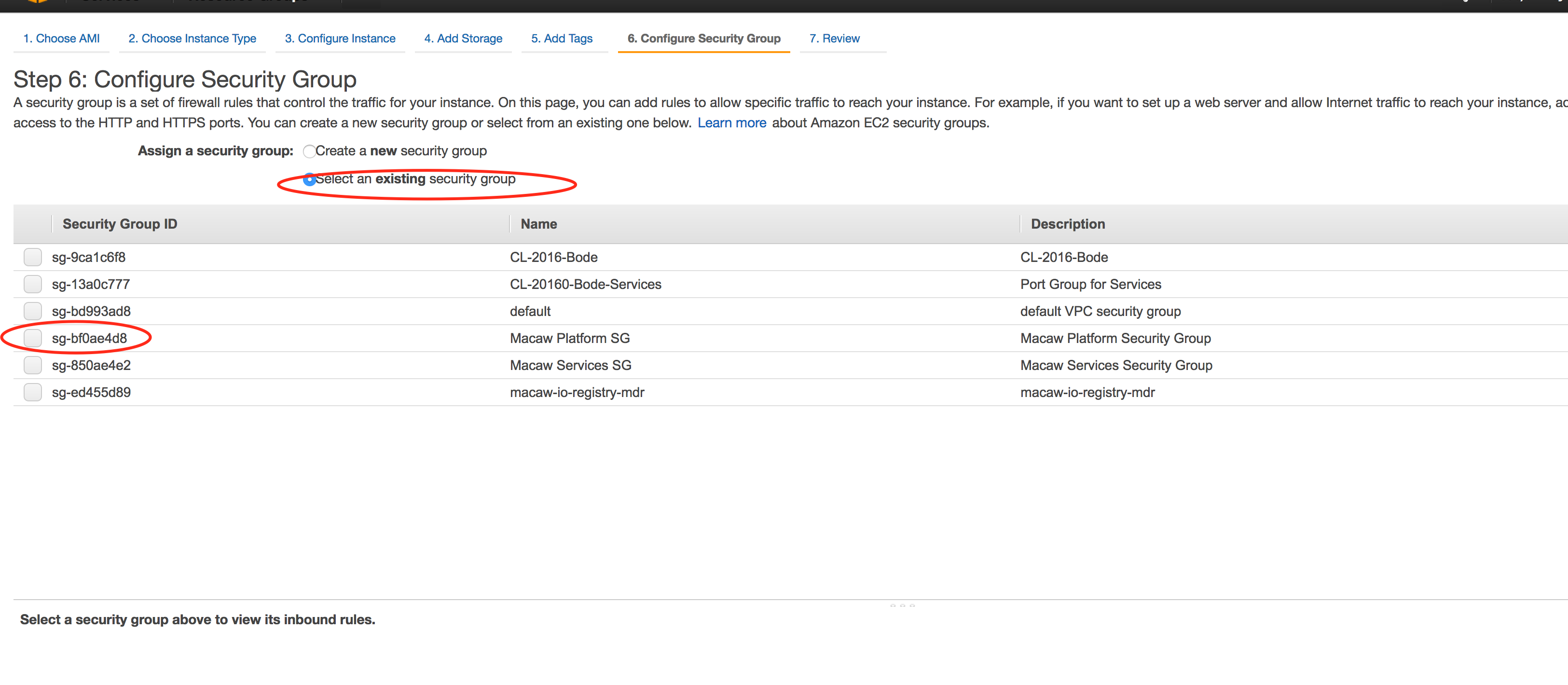

- Configure Security Group

Select the option “Select an existing security group” and then select the Platform Instance Security Group you defined earlier and click on “Review and Launch”.

- Review your selections and then click launch.

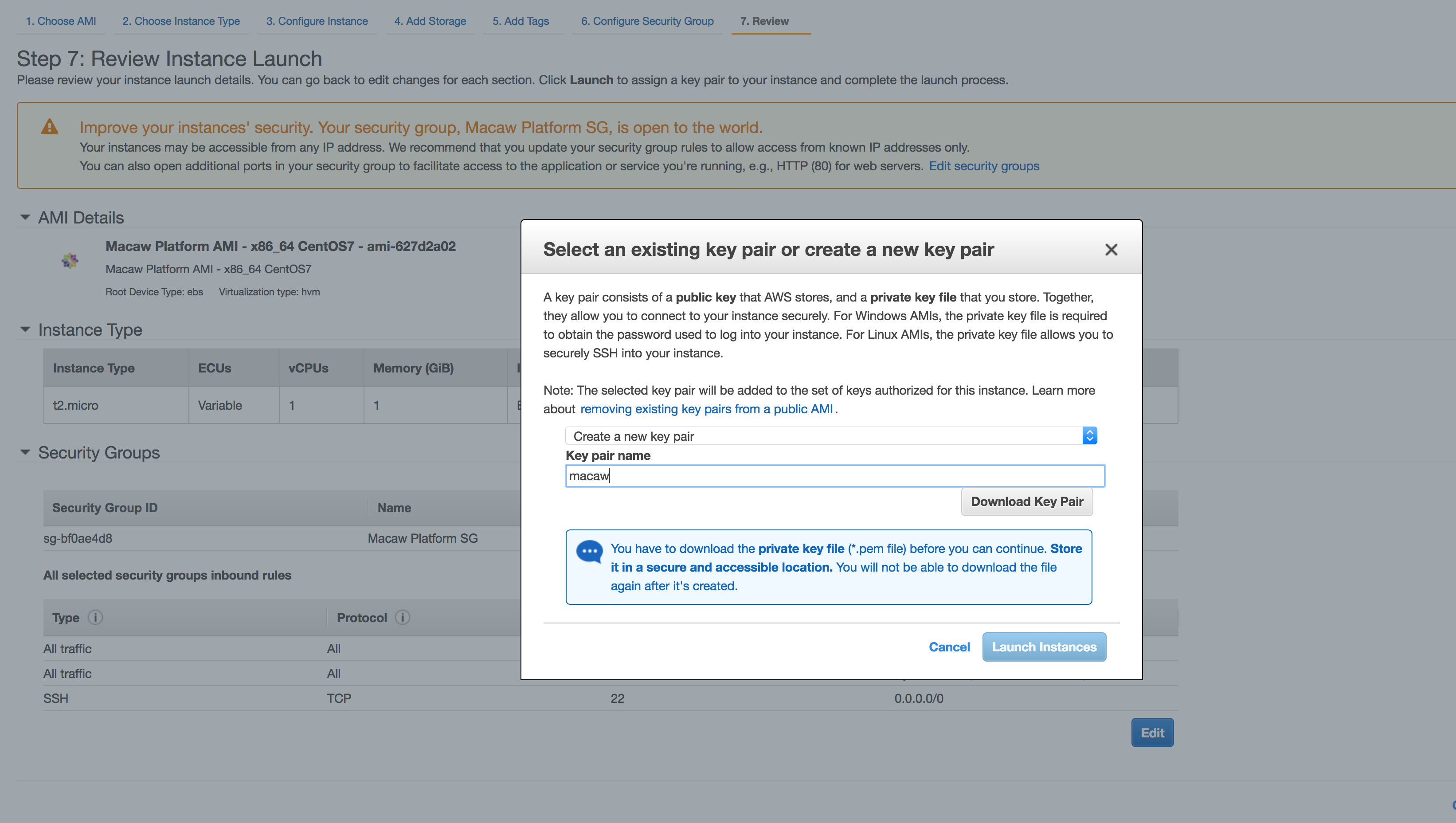

- Next your will be prompted for selection of keys. This is where AWS will let your chose an exiting key or to create a key pair and program your instance with the public key of the key pair. You are supposed to be holding the private key of the pair. If you lose the private key, it cannot be downloaded again. AWS only lets you download the key pair once when it is created.

Note: You can chose to create a new pair and use this for all macaw related AWS instances. Make sure to download the private key and store it securely.

- Click Launch and wait for instance to be available.

Launching Service Instance

If you need service instance as well, follow similar instructions on the launch service instance as well. The service instance AMI doesnt require any additional disks. For the pre-populated disks, chose the sizes as described above for the platform instance (These base mandatory disks follow the same recommendations). When selecting the security group, make sure to select the one which is defined for the Services.

Elastic IP

While AWS provides public DNS/IP to each of the instance you launch based on your network settings, it is highly recommended to use Elastic IP to the platform instance. You can refer to this document from AWS for more details on the Elastic IP.

Please follow the below instructions on how to get an Elastic IP and assign it to the platform instance.

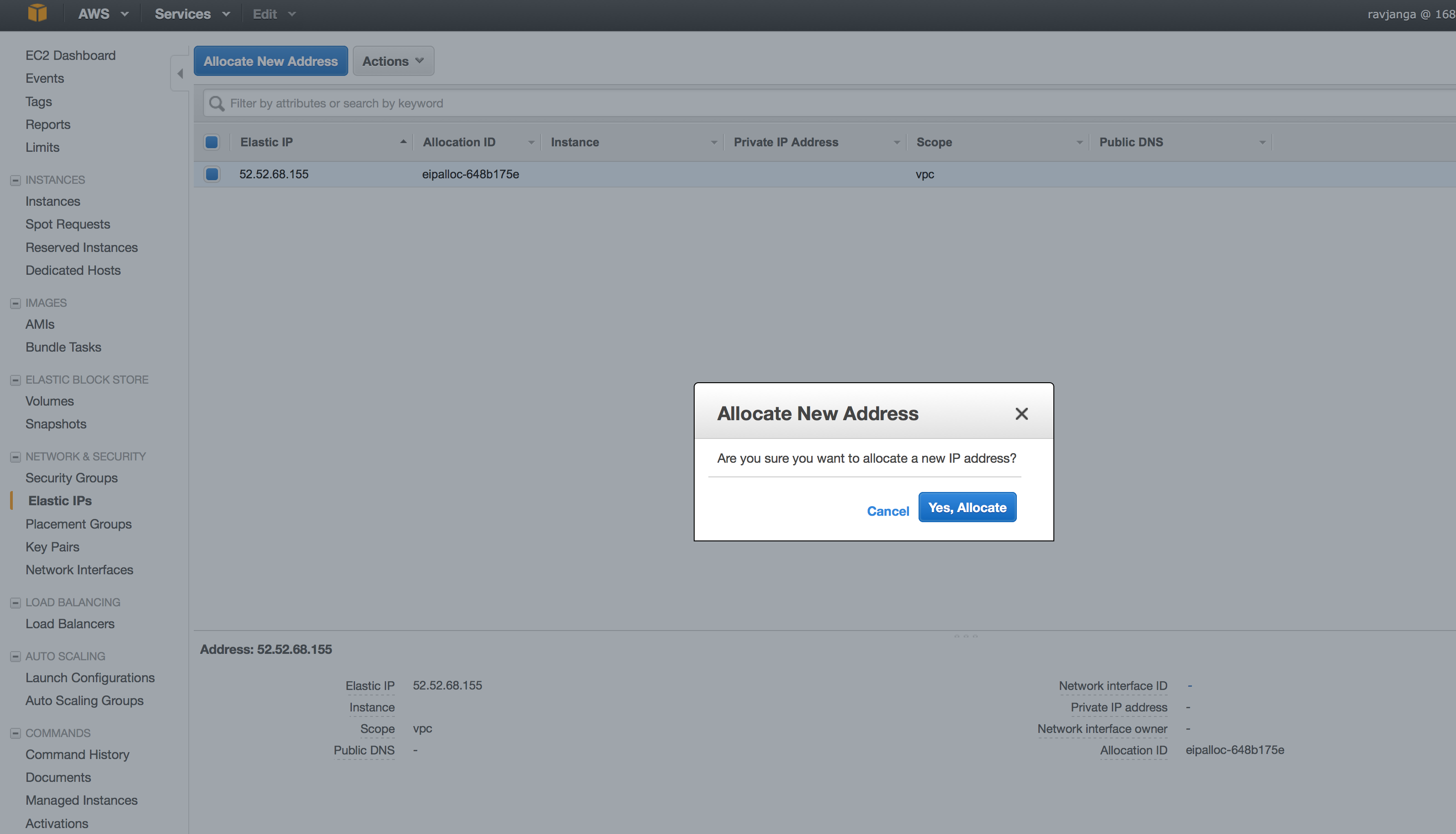

- Go to the EC2 Dashboard.

- Select the “Elastic IPs” from left side.

- Click on “Allocate New Address”

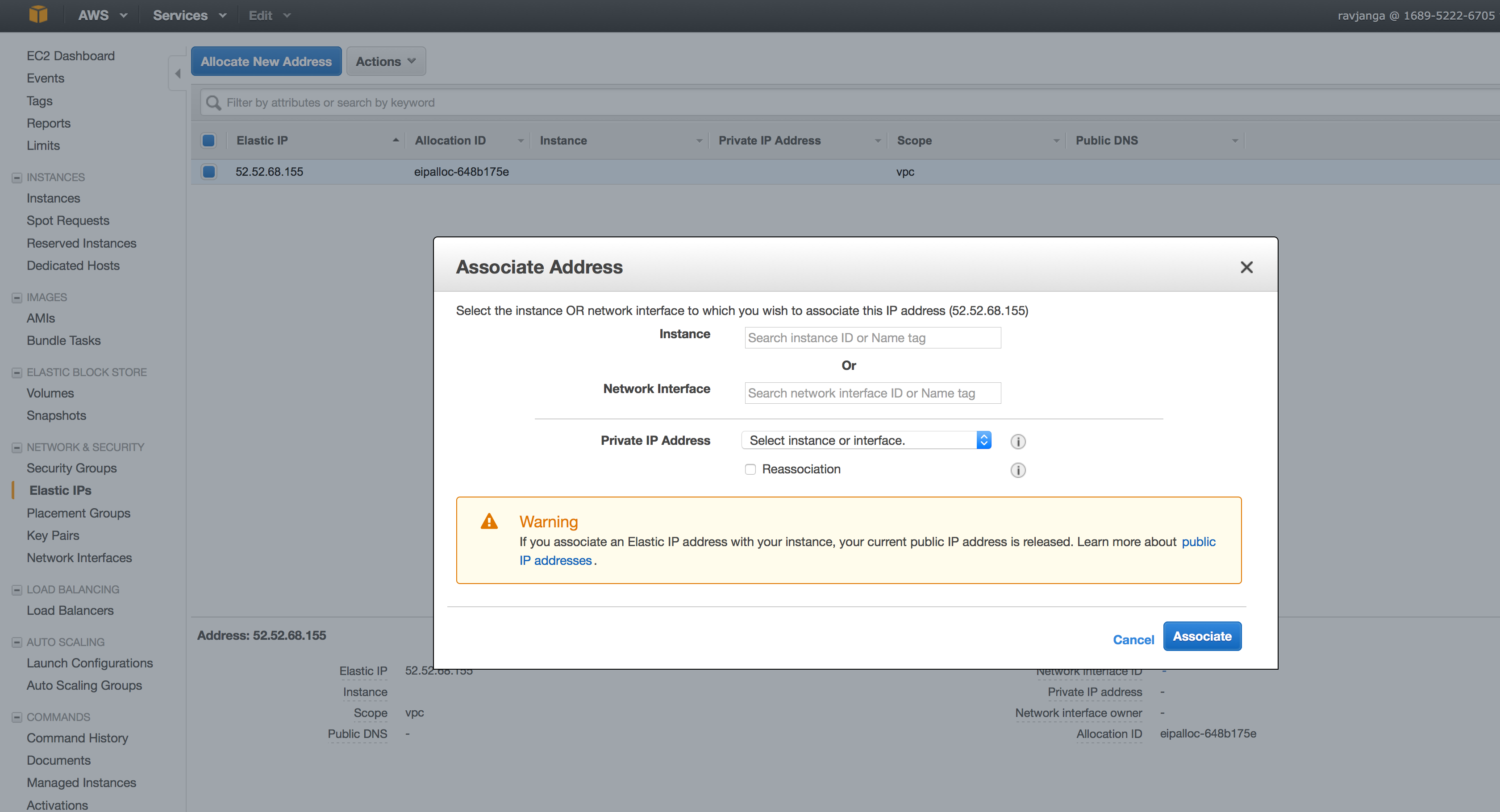

- Select the IP address and from the “Action” select the action “Associate Address”

In the Instance, find the platform instance and click “Associate”

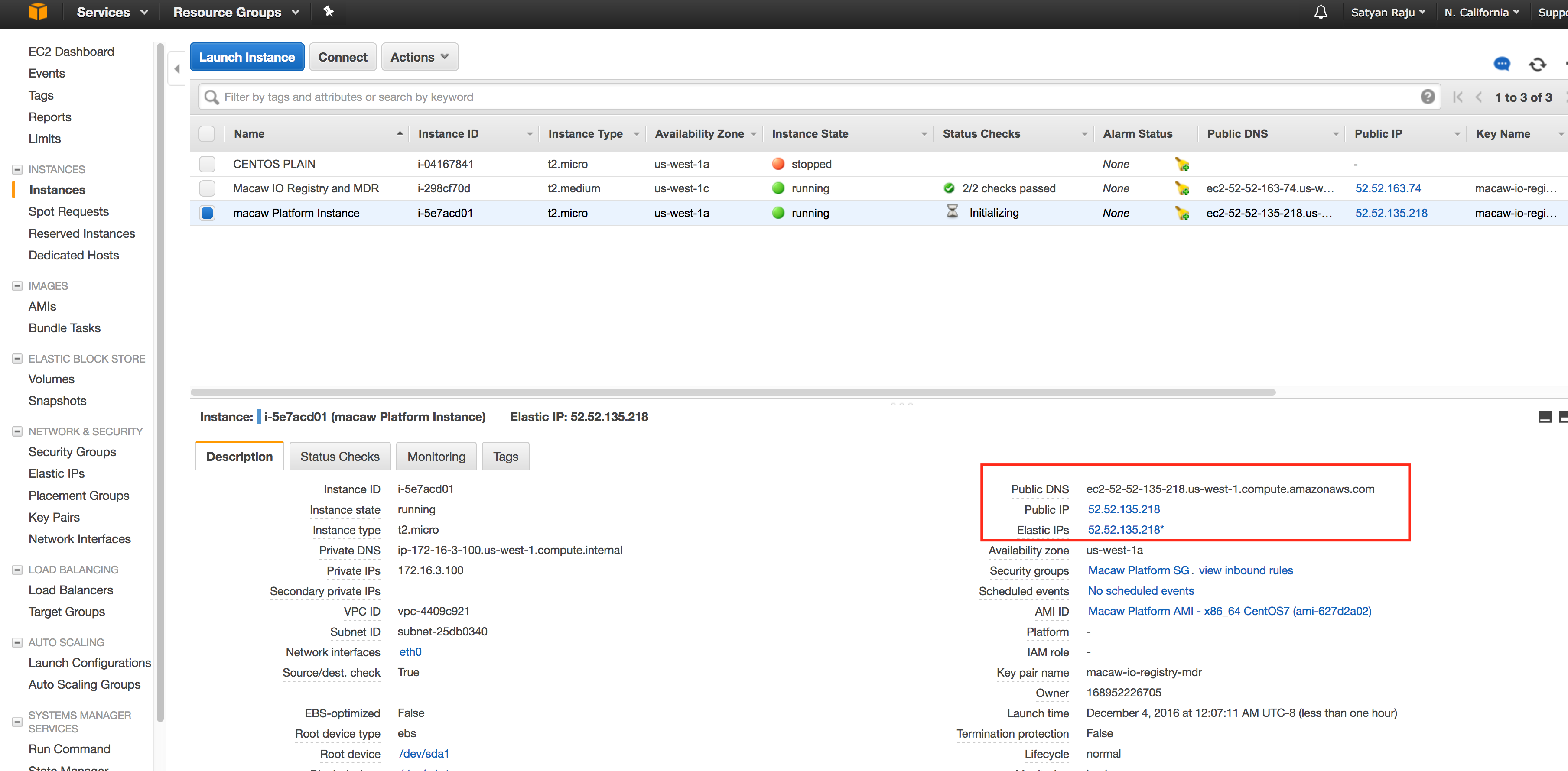

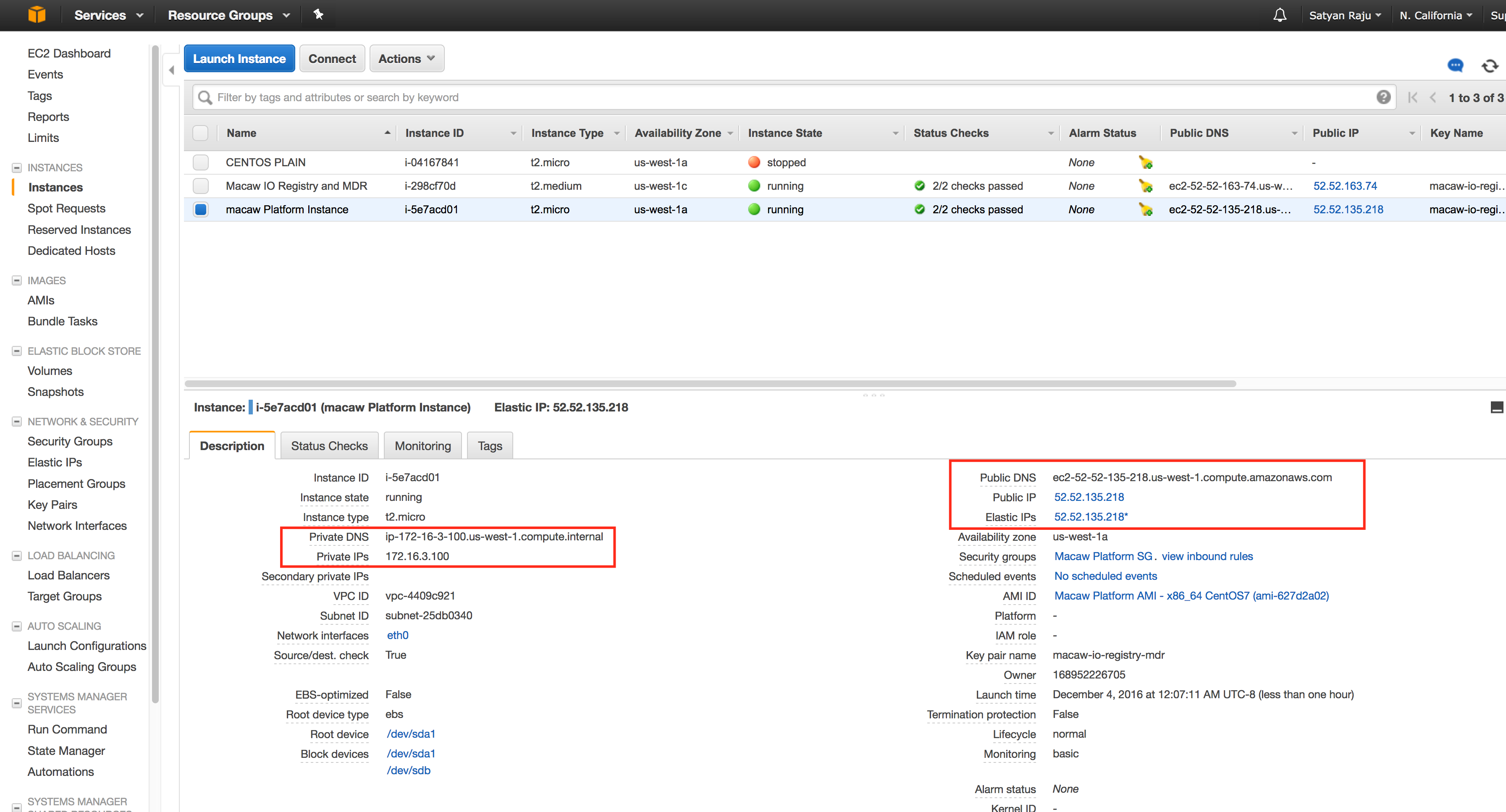

- Go to the instances option on the left side and select the platform VM to check the Elastic IP assignment.

- From here onwards, you can use this IP/DNS for accessing the platform Instance externally from anywhere.

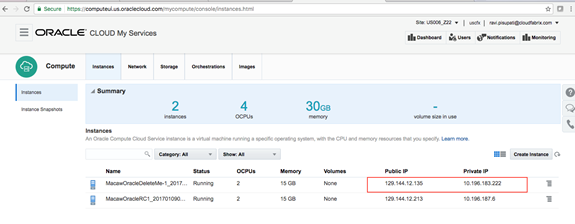

Internal IP and DNS

Each instance in EC2 would receive an internal private IP and DNS based on your VPC settings. You should be using this IP address or DNS of the platform Instance and service instances while setting up the necessary platform configuration using macaw setup describe at this link.

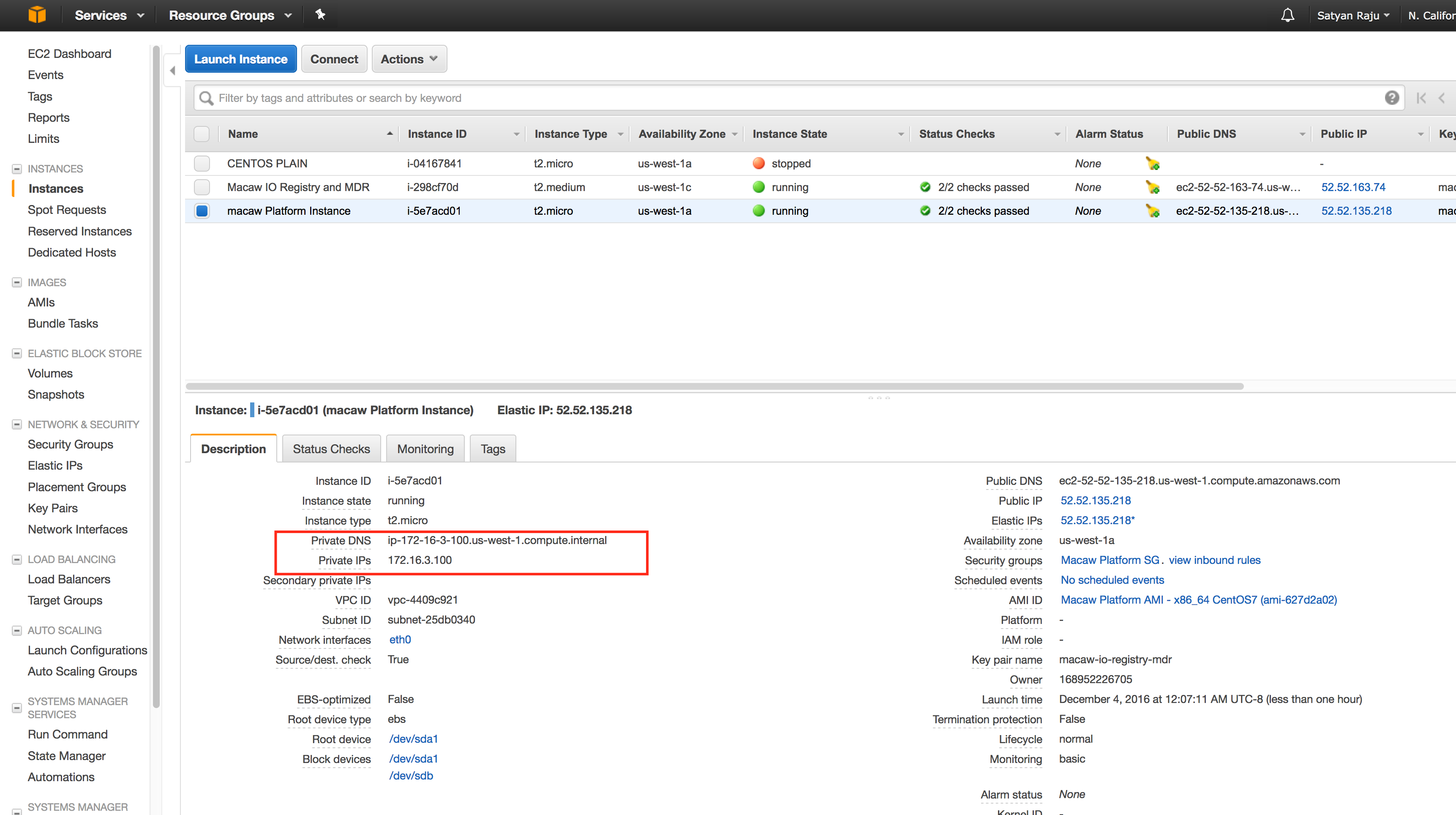

How to find my Internal IP/DNS of my AWS Instance

- Go to the EC2 Dashboard and select the Instances on the left side.

- On the right window, it would show all your instances. Identify your Platform/Services Instance and select the check box.

- You should be using either private DNS or IP for setting up the necessary platform configuration.

Note:

For Platform Instance, Elastic IP assignment is recommended so that it can be reached from the external world.

For Service Instances, Public IP assignment is optional. The only requirement is for the instances to reach the outside world. It is typically recommended to get the public IP assigned by default by the AWS so that the instance can be accessed externally for any troubleshooting.

Logging into Instances

Viewing Instance Details

- Click on the Instances in the EC2 dashboard view.

- In the list shown, select either Macaw platform or Service Instance.

The Public DNS/IP can be used to access the instance using SSH.

Accessing Instance

macaw AMI instances are based on standard x86_64 CentOS7. The AMIs are programmed with centos user and the public key of the key pair you have used during the launch of the AMIs, is programmed for this user. You can use the private key of the pair to login.

ssh -i <path to the private key> macaw@<Platform Instance Public IP>

ssh -i <path to the private key> macaw@<Service Instance Public IP>

Refer to the below AWS links for accessing the instance using the key.

https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/putty.html

https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/AccessingInstancesLinux.html

The macaw AMIs are programmed with macaw user. Password based SSH is enabled for this specific user. The default password is “macaw”. It is strongly recommended to changed the password on all the instances.

ssh macaw@<Platform Instance Public IP>

ssh macaw@<Service Instance Public IP>

You can use putty or any other standard windows based SSH client to access the Instance using SSH. From linux, SSH client is standard utility and should be available on all flavors of Linux.

macaw CLI Tool Installation

Once you finished the launching of the required AMIs, you need to install macaw CLI tool on the platform Instance. Execute the below command to install the macaw CLI packge.

sudo pip install <Location or HTTP link of macawcli tar Package>

When you registered for the macaw software download, the email confirmation will provide you a link to the macaw CLI package. Download the package locally to platform instance or you can use the direct HTTP/HTTPS link in the above command.

Next Steps

Please refer to the platform installation and follow the guidelines..

Vagrant

Vagrant provides easy to configure, reproducible, and portable work environments built on top of industry-standard technology. Vagrant is controlled by a single consistent workflow to help maximize the productivity and flexibility of the user and their team. For more details on Vagrant Installation, refer to the Vagrant documentation.

Macaw platform can be installed locally on a Windows desktop/MAC running Vagrant/Virtual Box. The below documentation assumes that working installation of Vagrant and Virtual box on the system are in place. The recommended version of Vagrant and virtual box are listed below.

Vagrant Download Link | Virtual Box Download Link

Note: To install Vagrant on Windows , openssh for Windows must also be installed as a prerequisite.

$vagrant version Installed Version: 1.8.7 Latest Version: 1.8.7 You're running an up-to-date version of Vagrant! $ VBoxManage --version 5.1.10r112026

Download the macaw Box Image

- Get the http link for the box image. Please contact the Macaw support team to help provide an http link for the downloadable box.

- Execute the below command to download the macaw box image terminal window (Mac) or a Command Window (Windows).

$ vagrant box add <HTTP Link for macaw Box>

box: Loading metadata for box '<HTTP Link>'

box: Adding box 'macaw' (v1.0.0) for provider: virtualbox

box: Downloading: <HTTP Link>/boxes/macaw-1.0.0.box

box: Box download is resuming from prior download progress

box: Calculating and comparing box checksum...

box: Successfully added box 'macaw' (v1.0.0) for 'virtualbox'!

Note: Depending on network speed, the download may take some time.

3. Verify if the box has been downloaded properly using the below command.

$ vagrant box list centos/7 (virtualbox, 1609.01) macaw (virtualbox, 1.0.0)

Running the macaw Box Image

- Depending on the version of the operating system running, create a folder with any name like ‘macawplatform’. Change to that directory.

- Run the command below from either terminal window (Mac) or a Command Window (Windows)

$ mkdir macawplatform $ cd macawplatform/ $ vagrant init macaw A `Vagrant file` has been placed in this directory. You are now ready to `vagrant up` your first virtual environment! Please read the comments in the Vagrant file as well as documentation on `vagrantup.com` for more information on using Vagrant.

You need to define an environment variable MACAW_PLATFORM_VERSION=<platform Version> For Example, if you are using 0.9.4, please provide like below.

$ export MACAW_PLATFORM_VERSION=0.9.4

Note: For Windows you can use the below. Make sure to exit the Powershell Window and re-open after executing this.

The environment variable doesnt take into effect in the current powershell window.

[Environment]::SetEnvironmentVariable("MACAW_PLATFORM_VERSION", "0.9.4", "User")

$ vagrant up Bringing machine 'default' up with 'virtualbox' provider... ==> default: Importing base box 'macaw'... ==> default: Matching MAC address for NAT networking... ==> default: Checking if box 'macaw' is up to date... ==> default: Setting the name of the VM: macaw-platform-vm-0.9.4 ==> default: Fixed port collision for 22 => 2222. Now on port 2200. ==> default: Clearing any previously set network interfaces... ==> default: Preparing network interfaces based on configuration... default: Adapter 1: nat default: Adapter 2: hostonly ==> default: Forwarding ports... default: 22 (guest) => 2200 (host) (adapter 1) ==> default: Running 'pre-boot' VM customizations... ==> default: Booting VM... ==> default: Waiting for machine to boot. This may take a few minutes... default: SSH address: 127.0.0.1:2200 default: SSH username: macaw default: SSH auth method: private key default: Warning: Remote connection disconnect. Retrying... default: Warning: Remote connection disconnect. Retrying... default: Warning: Remote connection disconnect. Retrying... default: Warning: Authentication failure. Retrying... default: default: Vagrant insecure key detected. Vagrant will automatically replace default: this with a newly generated keypair for better security. default: default: Inserting generated public key within guest... default: Removing insecure key from the guest if it's present... default: Key inserted! Disconnecting and reconnecting using new SSH key... ==> default: Machine booted and ready! ==> default: Checking for guest additions in VM... ==> default: Configuring and enabling network interfaces... ==> default: Mounting shared folders... default: /vagrant => /Users/ravjanga/Documents/0.9.4.b7 ==> default: Running provisioner: macaw Registry Login (shell)... default: Running: script: docker login to registry.macaw.io ==> default: Login Succeeded ==> default: Running provisioner: macaw Infra (shell)... default: Running: script: Downloading macaw infra components ==> default: Pulling registry.macaw.io/zookeeper:macaw-v0.9.4 ==> default: Pulling registry.macaw.io/kafka:macaw-v0.9.4 ==> default: Pulling registry.macaw.io/cassandra:macaw-v0.9.4 ==> default: Pulling registry.macaw.io/elasticsearch:macaw-v0.9.4 ==> default: Pulling registry.macaw.io/mysql:macaw-v0.9.4 ==> default: Pulling registry.macaw.io/haproxy:macaw-v0.9.4 ==> default: Pulling registry.macaw.io/macaw-tomcat:macaw-v0.9.4 ==> default: Pulling registry.macaw.io/redis:macaw-v0.9.4 ==> default: Running provisioner: macaw Platform (shell)... default: Running: script: Downloading macaw platform components ==> default: Pulling registry.macaw.io/service-registry:macaw-v0.9.4 ==> default: Pulling registry.macaw.io/notification-manager:macaw-v0.9.4 ==> default: Pulling registry.macaw.io/identity:macaw-v0.9.4 ==> default: Pulling registry.macaw.io/service-provisioner:macaw-v0.9.4 ==> default: Pulling registry.macaw.io/user-preferences:macaw-v0.9.4 ==> default: Pulling registry.macaw.io/console-ui-webapp:macaw-v0.9.4 ==> default: Pulling registry.macaw.io/macaw-dbinit:macaw-v0.9.4 ==> default: Running provisioner: Installing macawcli Tool (shell)... default: Running: script: Downloading macawcli ==> default: Installing macaw CLI packge from https://macaw-amer.s3.amazonaws.com/tools/macawcli-0.9.4.tar.gz ==> default: Macawcli Tool package Installed Successfully ==> default: Running provisioner: IP/Hostname Mapping (shell)... default: Running: script: IP/Hostname Mapping ==> default: IP/Hostname Mapping in /etc/hosts successful ==> default: Machine 'default' has a post `vagrant up` message. This is a message ==> default: from the creator of the Vagrantfile, and not from Vagrant itself: ==> default: ==> default: Congratulations!! You are now ready to login to the Macaw Platform VM

Note: The vagrant Macaw box is customized with the properties listed here. Overriding the defaults can be done with a few supported environment variables.

- Memory default is set to 8GB.

- CPU count is set to 2.

- Network is set to private_network with hardcoded IP address of 192.168.33.10. This IP is reachable from the Host only. The VM will be able to access the internet. The documentation of further steps assume this IP address in installing the Macaw platform.

| Environment Variable | Purpose | Mandatory | Default Value |

| MACAW_PLATFORM_VERSION | This is to define what version of the platform version the user would like the vagrant provisioner to bootstrap. | Yes | |

| MACAW_VAGRANT_DISABLE_UPDATE_CHECK | This is to prevent vagrant from checking any updates to the box image. | No | True |

| MACAW_VAGRANT_MEMORY | Memory allocated to the user’s Vagrant Platform VM | No | 8192 |

| MACAW_VAGRANT_CPUS | CPUs allocated to the user’s Vagrant Platform VM | No | 2 |

| MACAW_VAGRANT_NW_MODE | Network Mode – Supports only “private_network” or “public_network” | No | private_network with IP 192.168.33.10 |

| MACAW_IP_ADDRESS | If private_network is selected, then the IP Address can be changed by setting this environment variable. | No | 192.168.33.10 |

| MACAW_SUBNET_MASK | If private_network is selected, then subnet mask can be changed by setting this environment variable. | No | 255.255.255.0 |

Verifications

Once the above command is successful, the user can login to the Macaw platform VM and be able to check a few things to confirm if the installation was performed smoothly.

$ vagrant ssh Last login: Sat Nov 12 23:26:12 2016 [vagrant@localhost ~]$ ip addr 1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00 inet 127.0.0.1/8 scope host lo valid_lft forever preferred_lft forever inet6 ::1/128 scope host valid_lft forever preferred_lft forever 2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000 link/ether 08:00:27:40:c1:5d brd ff:ff:ff:ff:ff:ff inet 10.0.2.15/24 brd 10.0.2.255 scope global dynamic enp0s3 valid_lft 85741sec preferred_lft 85741sec inet6 fe80::a00:27ff:fe40:c15d/64 scope link valid_lft forever preferred_lft forever 3: enp0s8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000 link/ether 08:00:27:97:8d:6c brd ff:ff:ff:ff:ff:ff inet 192.168.33.10/24 brd 192.168.33.255 scope global enp0s8 valid_lft forever preferred_lft forever inet6 fe80::a00:27ff:fe97:8d6c/64 scope link valid_lft forever preferred_lft forever 4: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN link/ether 02:42:cb:86:21:94 brd ff:ff:ff:ff:ff:ff inet 172.17.0.1/16 scope global docker0 valid_lft forever preferred_lft forever [vagrant@localhost ~]$ ping 8.8.8.8 -c 5 PING 8.8.8.8 (8.8.8.8) 56(84) bytes of data. 64 bytes from 8.8.8.8: icmp_seq=1 ttl=63 time=18.9 ms 64 bytes from 8.8.8.8: icmp_seq=2 ttl=63 time=16.1 ms 64 bytes from 8.8.8.8: icmp_seq=3 ttl=63 time=19.6 ms 64 bytes from 8.8.8.8: icmp_seq=4 ttl=63 time=297 ms 64 bytes from 8.8.8.8: icmp_seq=5 ttl=63 time=14.5 ms --- 8.8.8.8 ping statistics --- 5 packets transmitted, 5 received, 0% packet loss, time 4007ms rtt min/avg/max/mdev = 14.558/73.298/297.207/111.969 ms [vagrant@localhost ~]$ [vagrant@localhost ~]$ nproc 2 [vagrant@localhost ~]$ free -m total used free shared buff/cache available Mem: 5807 148 4040 8 1617 5426 Swap: 2047 0 2047 [vagrant@localhost ~]$ macaw -v Version: macawcli-0.9.4

Note: The Macaw tool version can be different from what is shown above.

After successful execution of the above steps, move forward with the platform installation. Follow the link for Macaw platform installation. For installing the Macaw platform, during setup the user would need to provide FQDN of the platform host. In this case, IP address 192.168.33.10 can be used for the platform IP as well as the service host IP.

Linux Host

Macaw platform is supported on generic 64 Bit Linux OS which supports Docker. The steps below provide an automated way of installing the necessary packages needed for Macaw platform and also manual steps if required. This installed script is only supported on CentOS7 64-bit OS and version 3.10 or higher of the Linux kernel.

Prerequisites

- Check for CentOS Version. It should be minimum 7.

$ more /etc/centos-release CentOS Linux release 7.3.1611 (Core) $ uname -r 3.10.0-514.16.1.el7.x86_64

- Check availability of the command curl. This command is available on most linux installations by default.

$ which curl /usr/bin/curl

- DNS and Hostname

Make sure the platform host is configured with the fully qualified host name with DNS resolution. It is highly recommended to have proper DNS entry for the host name and avoid using the/etc/hosts mapping of the host name to IP.

If DNS is not available in your network, you can use the IP Address of the host. This is not recommended in production deployments. In this have the below entry in your /etc/hosts file on the platform Host.

<Platform HostIP> <Platform Hostname>

- Firewall Settings

If firewall is disabled, the user can ignore this pre-requisite. If firewall is enabled on the Linux Host, then for platform installation the ports/services listed below need to be opened up.

firewall-cmd --list-ports 443/tcp 80/tcp 8181/tcp 4000/tcp 2181/tcp 9200/tcp 3306/tcp 8443/tcp 8637/tcp 9042/tcp 9300/tcp 9092/tcp 7000/tcp 6379/tcp 5000/tcp 9160/tcp firewall-cmd --list-services rpc-bind nfs ssh mountd

Install with Automated Script

- Log into the machine as a user who has password-less

sudo permissionsorrootprivileges. - Make sure the existing packages are up-to-date.

sudo yum update

- If yum updates the kernel, it is recommended to restart the machine.

- Run the Macaw installation script.

Note: When the user registered for the Macaw software download, the confirmation email would have a link to the MacawInstall script, a shell script.

$ bash <(curl -s https://s3.amazonaws.com/macaw-amer/tools/macawInstall.sh) --role <Role of the VM/Host> Note: Once the installation is done, make sure logout and login back again so that group permissions get updated for the user. To Prepare a Platform VM/Host pass --role platform To Prepare a Service VM/Host pass --role service

- Install the Macaw CLI Python package. The location of the macaw CLI package should be part of the Macaw download/setup email.

sudo pip install <URL / Location of the macawcli Package>

- Verify the Macaw tool by issuing the command “macaw –version”

Once the installation/verification is done, the user can move to the Platform Installation section.

Manual Installation Steps – Platform VM/Host

If installer is not used or cannot be used in your environment, the below steps can be executed manually.

-

Yum Update

Update the OS to get latest security patches.

yum update -y

Note: Please reboot the system after doing this operation.

-

NFS Client Utils

Macaw platform mandates NFS shared mount between platform and service hosts. To be able to mount NFS, the user needs to install NFS client utilitiess.

sudo yum install -y nfs-utils sudo systemctl enable rpcbind sudo systemctl start rpcbind

-

Install autofs

Autofs module is used to mount NFS volumes.

sudo yum install -y autofs sudo sed -i 's/.*auto.misc.*/\/- \/etc\/auto.macawnfs/g' /etc/auto.master sudo touch /etc/auto.macawnfs sudo systemctl enable autofs sudo systemctl start autofs

-

Install macaw Trusted certificate

sudo wget -q -P /etc/pki/ca-trust/source/anchors/ https://s3.amazonaws.com/macaw-amer/thirdparty/__macaw_io.ca-bundle sudo update-ca-trust

-

Install Docker

Follow the docker installation instructions at the below link.

https://docs.docker.com/engine/installation/linux/centos/

Below are simple instructions to install docker 1.13.1 release. It is highly recommended to follow production installation guidelines for Docker.

sudo yum-config-manager --add-repo https://s3.amazonaws.com/macaw-amer/thirdparty/docker.repo sudo yum makecache fast sudo yum -y install docker-engine-1.13.1 docker-engine-selinux-1.13.1 sudo systemctl daemon-reload sudo systemctl enable docker sudo systemctl start docker

Once the docker installation is done, please perform the below necessary post-installation steps.

sudo usermod -aG docker <current user ID>

Please logout of the current session and login again so that the groups permissions is taken into account.

Note: Current user ID is the user who would be executing the Macaw installation later as well. The user should be using this user ID across the Macaw installation steps.

-

Install JAVA

Java 1.8 must be installed under /opt/java

sudo yum install -y wget /bin/rm -rf /tmp/java-linux.tar.gz sudo mkdir -p /opt/java sudo wget -q -O /tmp/java-linux.tar.gz https://s3.amazonaws.com/macaw-amer/thirdparty/jdk-8u102-linux-x64.tar.gz sudo tar xf /tmp/java-linux.tar.gz -C /opt/java --strip-components 1 /bin/rm -rf /tmp/java-linux.tar.gz

Now update the PATH of the current user so that Java is found in the PATH. Add the below line to the ~/.bash_profile

PATH=/opt/java/bin:$PATH export PATH

Note: Make sure to logout and login back. Verify that Java is found in the PATH by executing

$ which java /opt/java/bin/java $ java -version java version "1.8.0_102" Java(TM) SE Runtime Environment (build 1.8.0_102-b14) Java HotSpot(TM) 64-Bit Server VM (build 25.102-b14, mixed mode)

-

Enable NFS Server

If the user doesn’t have an external NFS server, they can install and enable NFS on the platform host. Once installed, they can NFS export a mount from the platform host and all the service hosts can NFS mount the directory. The exporting/mounting is all done as part of the Macaw setup.

sudo yum install -y nfs-utils libnfsidmap sudo systemctl enable rpcbind sudo systemctl enable nfs-server sudo systemctl start rpcbind sudo systemctl start nfs-server sudo systemctl start rpc-statd sudo systemctl start nfs-idmapd

-

Install utilities – mysql client, wget

Install mysql client.

sudo yum install -y mysql wget

-

python environment setup

Macaw tool set supports standard Python PIP-based installation. Follow the below instructions to enable pip.

Make sure the Python version is Python 2.7.X

python --version

Enable python PIP tool and make sure it is 8.1.2 or above.

curl --silent --show-error --retry 5 https://bootstrap.pypa.io/get-pip.py | sudo python sudo pip --version

Macaw tool set uses certain Python libraries which would get installed as part of the Macaw tool installation. However, it would require certain packages to be present on the system. You can remove the package once the installation is done.

sudo yum install -y gcc kernel-devel libffi-devel python-devel openssl-devel sudo pip install <Full Local or Remote path location of macawcli.tar.gz>

Verify the successful installation of macaw tool using the below command.

macaw -v

Now the user can remove the additional packages that the user may have added above by using the below command.

sudo yum remove -y gcc kernel-devel libffi-devel python-devel openssl-devel

Once the installation is done, the user can move to the Platform Installation section.

Manual Installation Steps – Service VM/Host

If installer is not used or cannot be used in your environment, the below steps can be executed manually.

-

Yum Update

Update the OS to get latest security patches.

yum update -y

Note: Please reboot the system after doing this operation.

-

NFS Client Utils

Macaw platform mandates NFS shared mount between platform and service hosts. To be able to mount NFS, the user needs to install NFS client utilities.

sudo yum install -y nfs-utils sudo systemctl enable rpcbind sudo systemctl start rpcbind

-

Install autofs

Autofs module is used to mount NFS volumes.

sudo yum install -y autofs sudo sed -i 's/.*auto.misc.*/\/- \/etc\/auto.macawnfs/g' /etc/auto.master sudo touch /etc/auto.macawnfs sudo systemctl enable autofs sudo systemctl start autofs

-

Install macaw Trusted certificate

sudo wget -q -P /etc/pki/ca-trust/source/anchors/ https://s3.amazonaws.com/macaw-amer/thirdparty/__macaw_io.ca-bundle sudo update-ca-trust

-

Install Docker

Follow the docker installation instructions at the below link.

https://docs.docker.com/engine/installation/linux/centos/

Below are simple instructions to install docker 1.13.1 release. It is highly recommended to follow production installation guidelines for Docker.

sudo yum-config-manager --add-repo https://s3.amazonaws.com/macaw-amer/thirdparty/docker.repo sudo yum makecache fast sudo yum -y install docker-engine-1.13.1 docker-engine-selinux-1.13.1 sudo systemctl daemon-reload sudo systemctl enable docker sudo systemctl start docker

Once the docker installation is done, please perform the below necessary post-installation steps.

sudo usermod -aG docker <current user ID>

Please logout of the current session and login again so that the groups permissions is taken into account.

Note: Current user ID is the user who would be executing the Macaw installation later as well. The user should be using this user ID across the Macaw installation steps.

-

Install JAVA

Java 1.8 must be installed under /opt/java

sudo yum install -y wget /bin/rm -rf /tmp/java-linux.tar.gz sudo mkdir -p /opt/java sudo wget -q -O /tmp/java-linux.tar.gz https://s3.amazonaws.com/macaw-amer/thirdparty/jdk-8u102-linux-x64.tar.gz sudo tar xf /tmp/java-linux.tar.gz -C /opt/java --strip-components 1 /bin/rm -rf /tmp/java-linux.tar.gz

Now update the PATH of the current user so that Java is found in the PATH. Add the below line to the ~/.bash_profile

PATH=/opt/java/bin:$PATH export PATH

Note: Make sure to logout and login back. Verify that Java is found in the PATH by executing

$ which java /opt/java/bin/java $ java -version java version "1.8.0_102" Java(TM) SE Runtime Environment (build 1.8.0_102-b14) Java HotSpot(TM) 64-Bit Server VM (build 25.102-b14, mixed mode)

Platform Installation

Setup

Macaw platform configuration is done through Macaw utility. The setup section documents the full details of the platform’s configuration steps along with details for each specific configuration item.

MACAW Setup

Macaw setup assists in generating the necessary platform and provisioner configuration. This is a mandatory step for the Macaw platform deployment. The section below provides the Macaw setup sequence with a detailed explanation on various prompts.

Note: If using Macaw setup for the first, the user will be prompted for the EULA agreement. For most of the questions, the user can choose the default and make progress.

The below section shows the typical Macaw setup prompts with contextual help. Refer to additional documentation highlighted in blue (The highlighted text is not part of the Macaw setup output).

$ macaw setup @@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@ @ The macaw setup will guide you through sequence of steps prompting you with default values and auto @ @ generate platform and provisioner configuration. The default values are picked up from your existing @ @ platform configuration (if any) or suggested based on your host configuration. @ @ @ @ If you are manually editing the platform configuration after auto generation, please refer to the @ @ documentation. @ @ Location of Platform configuration file: ~/platform.cfg @ @ Refer to ~/platform.README for more details on the platform configuration options. @ @@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@ Please confirm to continue. [yes/no]: yes +--------------------+----------------------------------------+ | System | Details | +--------------------+----------------------------------------+ | OS | Linux | | Kernel | 3.10.0-327.36.2.el7.x86_64 | | Distribution | ('CentOS Linux', '7.2.1511', 'Core') | | Memory Total | 32774672 KB | | Memory Available | 31925112 KB | | CPU Cores | 8 | +--------------------+----------------------------------------+ This is the FQDN of the platform host (your current host). FQDN needs to be fully DNS resolvable. Adding to /etc/hosts will not be sufficient. If you do not have DNS configured properly, you can provide IP address. It is strongly suggested to use FQDN names. Since the services are containerized it is not going to work if you are simply adding the hostname and IP address mapping in the /etc/hosts file. The DNS resolution should be through a proper DNS server. FQDN of platform Host [platform-190.qa.macaw.io]: platform-190.qa.macaw.io Provide Service Hosts. If you have multiple hosts, please provide them comma separated. DNS resolvable FQDN is recommended. Adding to /etc/hosts will not be sufficient. If you do not have DNS configured properly, you can provide IP address. The user needs to provide comma separated service hosts. Same recommendation on FQDN applies here as well. This is the zookeeper endpoint. Default is chosen below. This assumes the user is running zookeeper on the platform host. FQDN of services hosts (, separated if more than 1): platform-190.qa.macaw.io This is the zookeeper endpoint. Default is chosen below. This assumes the user is running zookeeper on the platform host. This is the user's zookeeper end point. Zookeeper can be run on any machine. By default macaw tool provisions the zookeeper container on the platform. Hence, the user sees the default selection as the platform host. If zookeeper is configured on a different host, provide the same host followed by the zookeeper port. Same recommendation on FQDN applies here as well. Zookeeper endpoint [platform-190.qa.macaw.io:2181]: This is the kafka endpoint. Default is chosen below. This assumes the user is running kafka on the platform host. This is the user's kafka end point. kafka can be run on any machine. By default macaw tool provisions the zookeeper container on the platform. Hence the user sees the default selection as the platform host. If kafka is configured on a different host, provide the same host followed by the kafka port. Same recommendation on FQDN applies here as well. Kafka endpoint [platform-190.qa.macaw.io:9092]: This is the MYSQL DB endpoint. Default is chosen below. This assumes the user is running MYSQL on the platform host. MYSQL endpoint [platform-190.qa.macaw.io:3306]: MYSQL User. If the user is already using an existing mysql installation, please make sure this user has the right access permissions. If the user is installing mysql via 'macaw infra install', this user will be automatically programmed for access. Also all default users of the system will be deleted. MYSQL Credentials. If the user is provisioning the mysql through macaw tool, the mysql is automatically provisioned with this user. If not, this user is expected to be configured with read/write privileges to mysql and also enable remote connection for the same user. MYSQL user [root]: MYSQL password for the above user. MYSQL password [Nl71dm31@12]: This is the cassandra DB endpoint. Default is chosen below. This assumes the user is running cassandra on the platform host. Cassandra endpoint [platform-190.qa.macaw.io:9042]: Cassandra User. If the user is already using an existing cassandra installation, please make sure this user has the right access permissions. If the user is installing mysql via 'macaw infra install', this user will be automatically programmed for access. Also all default users of the system will be deleted. Cassandra DB credentials. cassandra/cassandra are the default credentials. If the user prefers to change this is the time to change. The user will be configured by the macaw tool in the cassandra DB. Cassandra user [cassandra]: Cassandra password for the above user. Cassandra password [cassandra]: This is the Elasticsearch DB endpoint. Default is chosen below. This assumes the user running Elasticsearch on the platform host. Elasticsearch host (DB) [platform-190.qa.macaw.io:9300]: This is the Elasticsearch Log endpoint. Default is logging to elastic search is disabled. Please refer to documentation on how to enable this before bootstrapping the macaw platform. Elasticsearch host (Logging) []: MACAW platform uses common NFS mount across the platform and service VMs to be able to share the data on the mount points. Please provide an end NFS mount point. By default this is pointing to platform VM. If the user selects the default option, then the user needs to have the necessary NFS Server packages installed on the platform VM. The below config would be reflected in the user's platform configuration. [nfs] host = <NFS Host> remote_mount = <Shared Mount on NFS> local_mount = <Local mount point to NFS mount> Note: If NFS utilities are not installed on the platform and Service VM, select the defaults and then skip autosetup of the NFS mount and the user can update the ~platform.cfg manually once they setup the NFS mounts manually by following the documentation. NFS Server [platform-190.qa.macaw.io]: NFS Server Mountpoint [/opt/macaw-shared]: Local NFS Mount [/opt/appd-shared-9ba77ea521865282]: This is deployment config directory where we store certificates and configurations. The sensitive data like certificates/keys are given restrictive permissions and can only be read & written by the current user. Certificate Repository: $deploymentDir/certificates Common Configuration: $deploymentDir/common Provisioner Configuration: $deploymentDir/provisioner Systems Keys: $deploymentDir/keys Deployment config directory [/opt/macaw-config]: When self-signed certificates are generated, Macaw uses this as the pass phrase for the truststores. If the user is providing their own certificates (Please refer to documentation on how to) and if the trust store is protected by passphrase please provide that passphrase here. Pass-phrase for certificate Trust stores [macaw@1234]: Platform configuration saved to: /home/macaw/platform.cfg Creating the configuration directory: /opt/macaw-config Service Provisioning Environment configuration saved to: /opt/macaw-config/provisioner/macaw-service-provisioner.properties Creating the platform macaw user Platform and Provisioner configuration are sync'ed. Key already exists at: /home/macaw/.ssh/id_rsa. Deploying SSH Key: /home/macaw/.ssh/id_rsa.pub to remote hosts SSH already deployed to: platform-190.qa.macaw.io for user: macaw Deploying self-signed certificates. Follow help/documentation for replacing them. Certificate Repository: /opt/macaw-config/certificates macaw-info: Certificate for: Self Signed CA macaw-info: Location: /opt/macaw-config/certificates/ca macaw-info: Generating Self-Signed CA certificate macaw-info: Importing Trusted CAs into Trust store macaw-info: Certificate generation - Success macaw-info: Certificate for: haproxy macaw-info: Location: /opt/macaw-config/certificates/haproxy macaw-info: Now generating the haproxy server certificate macaw-info: Changing permissions and granting restrictive access macaw-info: Certificate generation - Success macaw-info: Certificate for: serviceregistry macaw-info: Location: /opt/macaw-config/certificates/serviceregistry macaw-info: Now generating the serviceregistry server certificate macaw-info: Changing permissions and granting restrictive access macaw-info: Certificate generation - Success macaw-info: Certificate for: mdr macaw-info: Location: /opt/macaw-config/certificates/mdr macaw-info: Now generating the mdr server certificate macaw-info: Changing permissions and granting restrictive access macaw-info: Certificate generation - Success macaw-info: Certificate for: dockerregistry macaw-info: Location: /opt/macaw-config/certificates/dockerregistry macaw-info: Now generating the dockerregistry server certificate macaw-info: Changing permissions and granting restrictive access macaw-info: Certificate generation - Success macaw-info: Certificate for: kafka macaw-info: Location: /opt/macaw-config/certificates/kafka macaw-info: Now generating the kafka server certificate macaw-info: Changing permissions and granting restrictive access macaw-info: Certificate generation - Success Do you want NFS setup to be configured? This will require sudo access to the current user. Please confirm to continue. [yes/no]: yes Enabling NFS Server on the platform VM NFS Server enabled on Platform VM Verifying NFS mount... NFS mount successful: platform-190.qa.macaw.io Copying trust store to shared location for services: /opt/appd-shared-9ba77ea521865282/certificates/truststore MACAW Setup done. $

Once the Macaw setup is complete, the user should see platform.cfg, macaw-service-provisioner.properties and macaw-resource-profiles.json. If they need to make any modifications, they should be made before installing infrastructure and platform configurations. For particular information on platform and provisioner configurations, the user can read the README files that reside in the same directory as configuration files.

Refer to the Configuration section on a precise explanation of platform and provisioner configuration. It further elaborates on what changes can be made and what needs to be done after changing the auto-generated configuration.

$ ls -lat ~/platform.cfg -rw-------. 1 macaw macaw 3478 Nov 25 12:11 /home/macaw/platform.cfg $ ls -lat ~/platform.README -rw-r--r--. 1 macaw macaw 9298 Nov 25 12:11 /home/macaw/platform.README $ $ ls -lat /opt/macaw-config/provisioner/macaw-service-provisioner.properties -rw-------. 1 macaw macaw 3152 Nov 25 12:11 /opt/macaw-config/provisioner/macaw-service-provisioner.properties $ ls -lat /opt/macaw-config/provisioner/macaw-service-provisioner.README -rw-r--r--. 1 macaw macaw 6327 Nov 25 12:11 /opt/macaw-config/provisioner/macaw-service-provisioner.README $ $ ls -lat /opt/macaw-config/provisioner/macaw-resource-profiles.json -rw-------. 1 cfx cfx 7955 Feb 4 14:24 /opt/macaw-config/provisioner/macaw-resource-profiles.json

Platform Configuration

Macaw setup auto-generates the platform configuration. Once the platform configuration is auto-generated, if you make any changes to the configuration, make sure to execute the command ‘macaw sync’ which keeps the platform configuration and provisioner configuration in sync.

To under details of the platform configuration, refer to the platform.README file under the home directory.

Provisioner Configuration

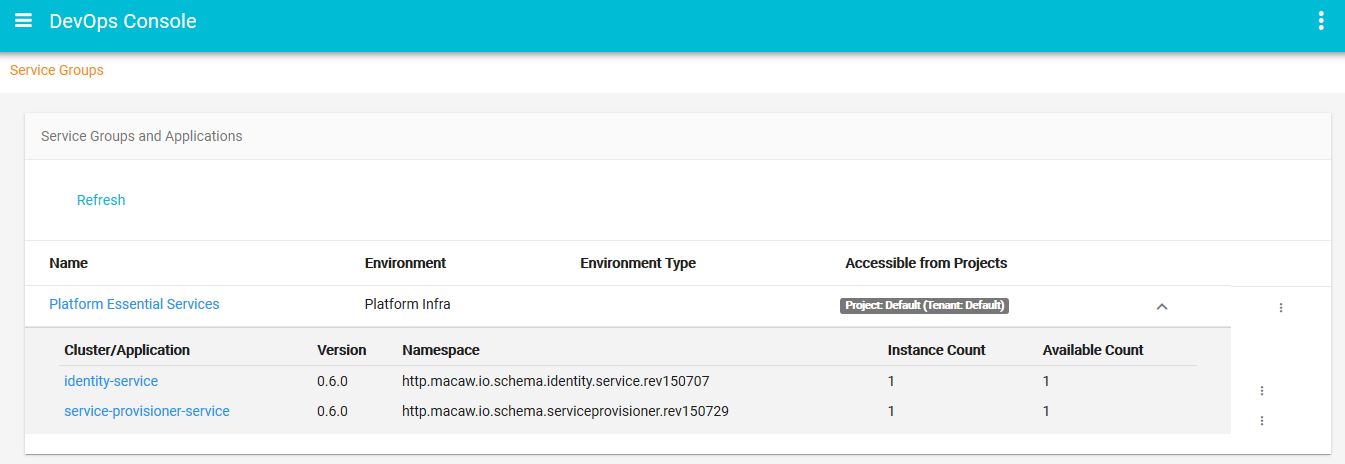

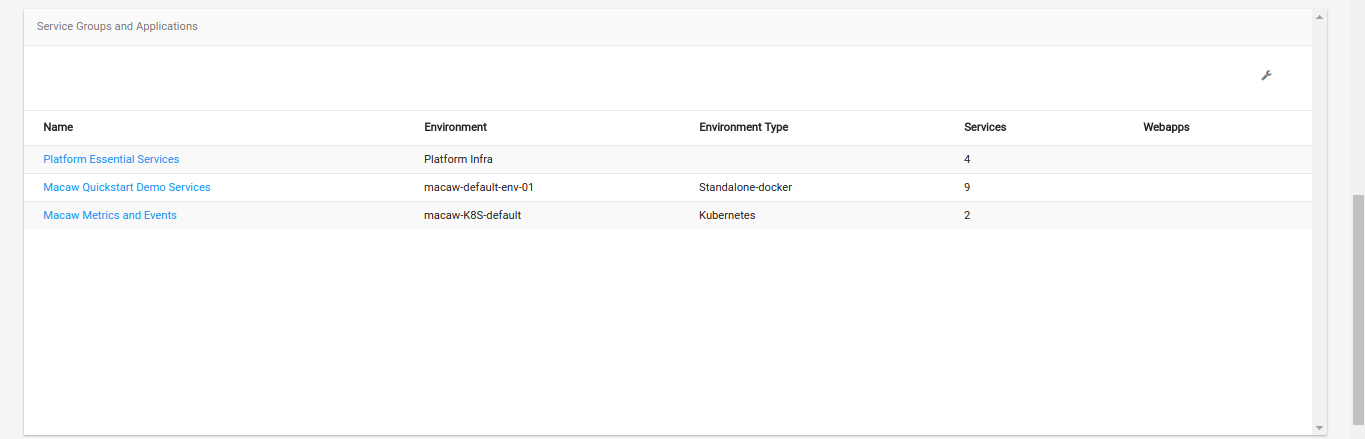

macaw-service-provisioner.properties is a JSON file which feeds Macaw service provisioner with environments where the user can provision their microservices. The JSON specification is an array of environment definitions. A default environment is mandated and created during the macaw setup with inputs provided for service hosts.

Each environment is required to provide this group of mandatory details:

- Fields of ID, name, type.

- Docker enabled Service End Hosts where your microservices are provisioned. Instead of providing service end hosts, the environment can also refer to a swarm cluster manager or Kubernetes master which in turn manages the cluster of docker nodes. (Refer to How to Enable Kubernetes Environment)

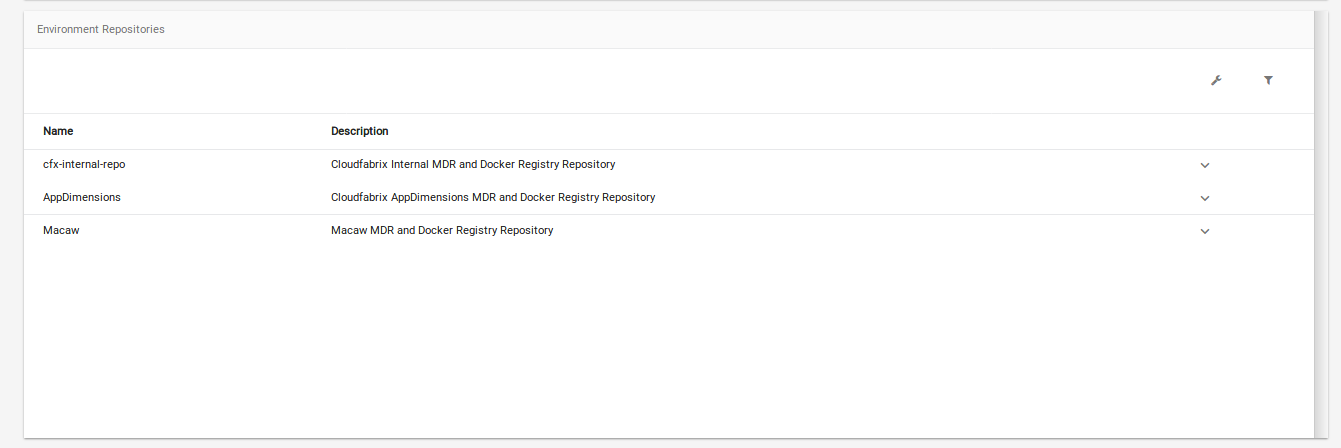

- Repositories: Array of MDR/Docker Registry pairs: Each repository is a pair of MDR (Service Meta Data Repository) and Docker Registry End point. During the provisioning time from the macaw-console, an option will be given to select a specific repository. Once a repository is selected, the user will be shown the available service blueprints from the MDR. When a provisioning request is issued after selecting a specific blueprint, the Macaw provisioner will be using the docker registry in that selected repo and will be downloading the container images. This data is absolutely necessary to be able to provision service blueprints via Macaw console. For more details on MDR, please refer to the Macaw documentation.

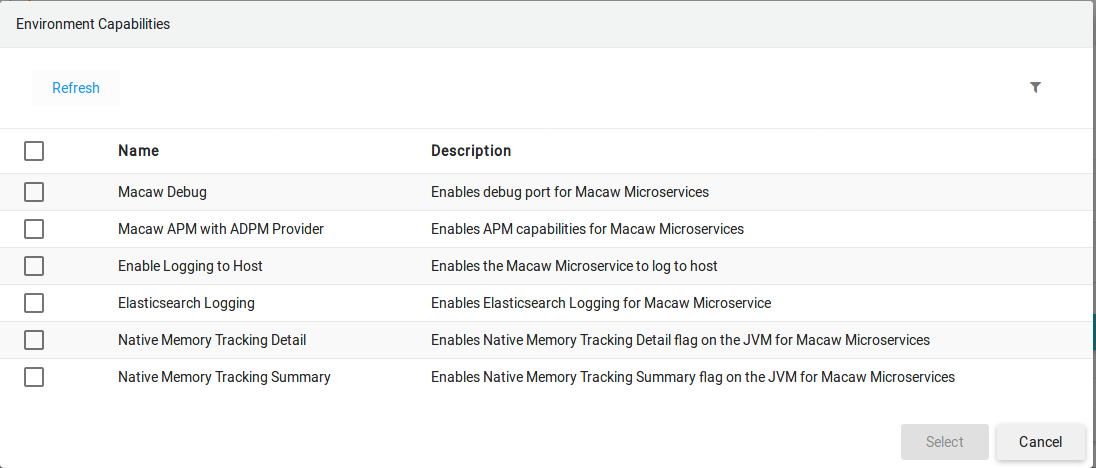

- List of capabilities. Below are the various capabilities an environment can provide.

restart-policy – This is the docker container restart policy setting. Any container deployed into this environment will inherit this policy.

https://docs.docker.com/engine/reference/run/#/restart-policies-restart

dns-configuration for containers – This is automatically appended to the capabilities during the macaw setup based on the user’s DNS settings defined in the platform configuration. The user can override this if needed.

log-configuration for containers – This setting controls the JSON log settings for the deployed containers into the environment.

volumes – These are the mandatory values that would be attached to every container deployed in this environment. For example, the user can store the truststore on a common NFS location and mount this to every container. The Service Blueprint doesn’t need to know about this specific mount point. These volumes are controlled and mandated by the environment.

storage – This defines the various mount/volumes that your environment can provide. Based on the name, the user’s service can request that it needs the specific volume. The provisioner/macaw console does the check before the provisioning if the user service blueprint is requesting volumes which are supported by the environment. If not, an error would be shown to the user. More details on the storage would be found under the Service Blueprints section.

env-variables – Mandatory environment variables for any container deployed in this environment.

resource-profiles – The list of resource profiles this environment can support. The resource profiles are defined in macaw-resource-profiles.json. Essentially through the resource profiles the user’s control the memory/cpu reservations/limits to the container. The environment enforces a default resource profile setting. If a service doesn’t request any specific resource profile the provisioner applies the default resource profile. For more details refer to macaw documentation. Sample JSON file.

opt-in-capabilities – This provides unique capabilities to the macaw platform to enable optional features like Debug, Memory Tracking for the deployed features. During the Service Blueprint deployment into a specific environment, the user can select any one or multiple of these optional capabilities to be applied to the deployed service. This JSON object defines each unique optional capability of the environment. Within the capability, you can specify resources like volumes, ports, labels, environment variables. If the capability is selected during the provisioning time, then volumes, ports, labels, environments defined under this capability are applied to the deployed services. Using this unique way user can define custom optional services.

{

"environments": [

{

"type": "standalone-docker",

"id": "dda6e47c-e594-5a0a-9637-c747f090cc24",

"machines": [

{

"pass-phrase": "",

"ip": "macaw-s1.engr.cloudfabrix.com",

"login": "macaw",

"version": "7.1",

"os": "centos"

},

{

"pass-phrase": "",

"ip": "macaw-s2.engr.cloudfabrix.com",

"login": "macaw",

"version": "7.1",

"os": "centos"

}

],

"ui-pairs": [

{

"url": "https://macaw-p.engr.cloudfabrix.com",

"name": "platform_uipair",

"description": "Platform HAProxy and Tomcat",

"volumes": [

{

"path": "/warfiles",

"sub-path": "platform_uipair",

"name": "MACAW_PLATFORM_MOUNT",

"read-write-mode": "rw"

}

],

"port": 443

}

],

"name": "macaw-default-env-01",

"repositories": [

{

"mdr": {

"protocol": "https",

"name": "Internal MDR",

"host": "10.95.100.10",

"repo": "dev",

"token": "6f009a42-9a9a-4cfa-a248-fb82deff8a12",

"version": "v2",

"port": 8639,

"description": "This is the internal development MDR"

},

"docker-registry": {

"username": "macaw",

"protocol": "https",

"name": "Internal Docker Registry",

"port": 5000,

"host": "cfx-docker-01.engr.cloudfabrix.com",

"password": "password",

"email": "macaw@www.macaw.io",

"description": "This is the internal development docker registry"

},

"name": "cfx-internal-repo",

"description": "Development MDR and Docker Registry Repository"

},

{

"mdr": {

"protocol": "http",

"name": "macaw onprem MDR",

"token": "dda6e47c-e594-5a0a-9637-c747f090cc24",

"repo": "dev",

"host": "macaw-p.engr.cloudfabrix.com",

"version": "v2",

"port": 8637,

"description": "This is the onprem MDR"

},

"docker-registry": {

"username": "macaw",

"protocol": "https",

"name": "macaw onprem Docker Registry",

"port": 5000,

"host": "macaw-p.engr.cloudfabrix.com",

"password": "macaw@local",

"email": "macaw@local.com",

"description": "This is the onprem docker registry"

},

"name": "onprem MDR/Docker",

"description": "onprem installed MDR/Docker"

}

],

"capabilities": {

"restart-policy": {

"name": "unless-stopped"

},

"installation": [],

"resource-profiles": {

"default": "macaw-rp-mwr.small",

"supported": [

"macaw-rp-mwr.small",

"macaw-rp-mwr.medium",

"macaw-rp-mwr.large",

"macaw-rp-mwr.2xlarge",

"macaw-rp-mwr.4xlarge",

"macaw-rp-mwr.8xlarge",

"macaw-rp-mwr.iota",

"macaw-rp-mwr.iota-small",

"macaw-rp-mwr.iota-medium"

]

},

"env-variables": [

{

"name": "MACAW_SSL_TRUSTSTORE_LOCATION",

"value": "/opt/macaw/secrets/truststore/ca_truststore"

},

{

"name": "MACAW_SSL_TRUSTSTORE_PASSWORD",

"value": "macaw@1234"

}

],

"labels": [

{

"name": "io.macaw.type",

"value": "macaw-micro-services"

}

],

"storage": [

{

"path": "/opt/java",

"name": "JAVA_1.8",

"read-write-mode": "ro"

},

{

"path": "/opt/macaw-platform-dda6e47ce5945a0a",

"name": "MACAW_PLATFORM_MOUNT",

"read-write-mode": "rw"

},

{

"path": "/opt/appd-shared-dda6e47ce5945a0a",

"name": "MACAW_ENVIRONMENT_DEFAULT_MOUNT",

"read-write-mode": "rw"

}

],

"dns-configuration": {

"search-domain": [],

"opts": [],

"server": []

},

"volumes": [

{

"path": "/opt/java",

"name": "JAVA_1.8",

"read-write-mode": "ro"

},

{

"path": "/opt/macaw/secrets/truststore",

"sub-path": "certificates/truststore",

"name": "MACAW_PLATFORM_MOUNT",

"read-write-mode": "ro"

}

],

"log-configuration": {

"enable": true,

"driver": {

"name": "json-file",

"opts": {

"max-size": "10m",

"max-file": 5

}

}

}

},

"opt-in-capabilities": {

"debug": {

"name": "Macaw Debug",

"labels": [

{

"name": "io.macaw.debug.port",

"value": "enabled"

}

],

"env-variables": [

{

"name": "MACAW_ENABLE_DEBUG_PORT",

"value": "true"

}

],

"actions": [],

"volumes": [],

"ports": [

{

"protocol": "tcp",

"endpoint": "Debug",

"type": "dynamic",

"name": "Macaw Debug Port",

"port": 8787

}

],

"description": "Enables debug port for Macaw Microservices"

},

"nmt-summary": {

"name": "Native Memory Tracking Summary",

"labels": [

{

"name": "io.macaw.jvm.nativememory",

"value": "summary"

}

],

"env-variables": [

{

"name": "MACAW_JVM_ARGS_ADDITIONAL",

"value": "-XX:NativeMemoryTracking=summary"

}

],

"actions": [],

"volumes": [],

"ports": [],

"description": "Enables Native Memory Tracking Summary flag on the JVM for Macaw Microservices"

},

"logtoES": {

"name": "Elasticsearch Logging",

"labels": [

{

"name": "io.macaw.logging.elasticsearch",

"value": "true"

}

],

"env-variables": [

{

"name": "MACAW_PLATFORM_LOGGING_URL",

"value": "http://macaw-p.engr.cloudfabrix.com:9200/_bulk"

}

],

"actions": [],

"volumes": [],

"ports": [],

"description": "Enables Elasticsearch Logging for Macaw Microservice"

},

"nmt-detail": {

"name": "Native Memory Tracking Detail",

"labels": [

{

"name": "io.macaw.jvm.nativememory",

"value": "detail"

}

],

"env-variables": [

{

"name": "MACAW_JVM_ARGS_ADDITIONAL",

"value": "-XX:NativeMemoryTracking=detail"

}

],

"actions": [],

"volumes": [],

"ports": [],

"description": "Enables Native Memory Tracking Detail flag on the JVM for Macaw Microservices"

},

"adpm": {

"name": "Macaw APM with ADPM Provider",

"labels": [

{

"name": "io.macaw.apm.provider",

"value": "adpm"

}

],

"env-variables": [

{

"name": "MACAW_APM_PROVIDER",

"value": "adpm"

},

{

"name": "MACAW_APM_CENTRAL_REPO",

"value": "macaw-p.engr.cloudfabrix.com:8181"

}

],

"actions": [

{

"url": "https://demo.macaw.io/adpm",

"name": "Launch ADPM"

}

],

"volumes": [

{

"path": "/opt/macaw/apm",

"sub-path": "apm/adpm/agent",

"name": "MACAW_PLATFORM_MOUNT",

"read-write-mode": "rw"

}

],

"ports": [],

"description": "Enables APM capabilities for Macaw Microservices"

}

}

}

]

}

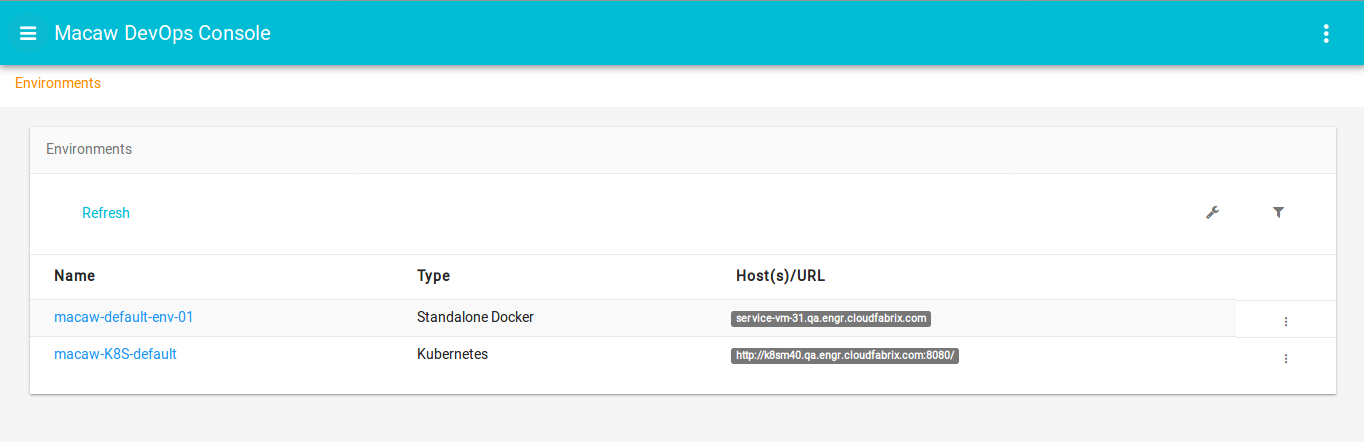

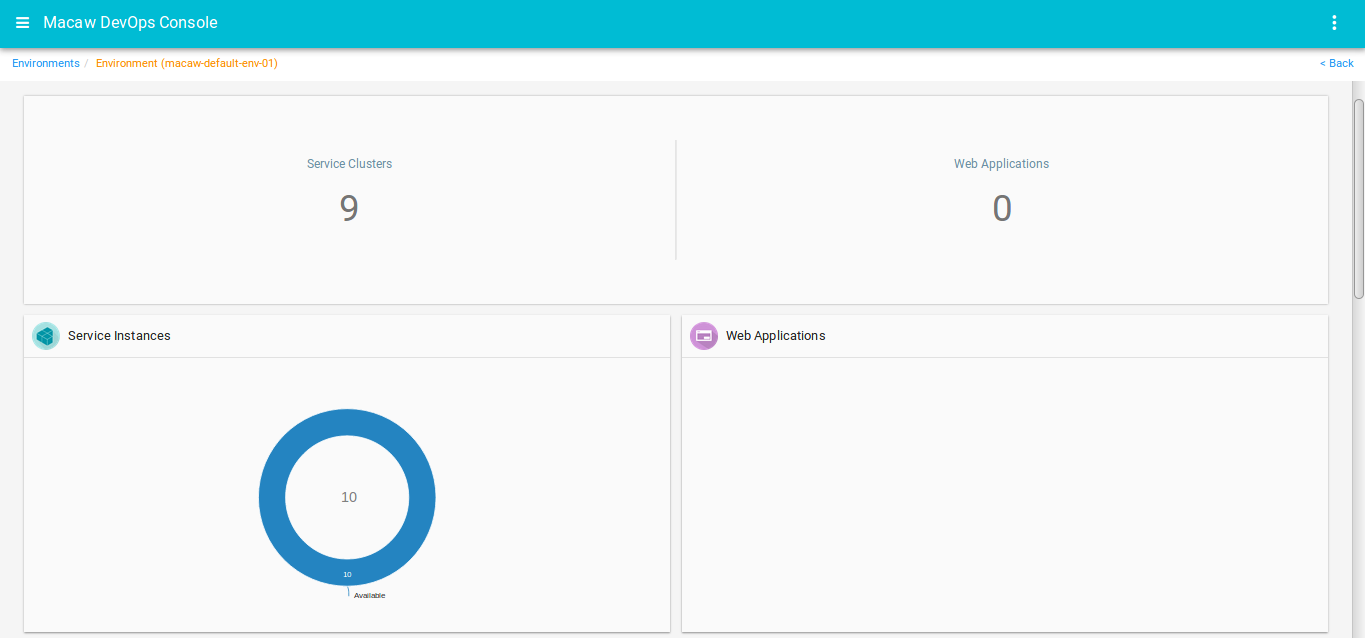

Environments

Macaw Platform segregates compute resources into environments. During the Service Blueprint deployment, you provision into an environment which is either backed by individual docker hosts or a Kubernetes cluster or swarm cluster.

Macaw supports the following provisioning environments:

- Standalone Docker Hosts

- Swarm Cluster

- Kubernetes Cluster (Kubernetes v1.5.2)

Standalone Docker Hosts

A group of individual docker hosts can be grouped together under an environment. The only criteria for grouping the nodes is: the storage definition defined under the environment should be accessible from all the nodes in the environment. For example, if you have defined a storage mount like below in the environment, then it is expected that all the nodes provide this storage.

"storage": [

{

"path": "/opt/java",

"name": "JAVA_1.8",

"read-write-mode": "ro"

}

]

Though Macaw Platform doesn’t enforce this, it is highly recommended to group hosts with same Docker Version, Storage Mounts, CPU/Memory resources into an environment.

As part of the Macaw setup, it is mandated to provide a single service host which is then used to create a default environment.

Below is how compute resources are provided in the environment definition.

"machines": [

{

"pass-phrase": "",

"ip": "macaw-s1.engr.cloudfabrix.com",

"login": "macaw",

"version": "7.1",

"os": "centos"

},

{

"pass-phrase": "",

"ip": "macaw-s2.engr.cloudfabrix.com",

"login": "macaw",

"version": "7.1",

"os": "centos"

}

]

During the Macaw setup phase, password less SSH communication is established to these service endpoints from the platform host. Without this password-less SSH provisioning requests would fail.

Kubernetes

Macaw Platform provides the capability to provision services onto the Kubernetes cluster. Below are the requirements before a Kubernetes environment can be created.

- Kubernetes Master

- Credentials – User/password or Token based Authentication

- Namespace – If no namespace is available, default can be provided. Any non-default namespace should be created upfront on the Kubernetes.

- Mandatory PVCs and any optional PVCs required by services should be created up front on the K8 cluster

In the below section we will cover in detail on how to enable Kubernetes environment in Macaw platform. Below is a sample K8 environment JSON spec.

{

"environments": [

{

"id": "9ba77ea5-2186-5282-9b88-93b373a59f32",

"name": "macaw-k8-env-01",

"type": "kubernetes",

"kubernetes-master": {

"url": "http://k8-master-qa.qa.engr.cloudfabrix.com:8080",

"username": "user@k8",

"password": "password",

"namespace": "macaw",

"auth": "basic-auth"

},

"repositories": [

{

"name": "macaw-k8-repo",

"description": "Internal MDR and Docker Registry Repository",

"mdr": {

"protocol": "https",

"name": "Internal MDR",

"host": "1.1.1.1",

"repo": "dev",

"token": "6f009a42-9a9a-4cfa-a248-fb82d12f8a01",

"version": "v2",

"port": 8639,

"description": "This is the internal development MDR"

},

"docker-registry": {

"username": "foo",

"protocol": "https",

"name": "Internal Docker Registry",

"port": 5000,

"host": "docker-01.local.host",

"password": "123456",

"email": "macaw@www.macaw.io",

"description": "This is the internal development docker registry"

}

}

],

"ui-pairs": [

{

"url": "https://macaw-p.engr.cloudfabrix.com",

"name": "platform_uipair",

"description": "Platform HAProxy and Tomcat",

"volumes": [

{

"path": "/warfiles",

"sub-path": "platform_uipair",

"name": "MACAW_PLATFORM_MOUNT",

"read-write-mode": "rw"

}

],

"port": 443

}

],

"capabilities": {

"restart-policy": {

"name": "unless-stopped"

},

"installation": [],

"resource-profiles": {

"default": "macaw-rp-mwr.small",

"supported": [

"macaw-rp-mwr.small",

"macaw-rp-mwr.medium",

"macaw-rp-mwr.large",

"macaw-rp-mwr.2xlarge",

"macaw-rp-mwr.4xlarge",

"macaw-rp-mwr.8xlarge",

"macaw-rp-mwr.iota",

"macaw-rp-mwr.iota-small",

"macaw-rp-mwr.iota-medium"

]

},

"env-variables": [

{

"name": "MACAW_ENV_VARIABLE_NAMES_TRANSLATOR",

"value": "FIXED_SET_ENV_NAMES_TRANSLATOR"

},

{

"name": "MACAW_SSL_TRUSTSTORE_LOCATION",

"value": "/opt/macaw/secrets/truststore/ca_truststore"

},

{

"name": "MACAW_SSL_TRUSTSTORE_PASSWORD",

"value": "macaw@1234"

}

],

"labels": [

{

"name": "io.macaw.type",

"value": "macaw-micro-services"

}

],

"storage": [

{

"persistent-volume-claim": {

"claim-name": "macaw.installation.java",